First dataset split: 1620 training / 180 validation images (9:1)
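A 9:1 split like this one can be produced with a seeded shuffle. The sketch below is a minimal illustration that assumes the images are available as a list of file names. `split_dataset`, the file names and the seed are hypothetical; this is not the script actually used for these experiments.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence[str], val_ratio: float, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle a copy of `items` with a fixed seed and split off a validation subset.

    Hypothetical helper: the validation size is rounded to the nearest integer.
    """
    if not 0.0 < val_ratio < 1.0:
        raise ValueError("val_ratio must be strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # local RNG, global random state untouched
    n_val = round(len(shuffled) * val_ratio)
    return shuffled[n_val:], shuffled[:n_val]


# 1800 placeholder image names split 9:1
images = [f"img_{i:04d}.jpg" for i in range(1800)]
train, val = split_dataset(images, val_ratio=0.1, seed=42)
print(len(train), len(val))  # 1620 180
```

Fixing the seed keeps the split reproducible, so later runs on the same split remain comparable.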

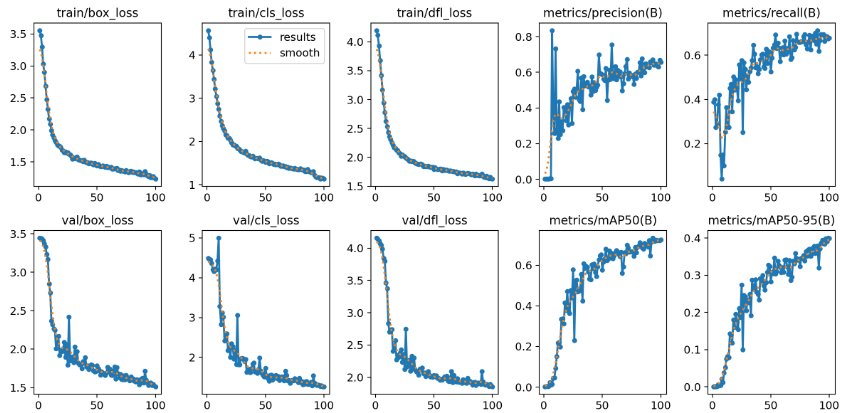

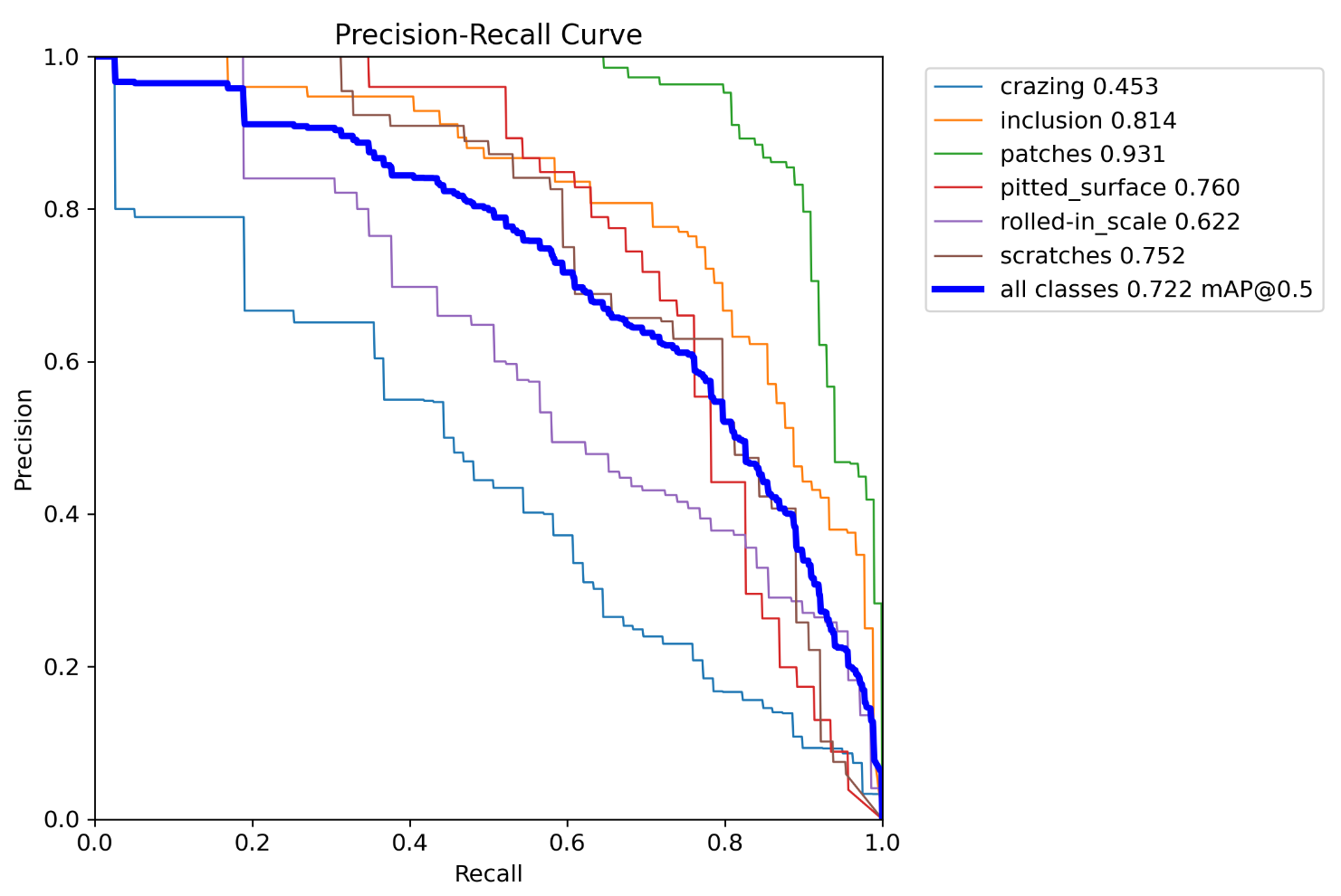

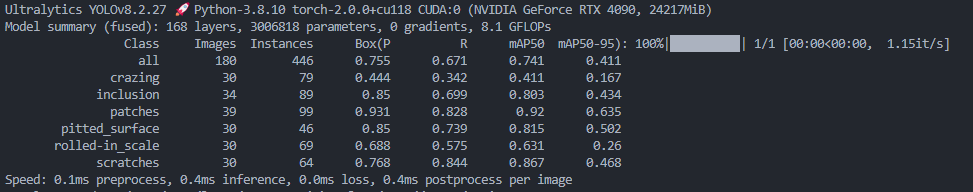

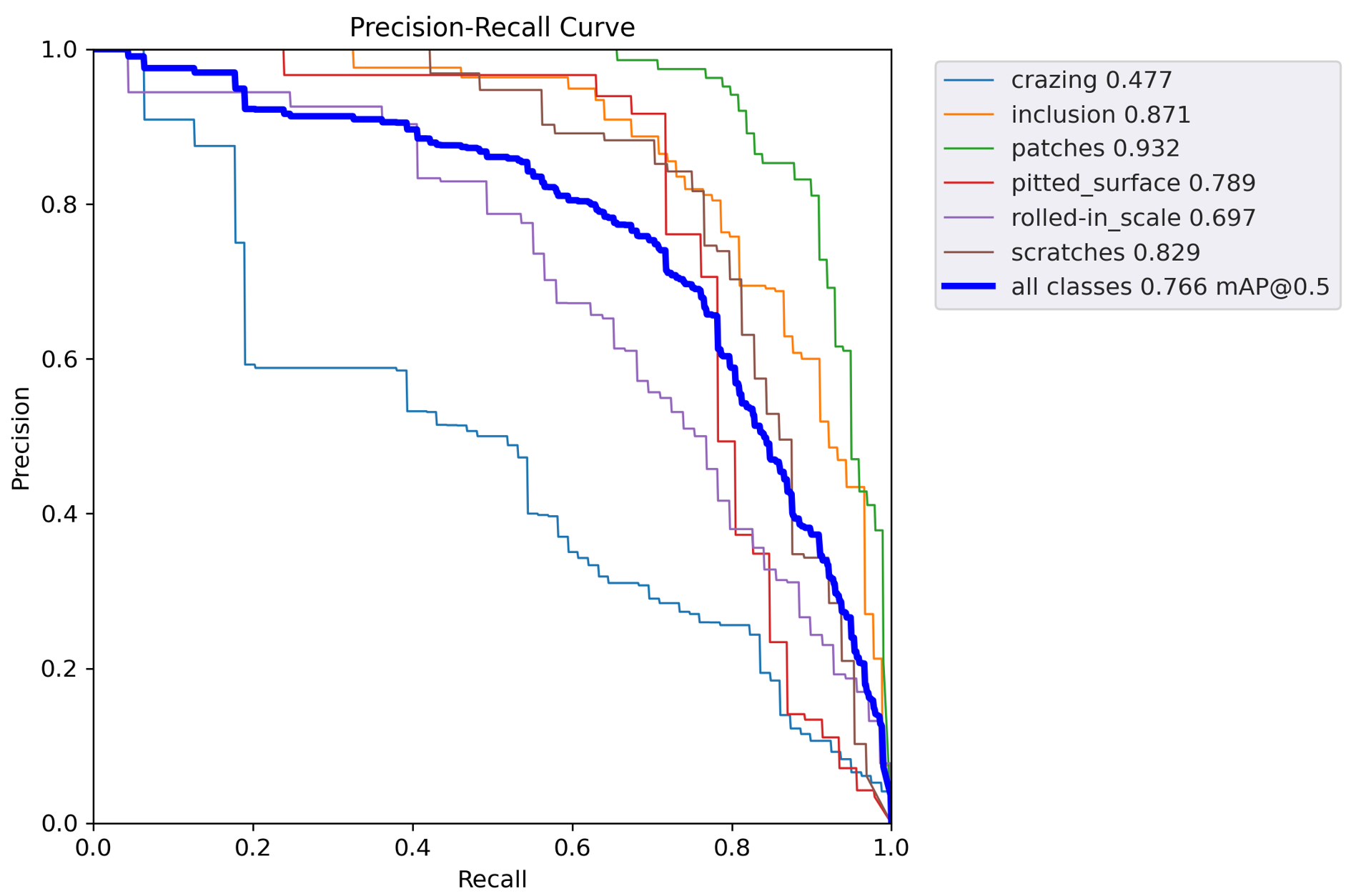

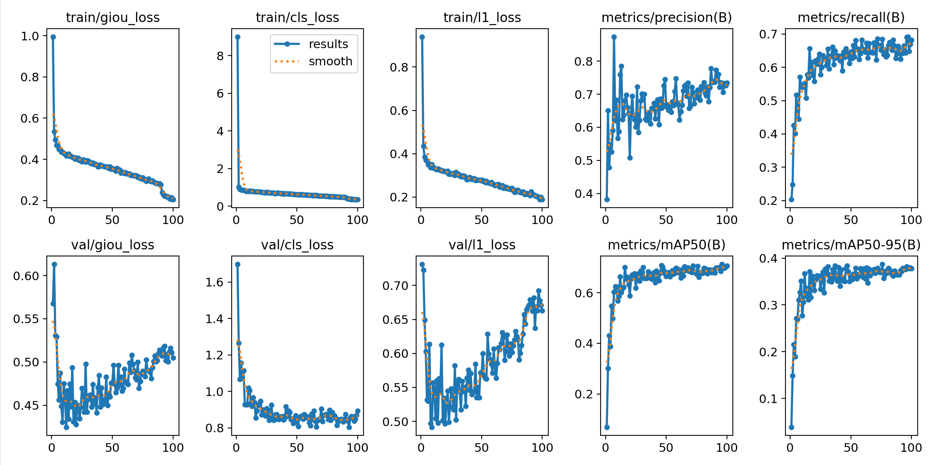

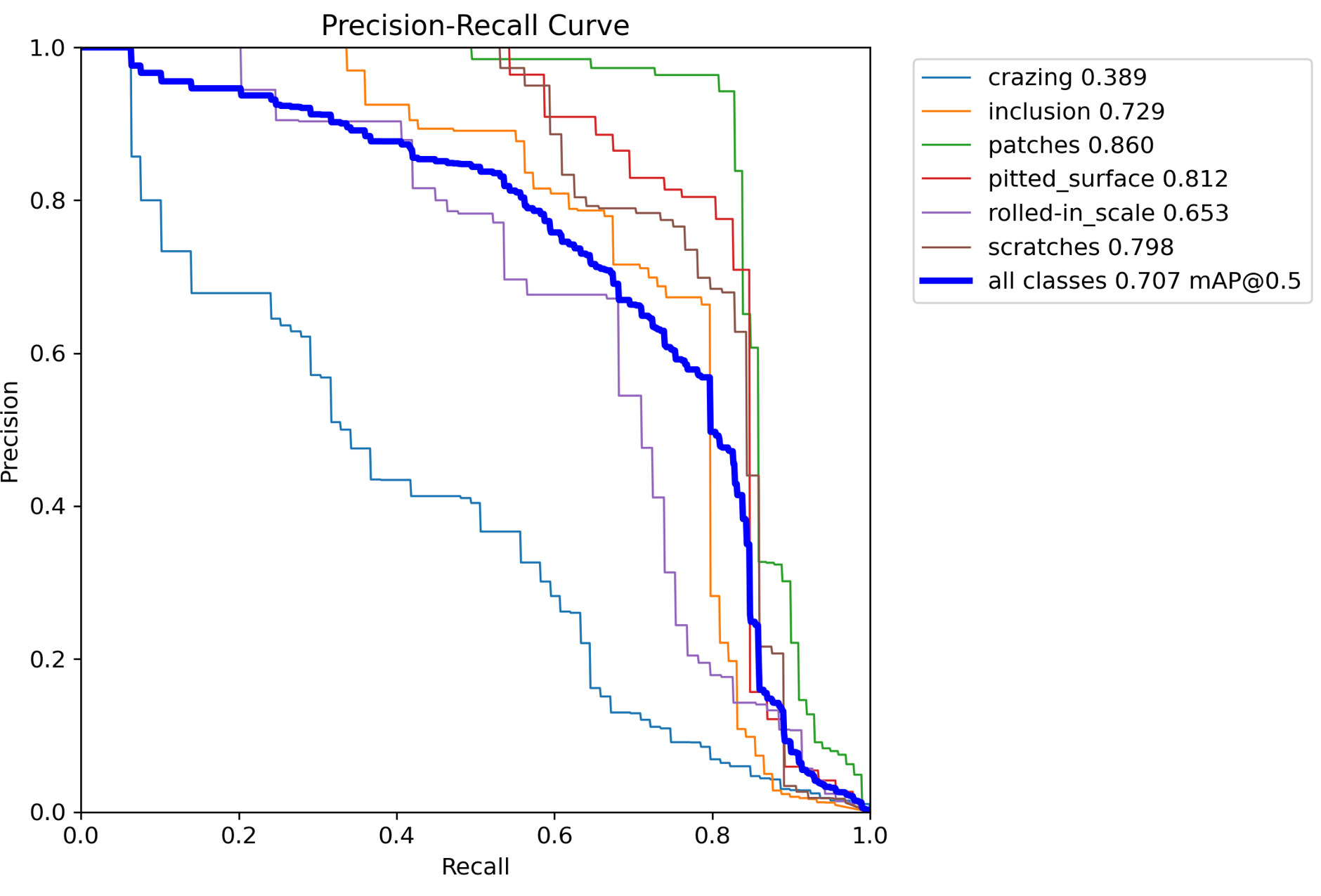

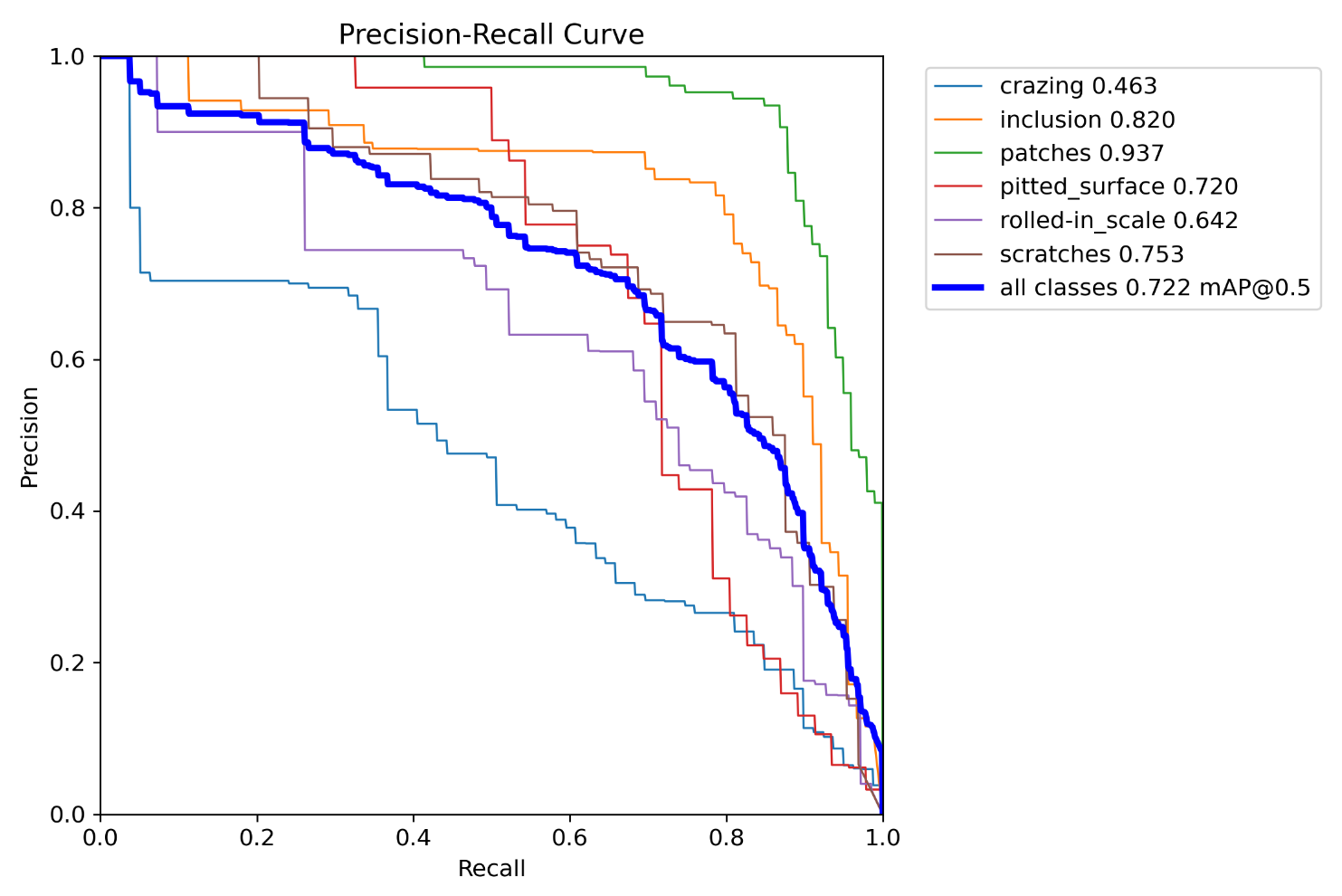

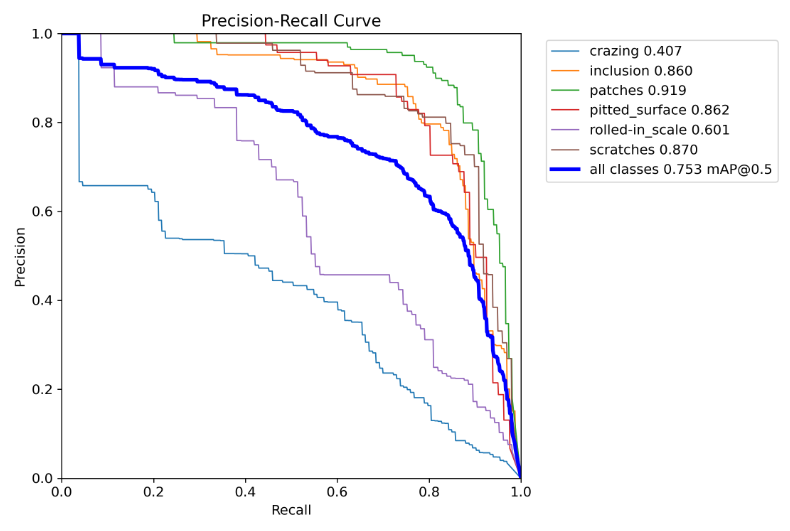

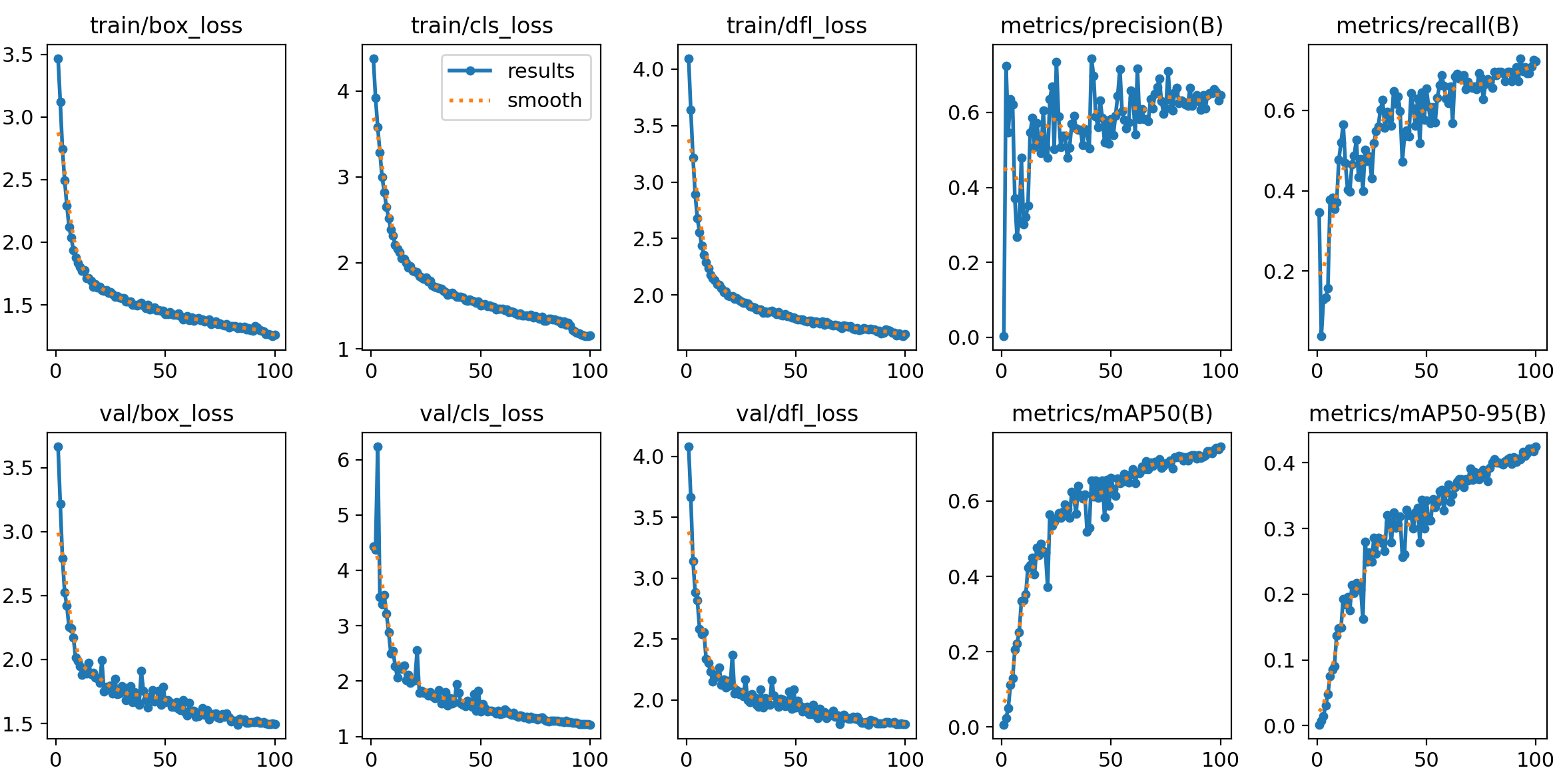

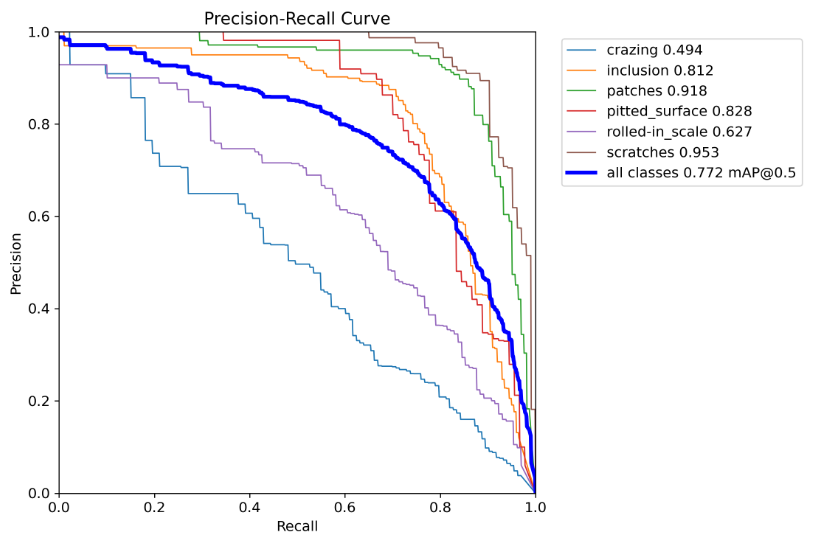

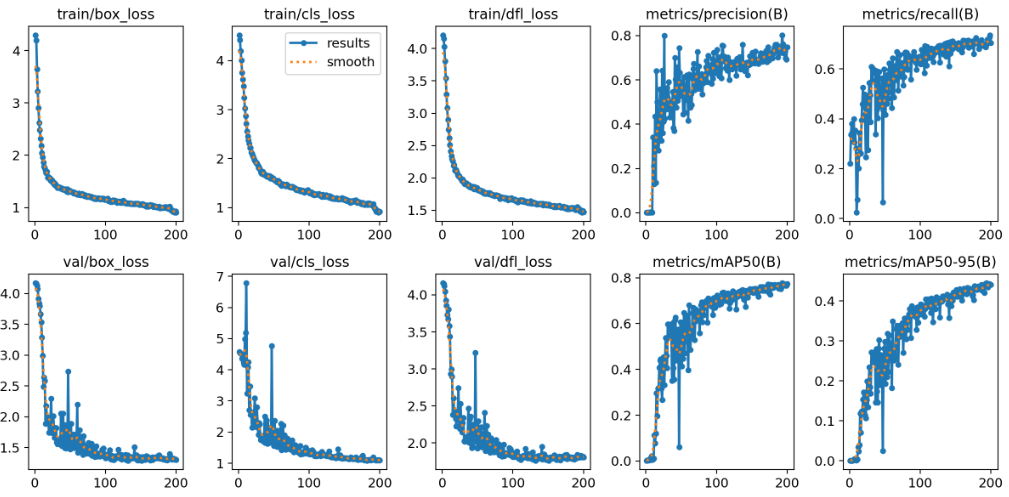

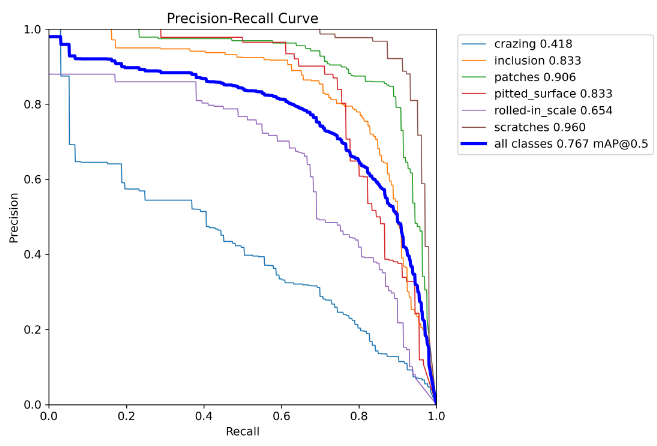

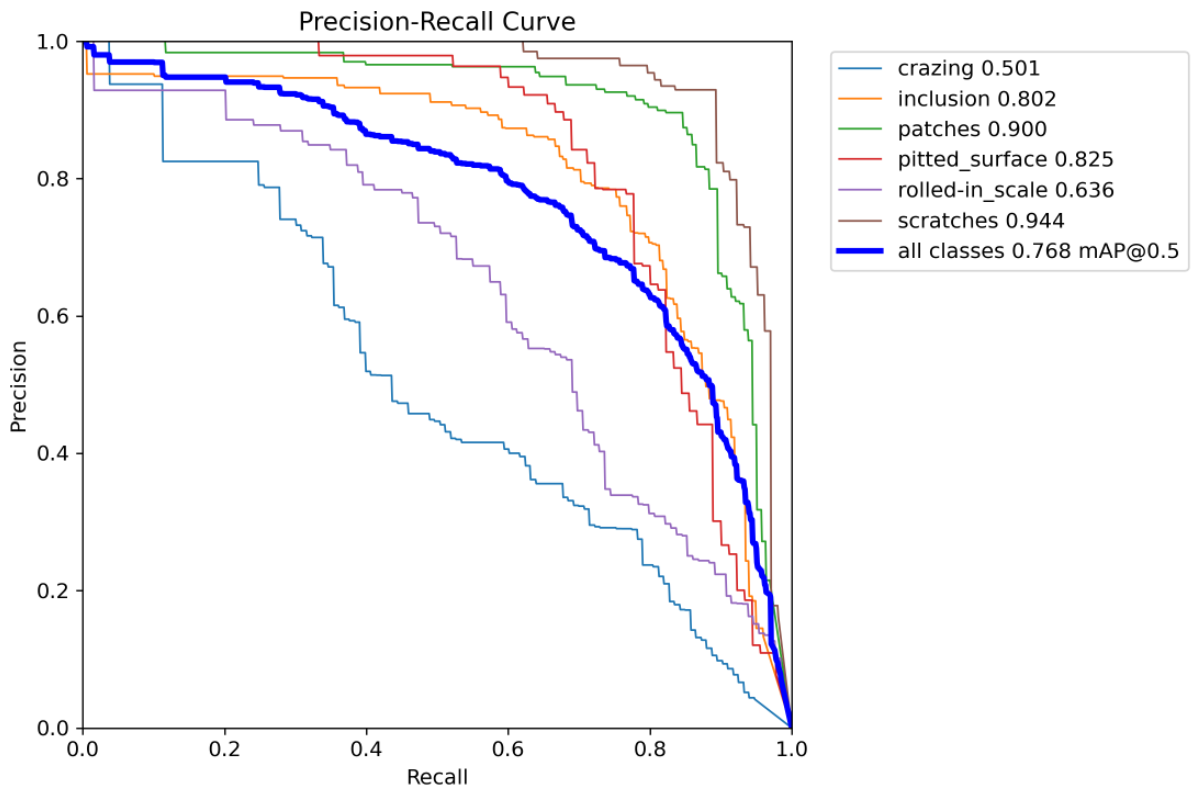

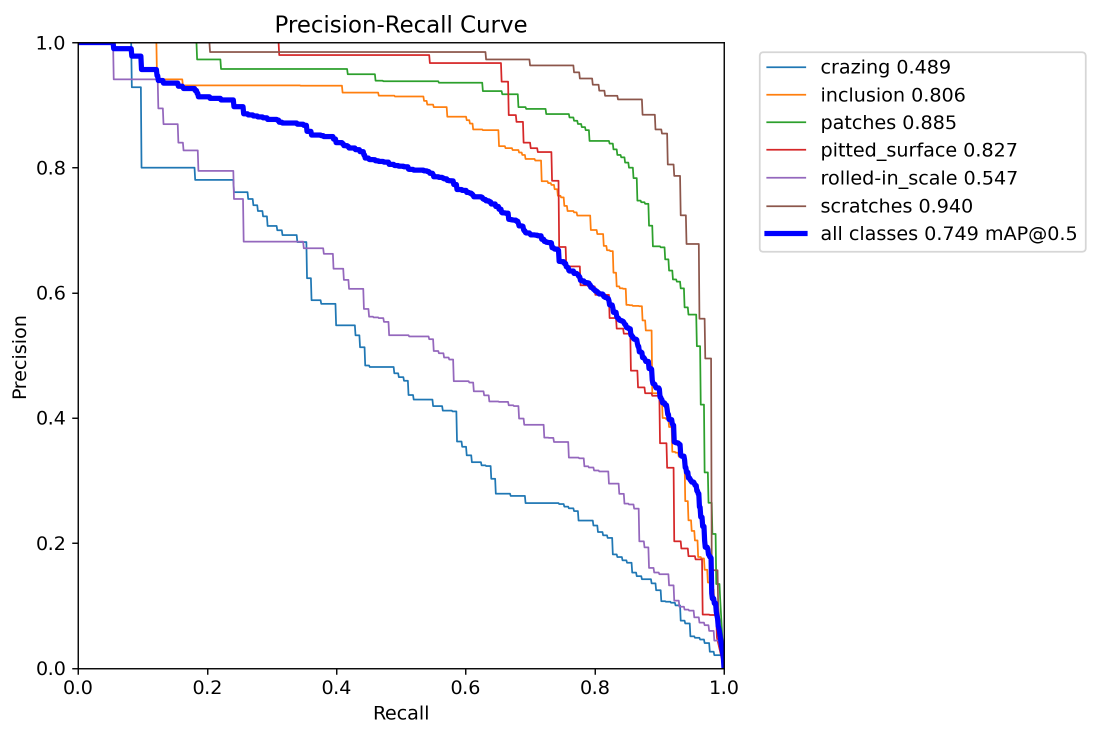

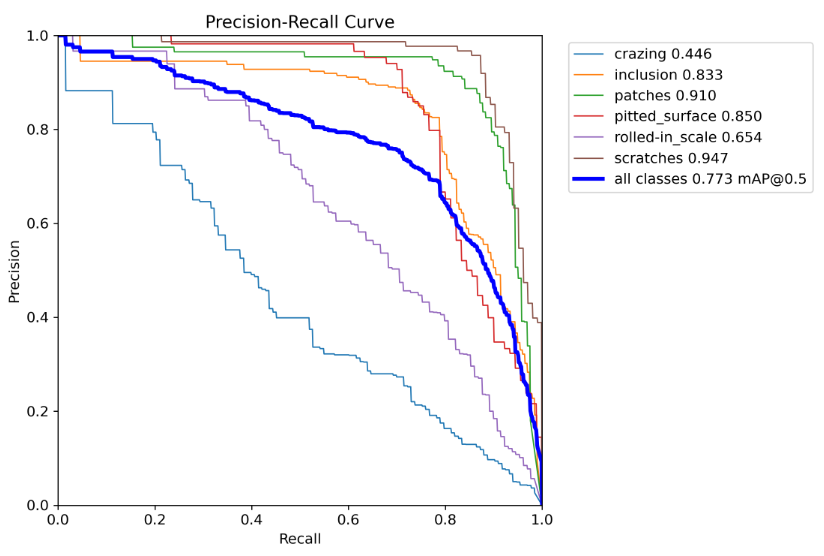

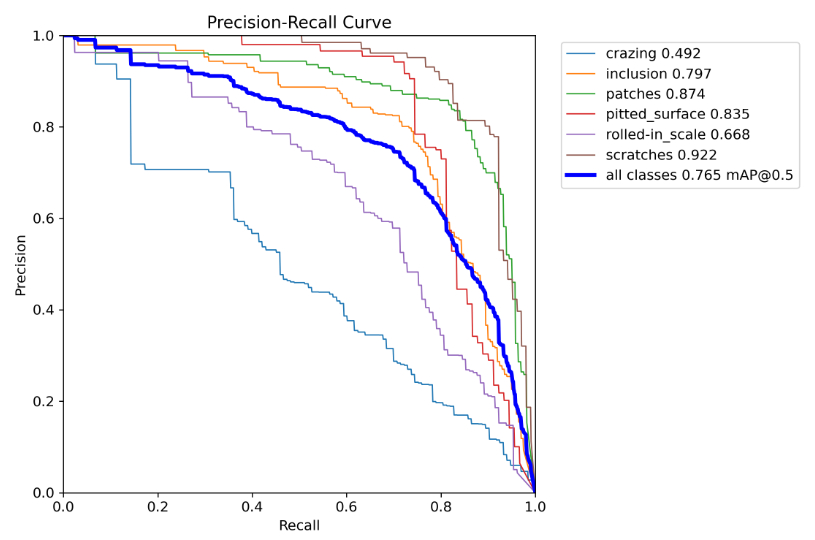

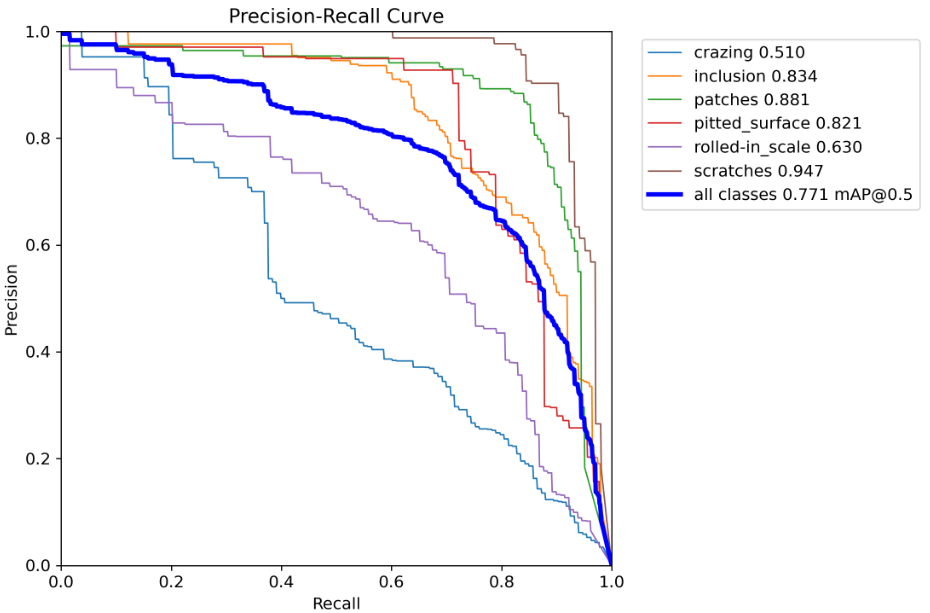

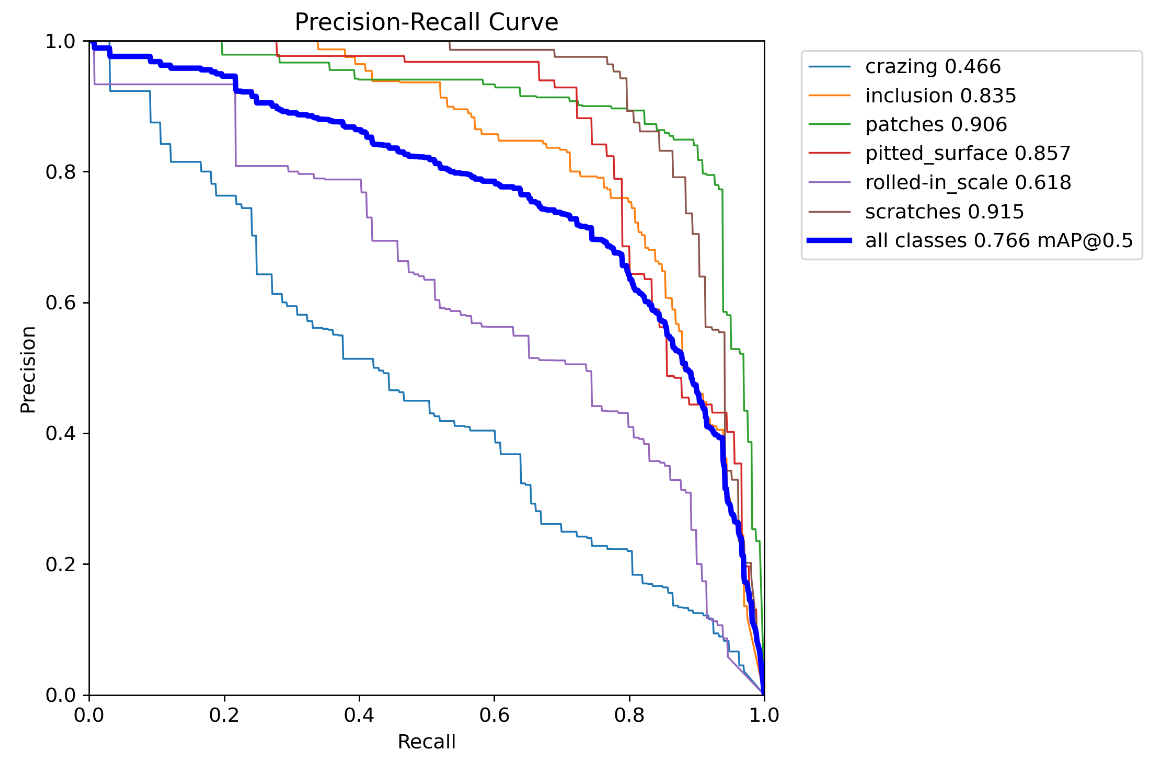

Original V8 (72.2)

Settings

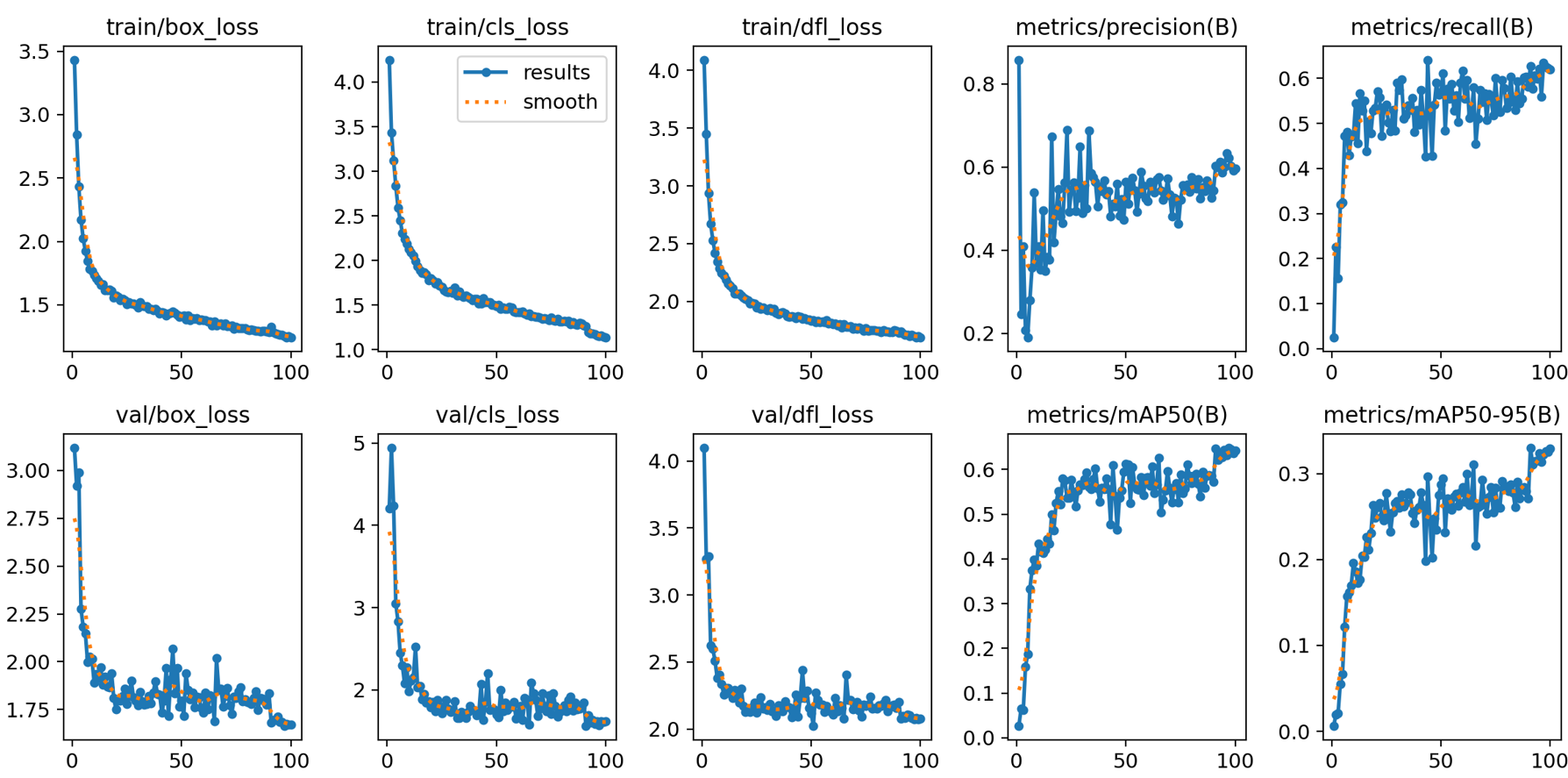

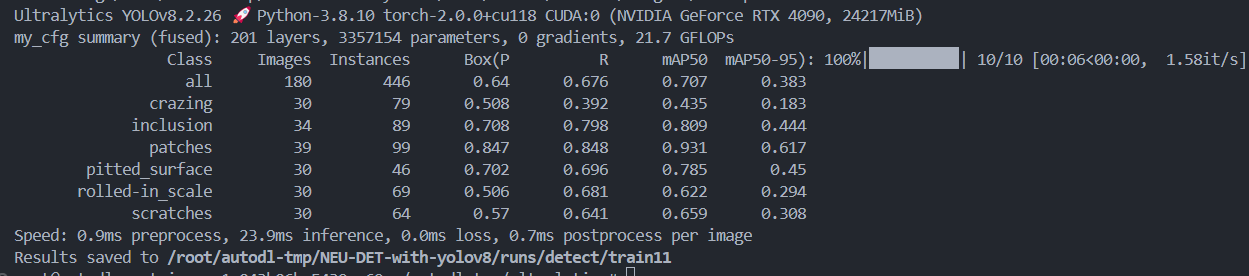

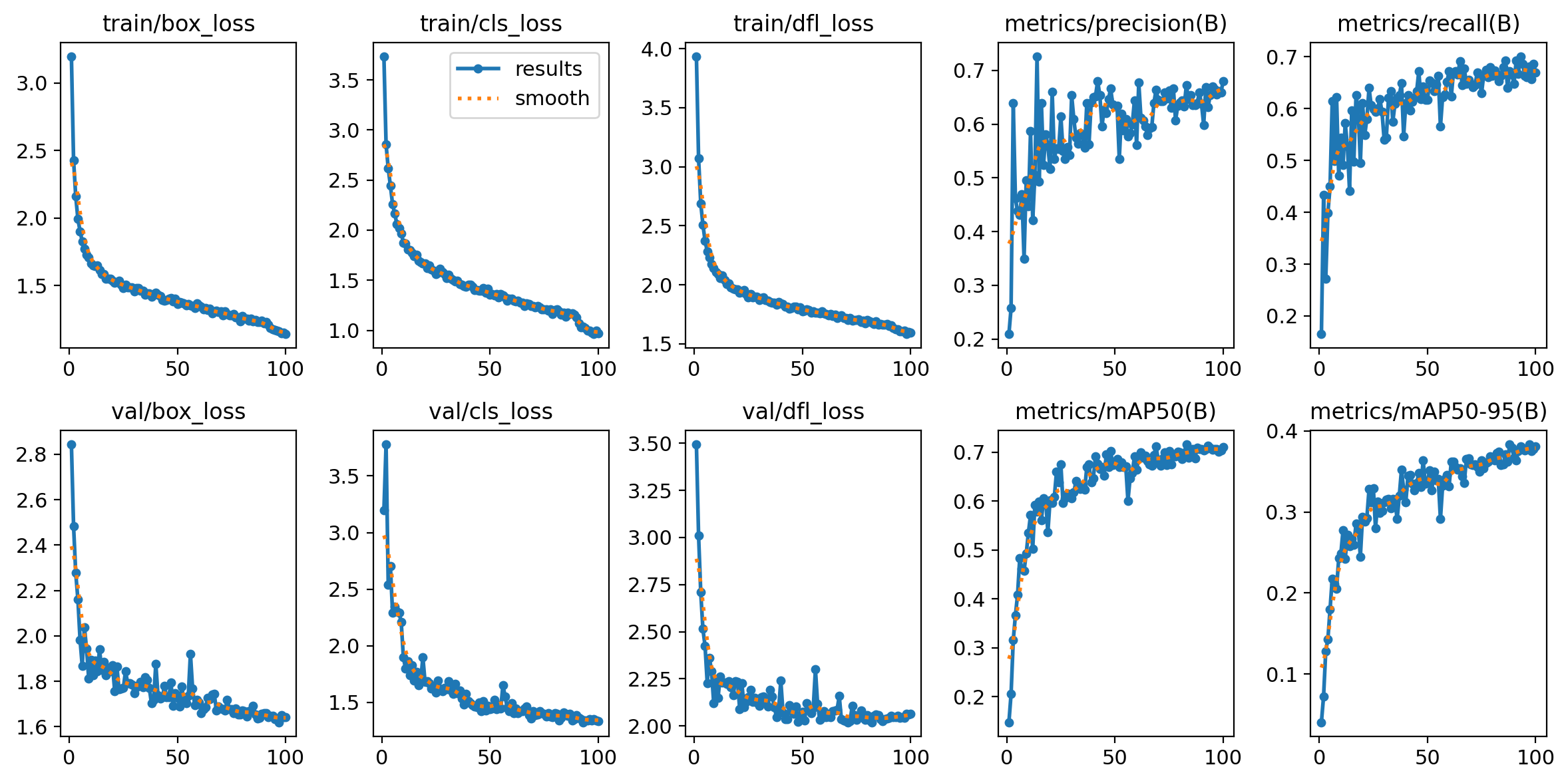

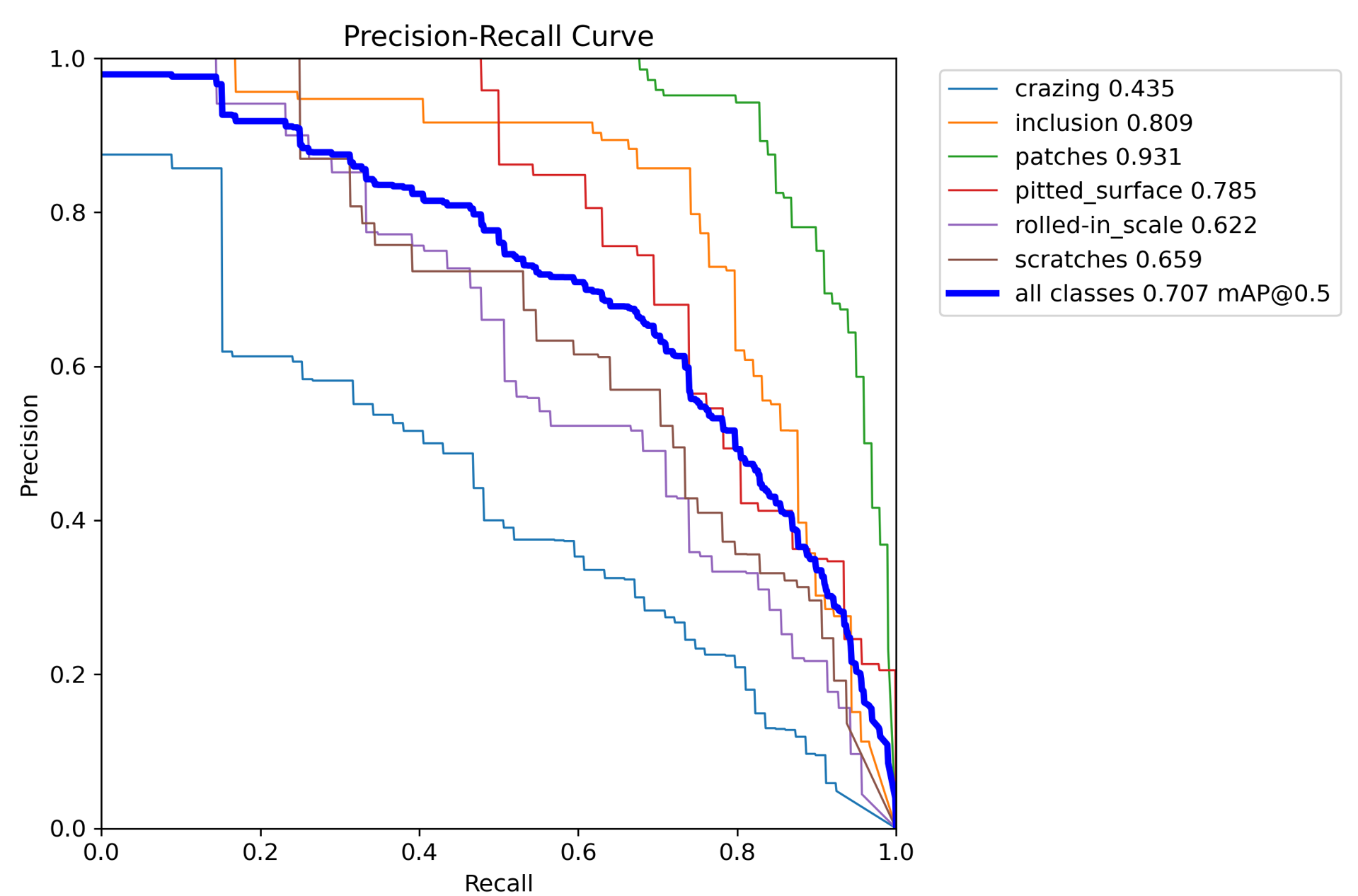

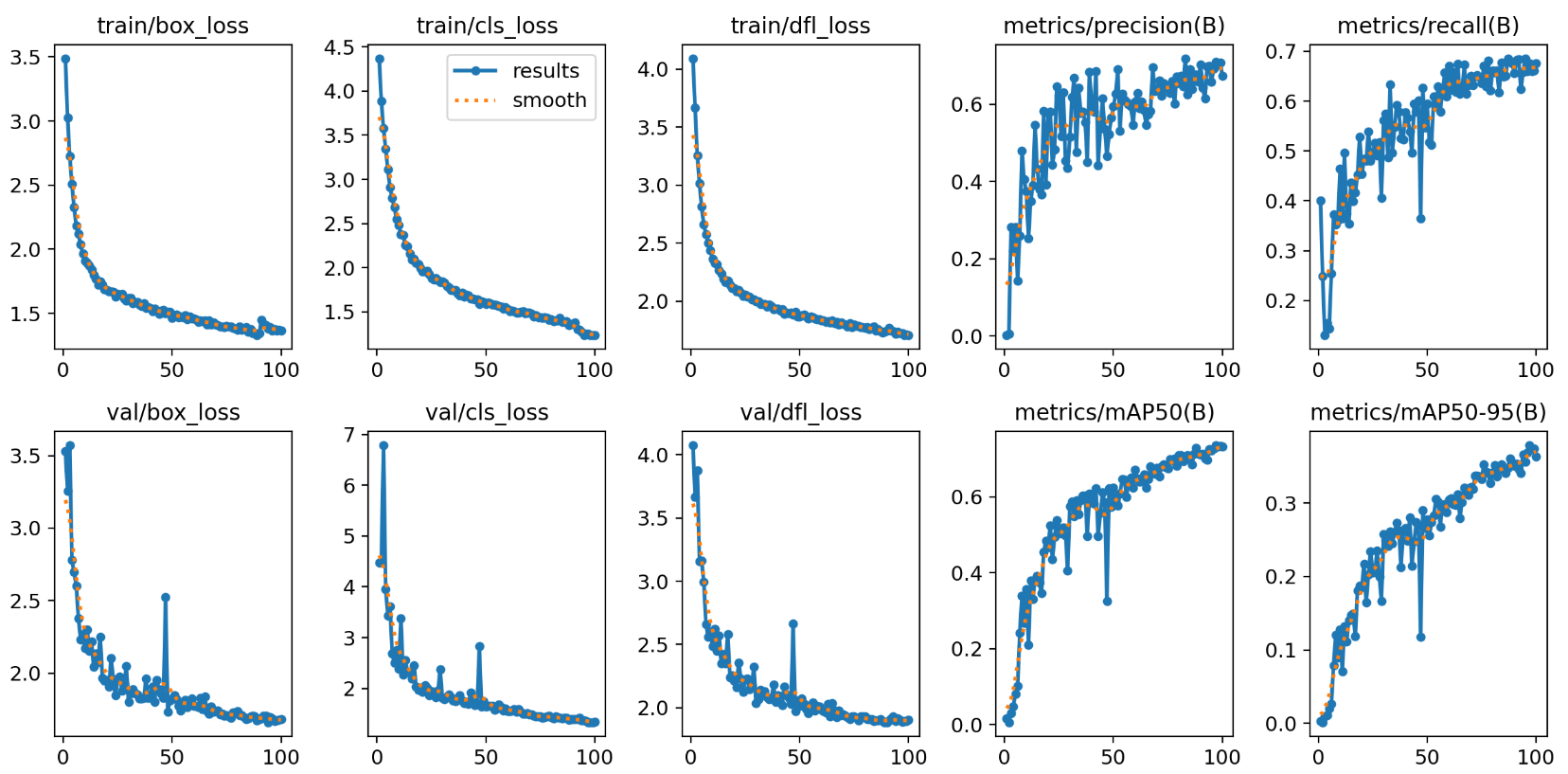

epoch=100, batch=-1 (AutoBatch), model=yolov8n
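With `batch=-1`, Ultralytics AutoBatch chooses the batch size. Roughly, it measures GPU memory at a few small batch sizes, fits a straight line of memory against batch size, and picks the batch predicted to use a target fraction of the GPU (0.60 in the YOLOv8-era code; treat that value as an assumption). The stdlib sketch below reproduces only that idea, using made-up memory numbers. `estimate_batch` is hypothetical and is not the Ultralytics function.

```python
from typing import Sequence, Tuple


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def estimate_batch(batch_sizes: Sequence[int], mem_used_gb: Sequence[float],
                   total_gb: float, fraction: float = 0.6) -> int:
    """Largest batch whose predicted memory stays under fraction * total_gb (hypothetical)."""
    slope, intercept = fit_line(batch_sizes, mem_used_gb)
    return max(1, int((total_gb * fraction - intercept) / slope))


# Illustrative profile: about 1 GB fixed cost plus 0.25 GB per image
sizes = [1, 2, 4, 8, 16]
mem = [1.25, 1.5, 2.0, 3.0, 5.0]
print(estimate_batch(sizes, mem, total_gb=16.0))
```

Because the batch is derived from the card's memory, runs on different GPUs (e.g. the 4060 Ti and 4090 runs later in these notes) may not use the same batch size.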

Results

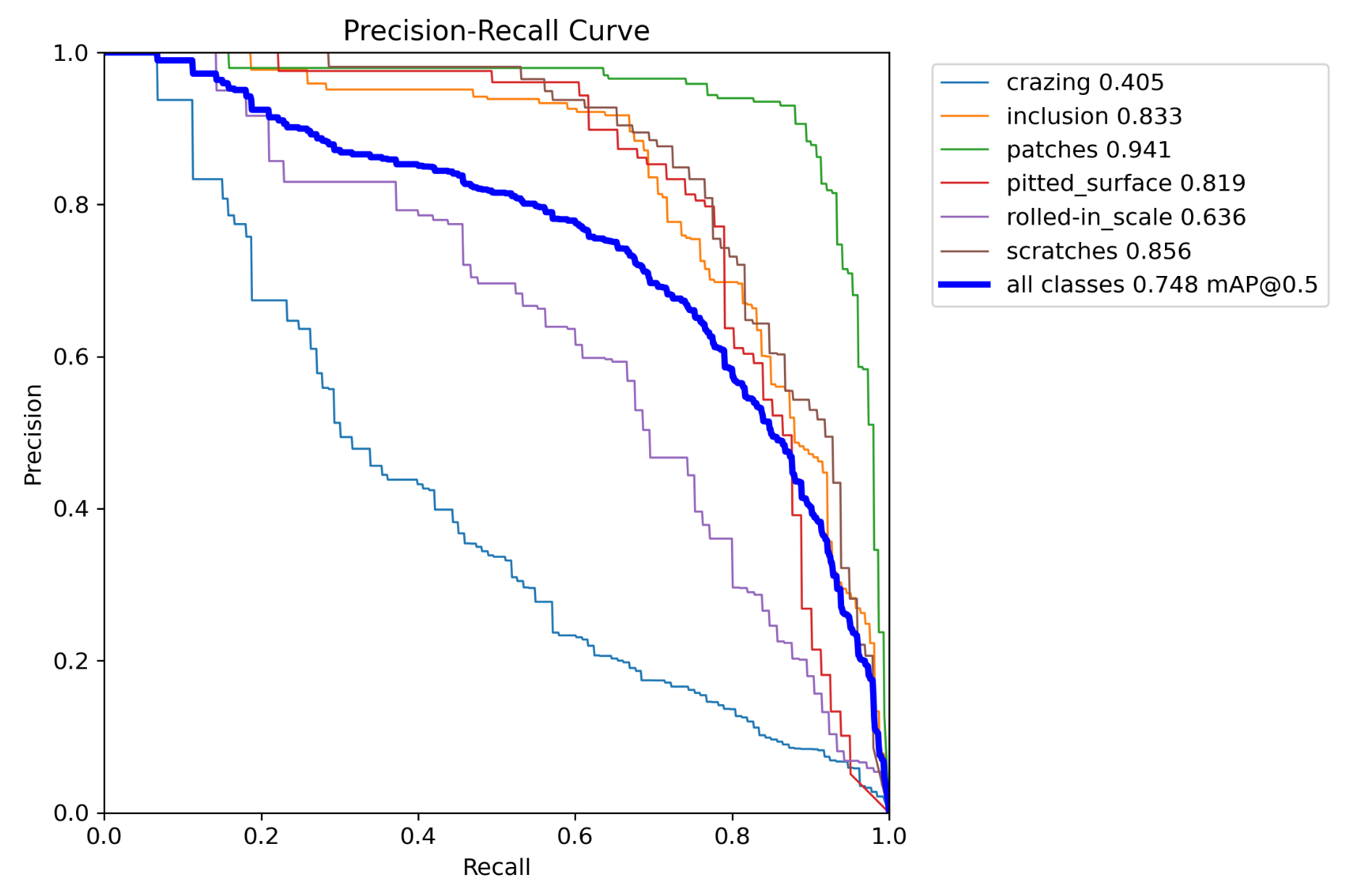

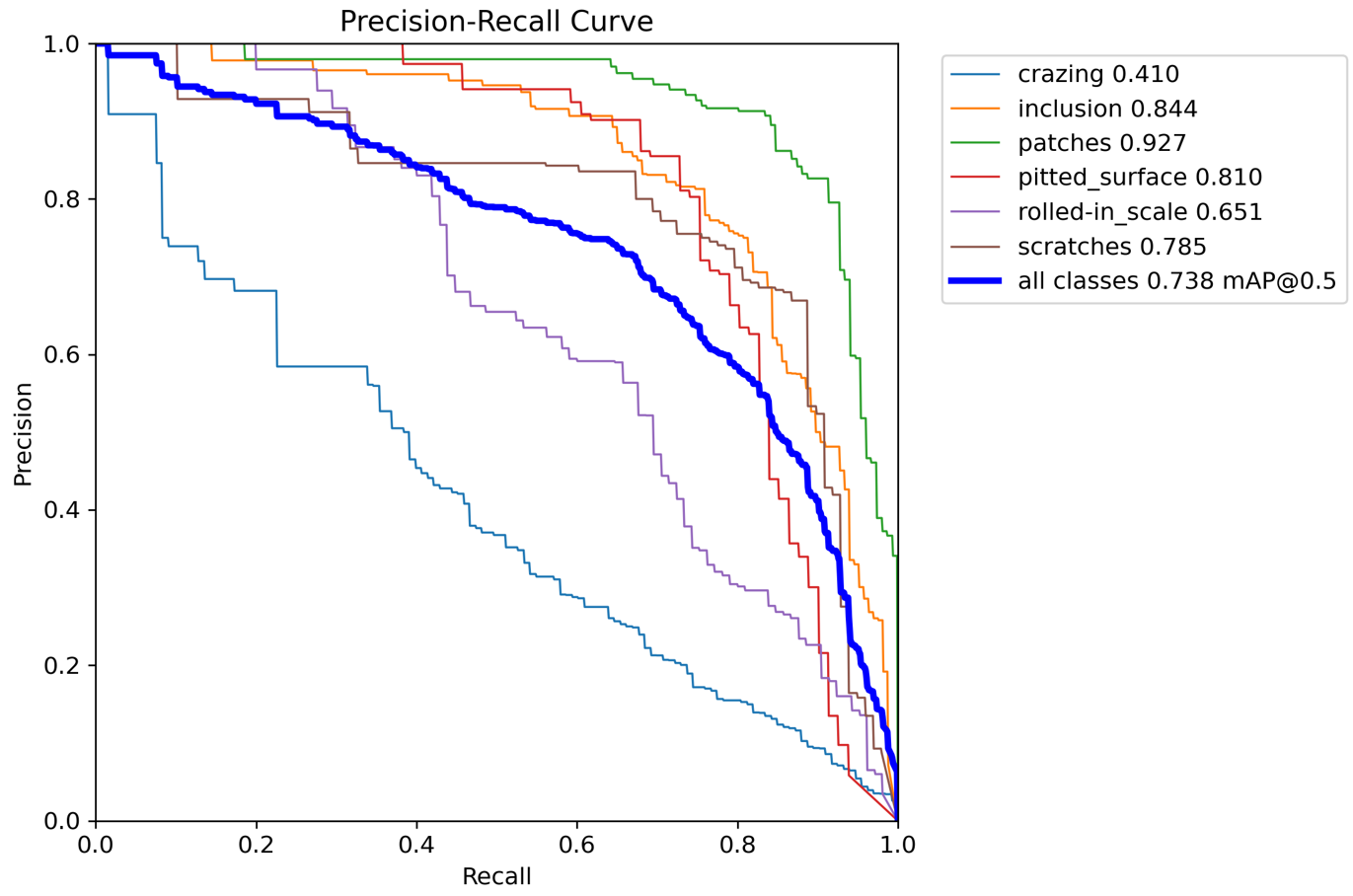

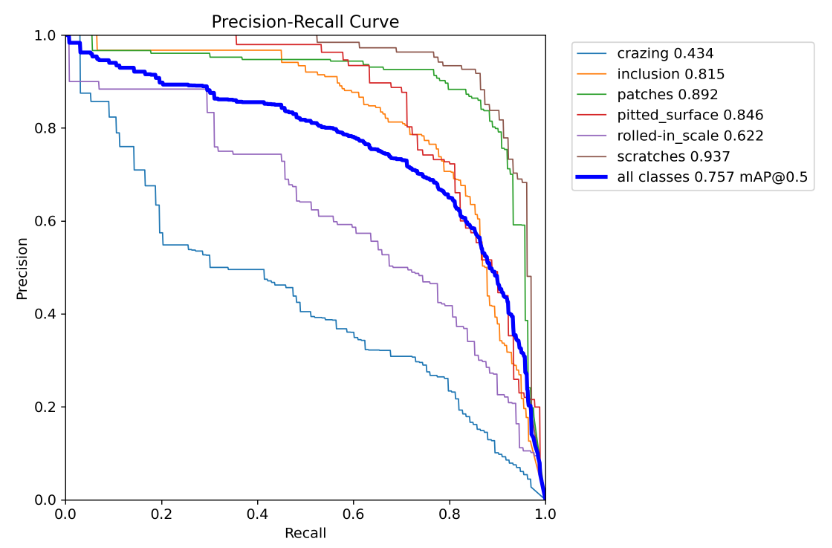

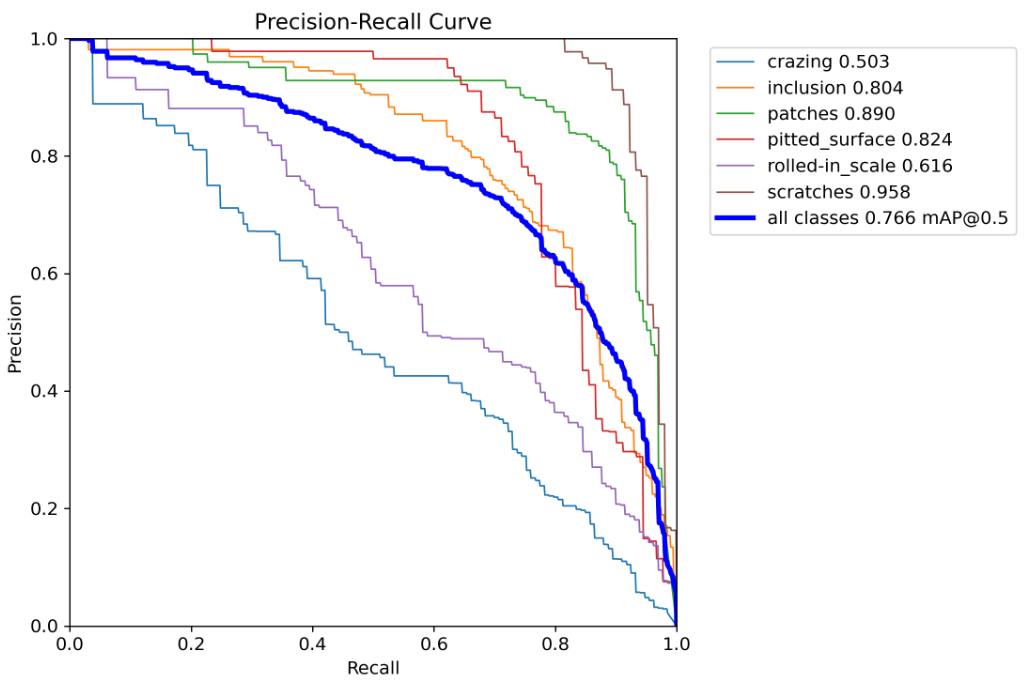

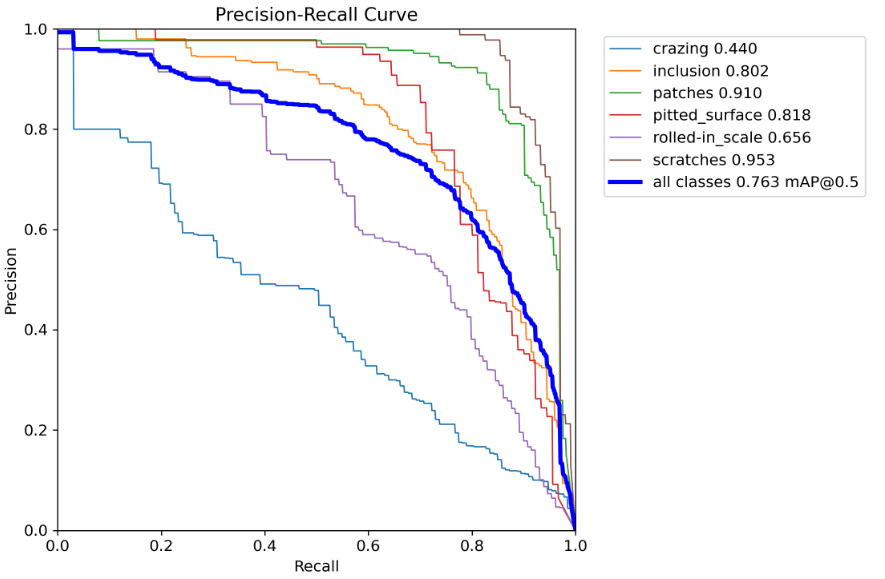

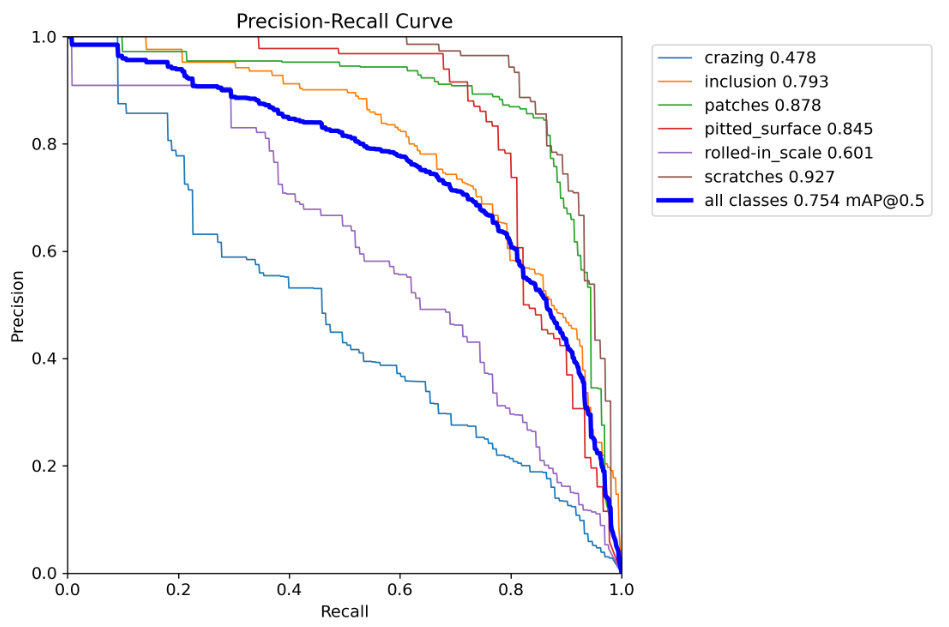

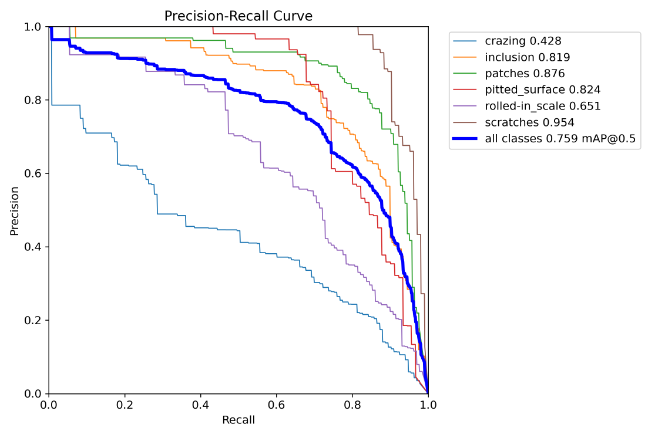

PR_recall

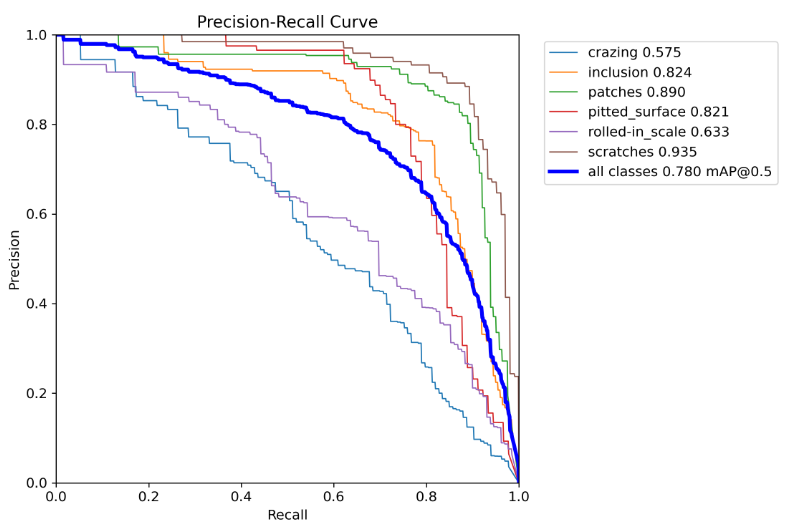

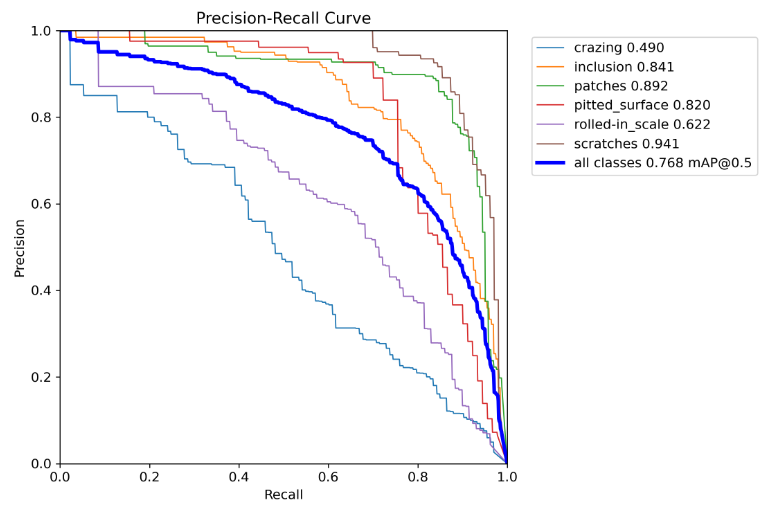

Settings 2

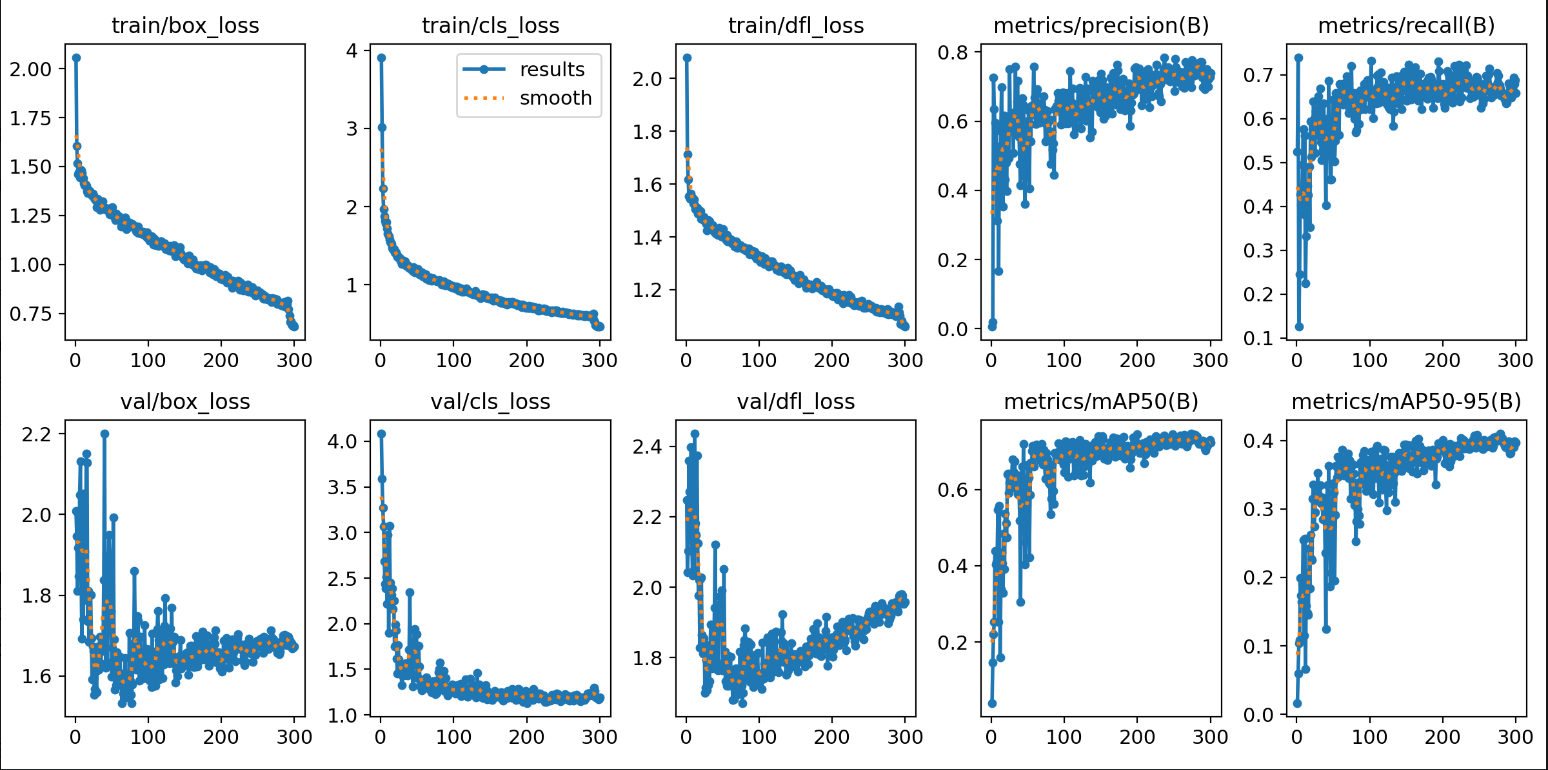

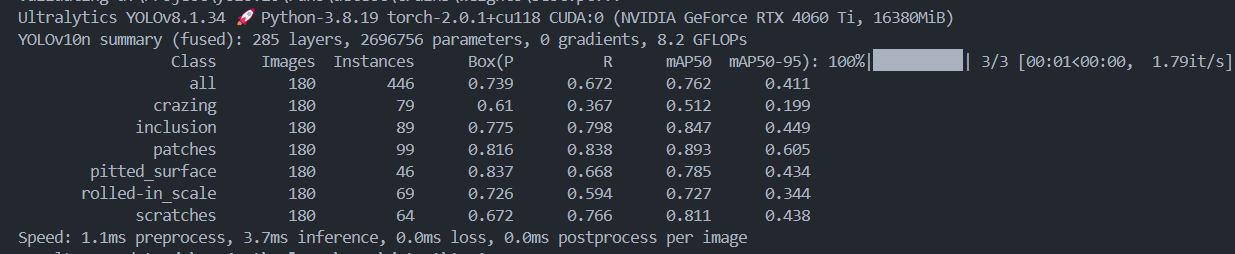

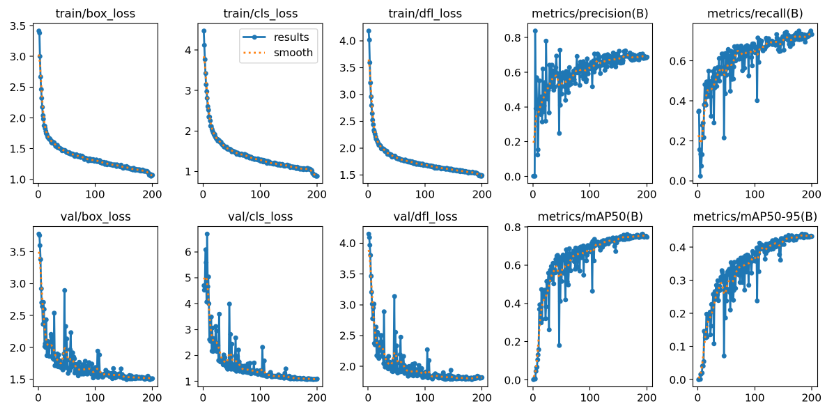

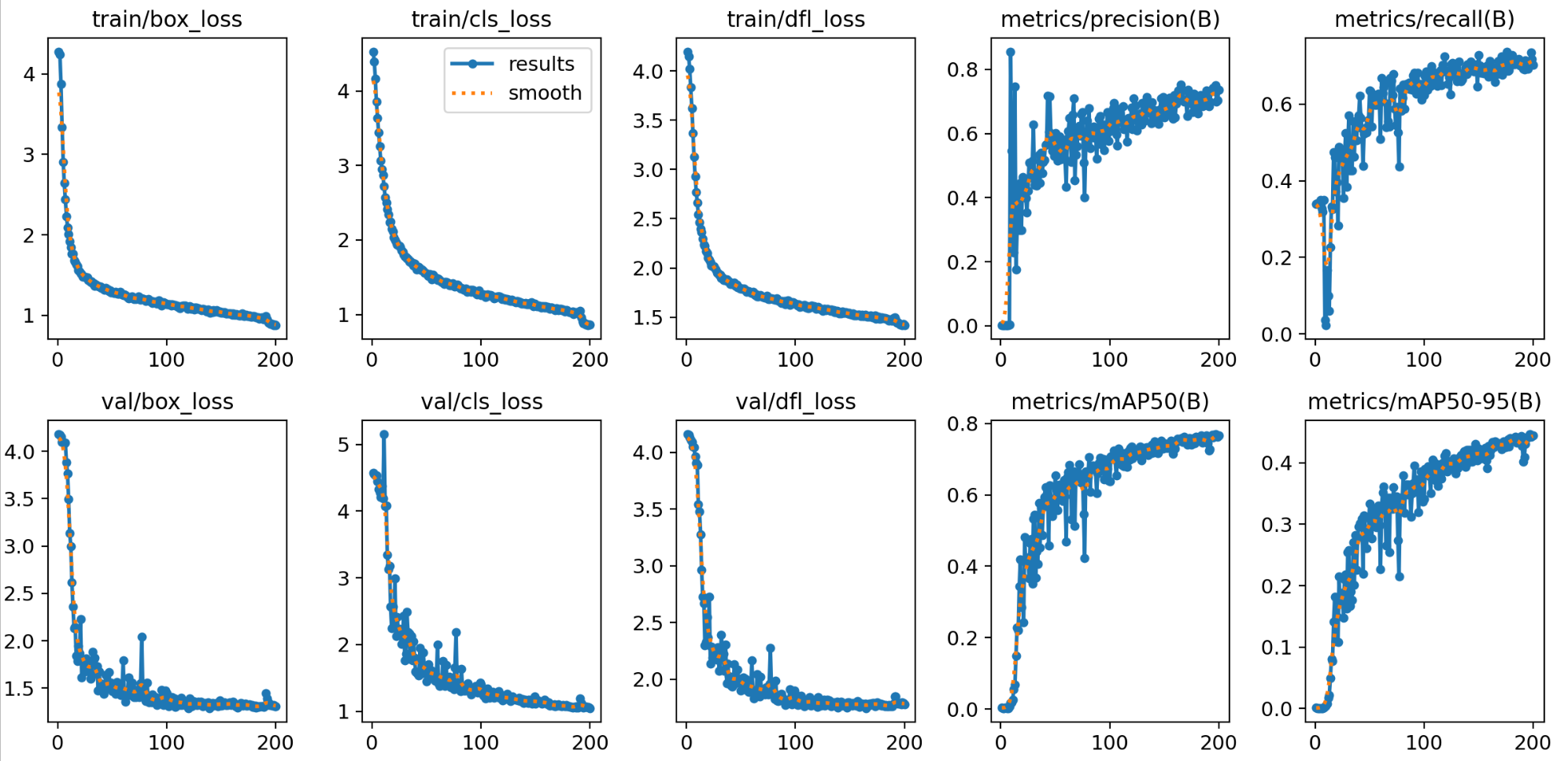

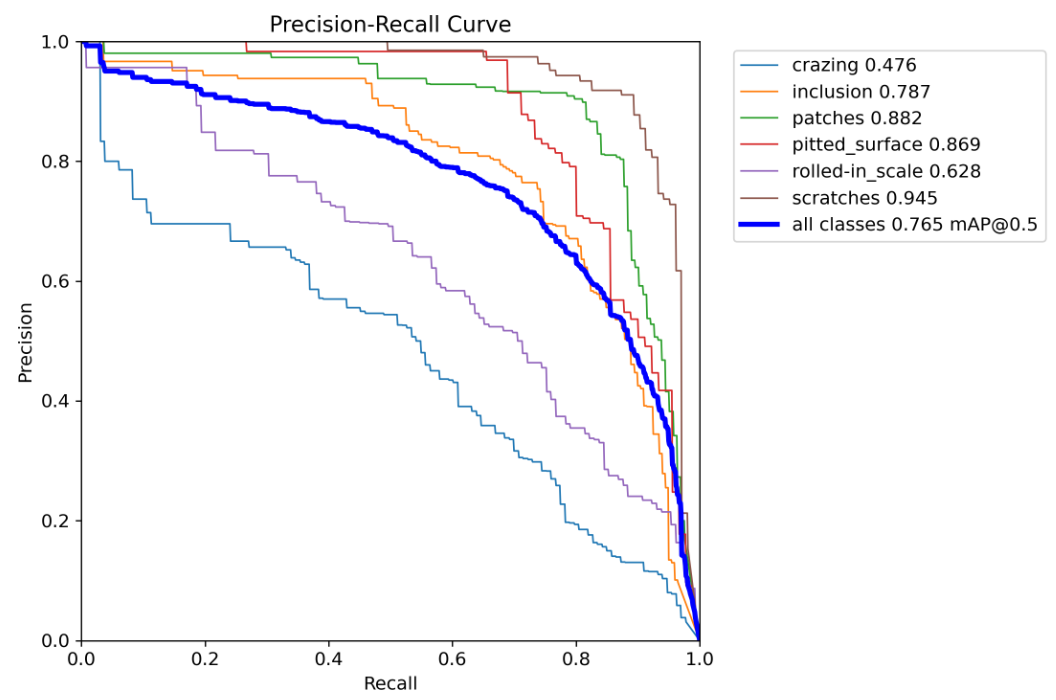

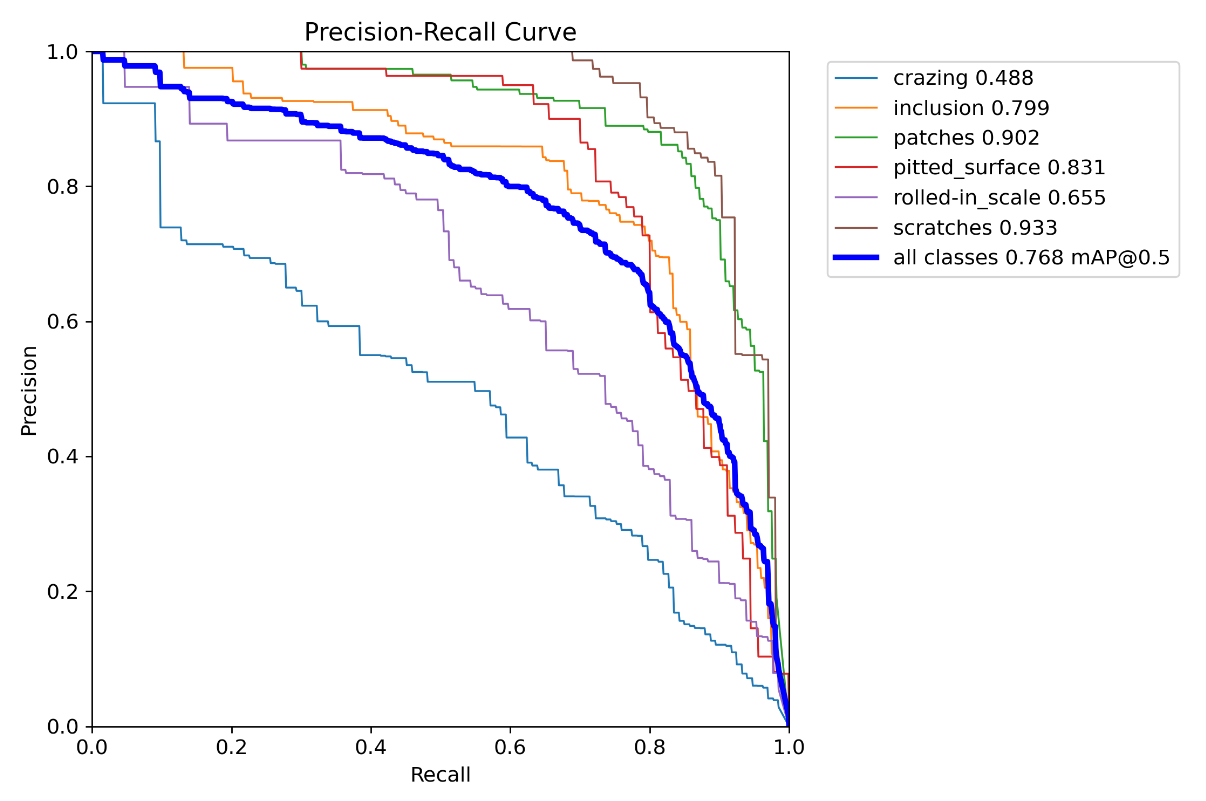

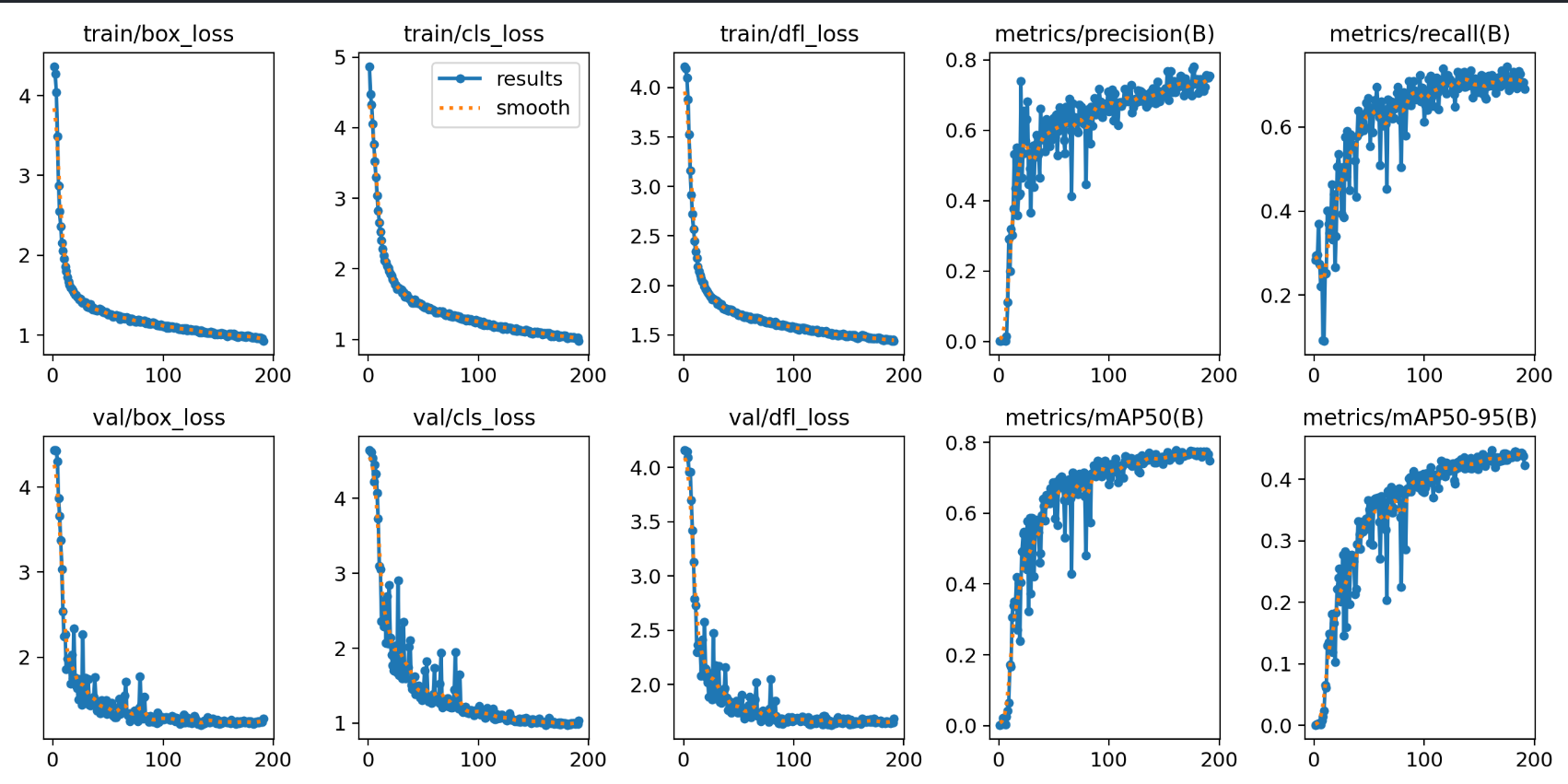

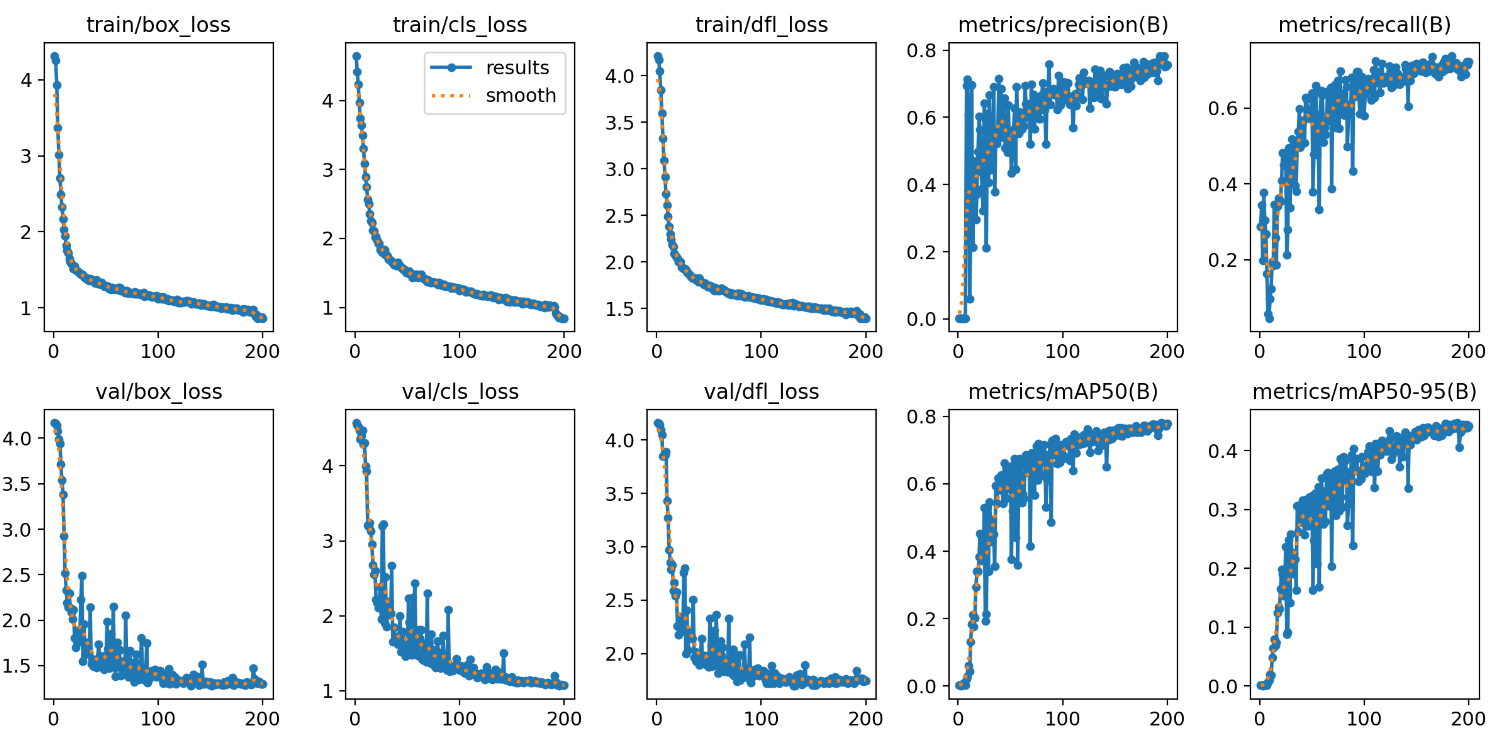

epoch=300, everything else unchanged

Results

1

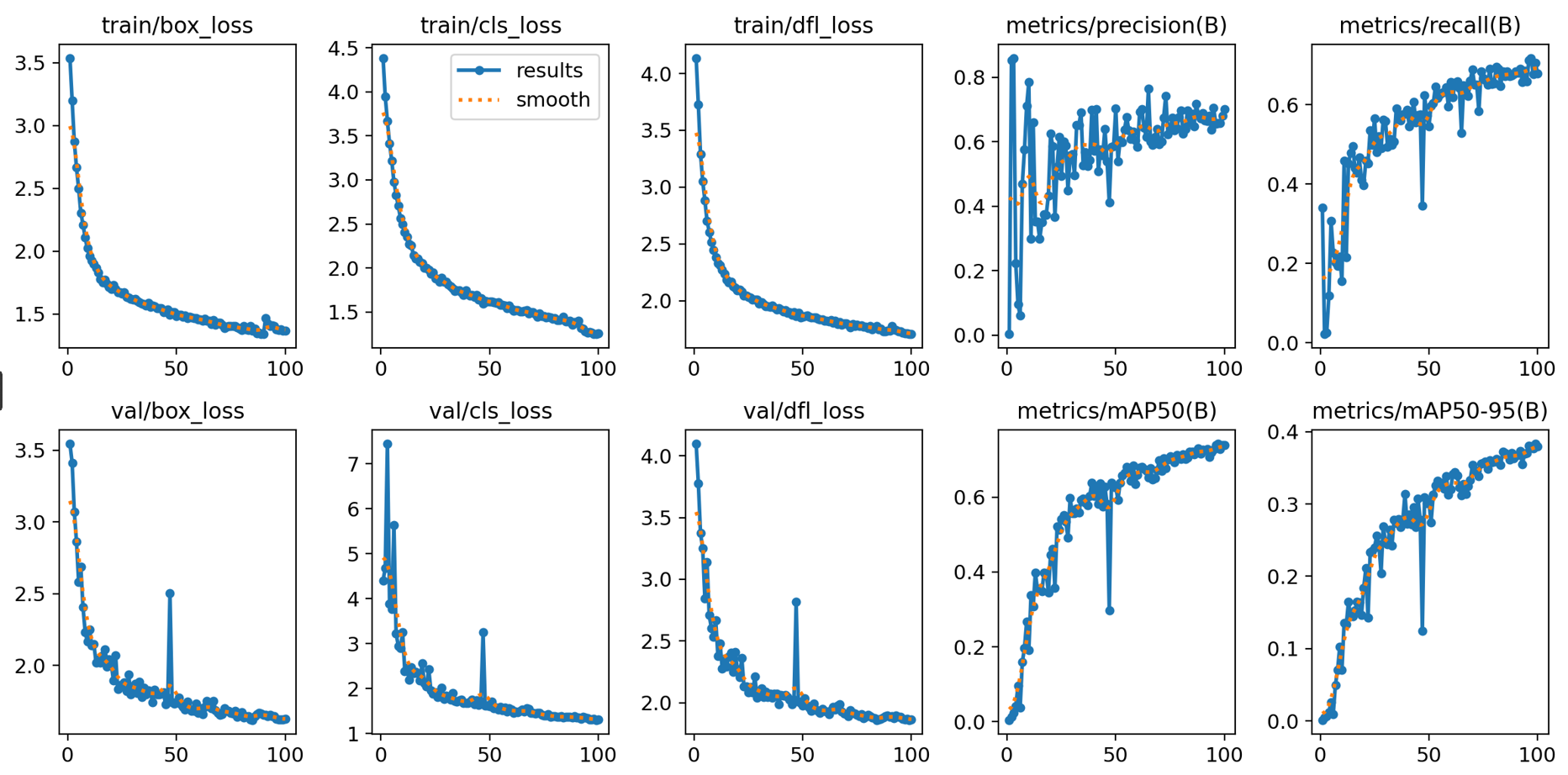

2metrics/precision(B) metrics/recall(B) metrics/mAP50(B) metrics/mAP50-95(B)

0.73849, 0.65913, 0.72279, 0.39697,

PR_recall

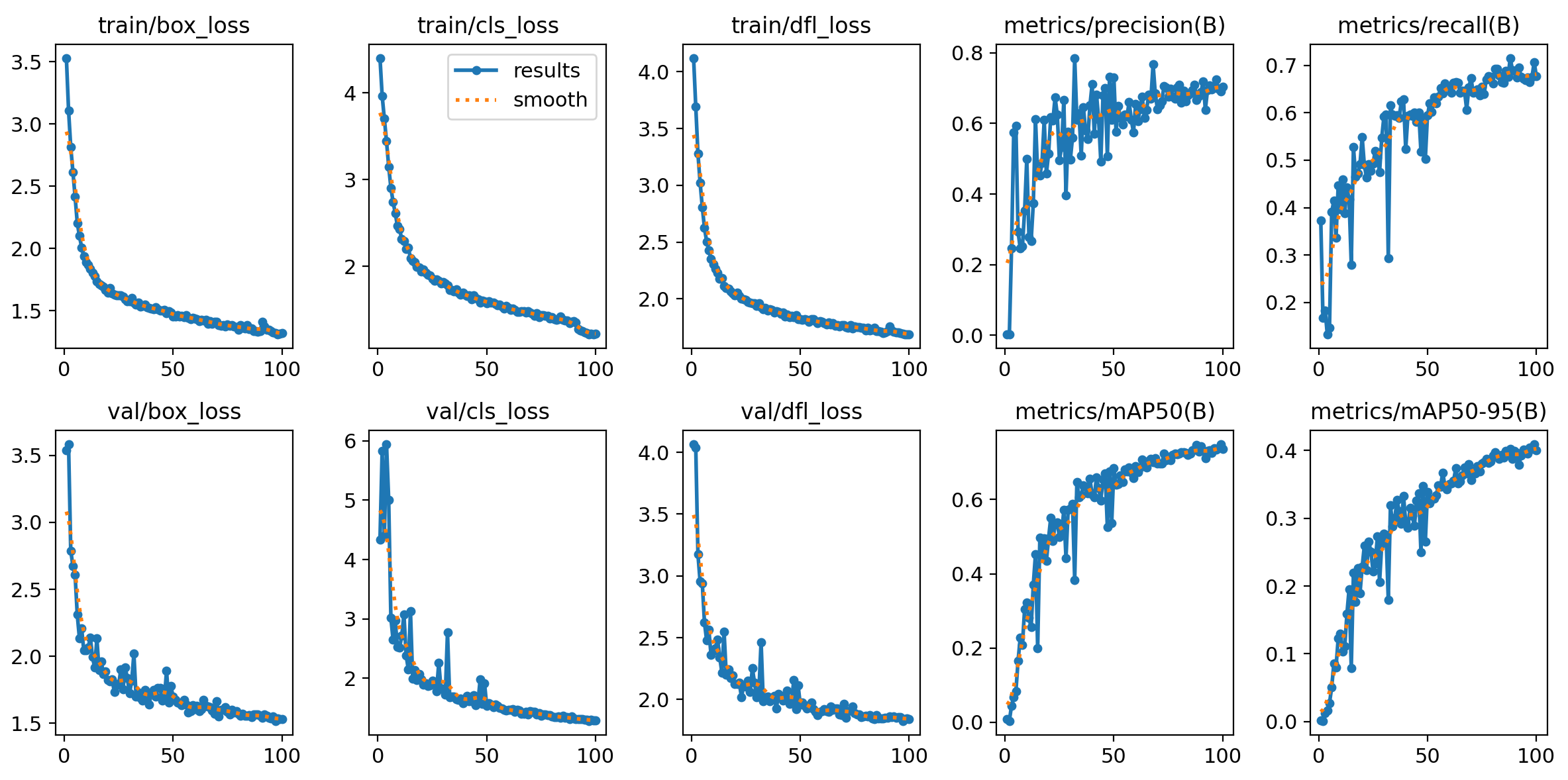

With more training epochs the mAP actually decreased, and the validation loss fluctuated considerably. A suitable epoch count is probably around 100-150.
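To locate that epoch range from the logs instead of by eye, one can read the per-epoch `results.csv` in the run directory and find where `metrics/mAP50(B)` peaks. Below is a minimal stdlib sketch. It assumes the CSV has `epoch` and `metrics/mAP50(B)` columns, which are the column names shown in the table above. Some versions pad header names with spaces, which is why the code calls `strip()`. The toy rows are illustrative, not results from these runs, and the file path in the comment is only an example.

```python
import csv
import io
from typing import Iterable, Optional, Tuple


def best_epoch(lines: Iterable[str], metric: str = "metrics/mAP50(B)") -> Tuple[int, float]:
    """Return (epoch, value) for the row where `metric` is highest."""
    best: Optional[Tuple[int, float]] = None
    for raw in csv.DictReader(lines):
        # Tolerate headers/values padded with spaces
        row = {k.strip(): (v or "").strip() for k, v in raw.items() if k is not None}
        epoch, value = int(float(row["epoch"])), float(row[metric])
        if best is None or value > best[1]:
            best = (epoch, value)
    if best is None:
        raise ValueError("results file has no data rows")
    return best


# Toy data shaped like a results.csv (made-up numbers)
sample = io.StringIO(
    "epoch,metrics/mAP50(B)\n"
    "100,0.70\n"
    "150,0.72\n"
    "300,0.69\n"
)
print(best_epoch(sample))  # (150, 0.72)
# Real run (example path): with open("runs/detect/train/results.csv") as f: print(best_epoch(f))
```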

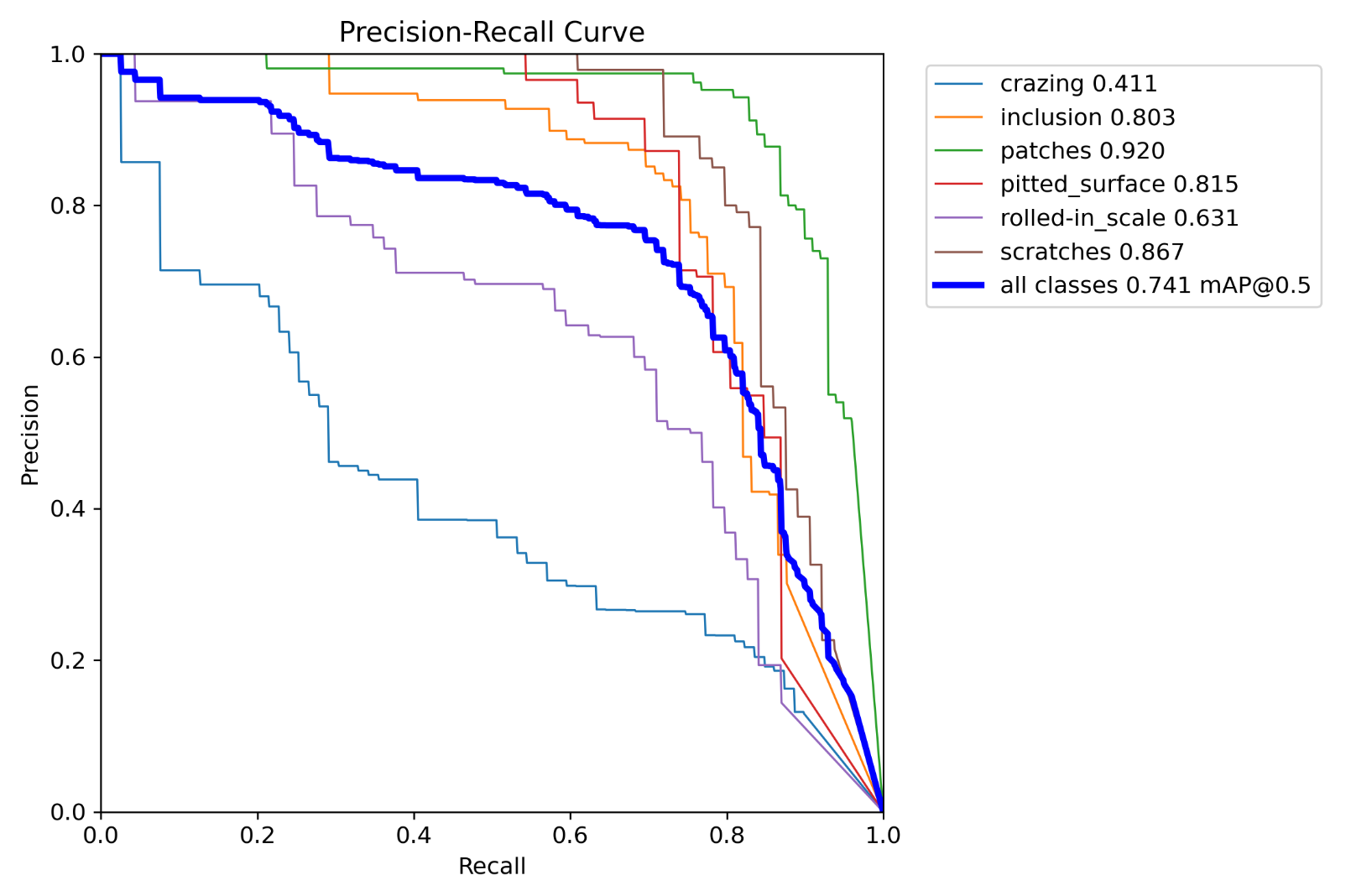

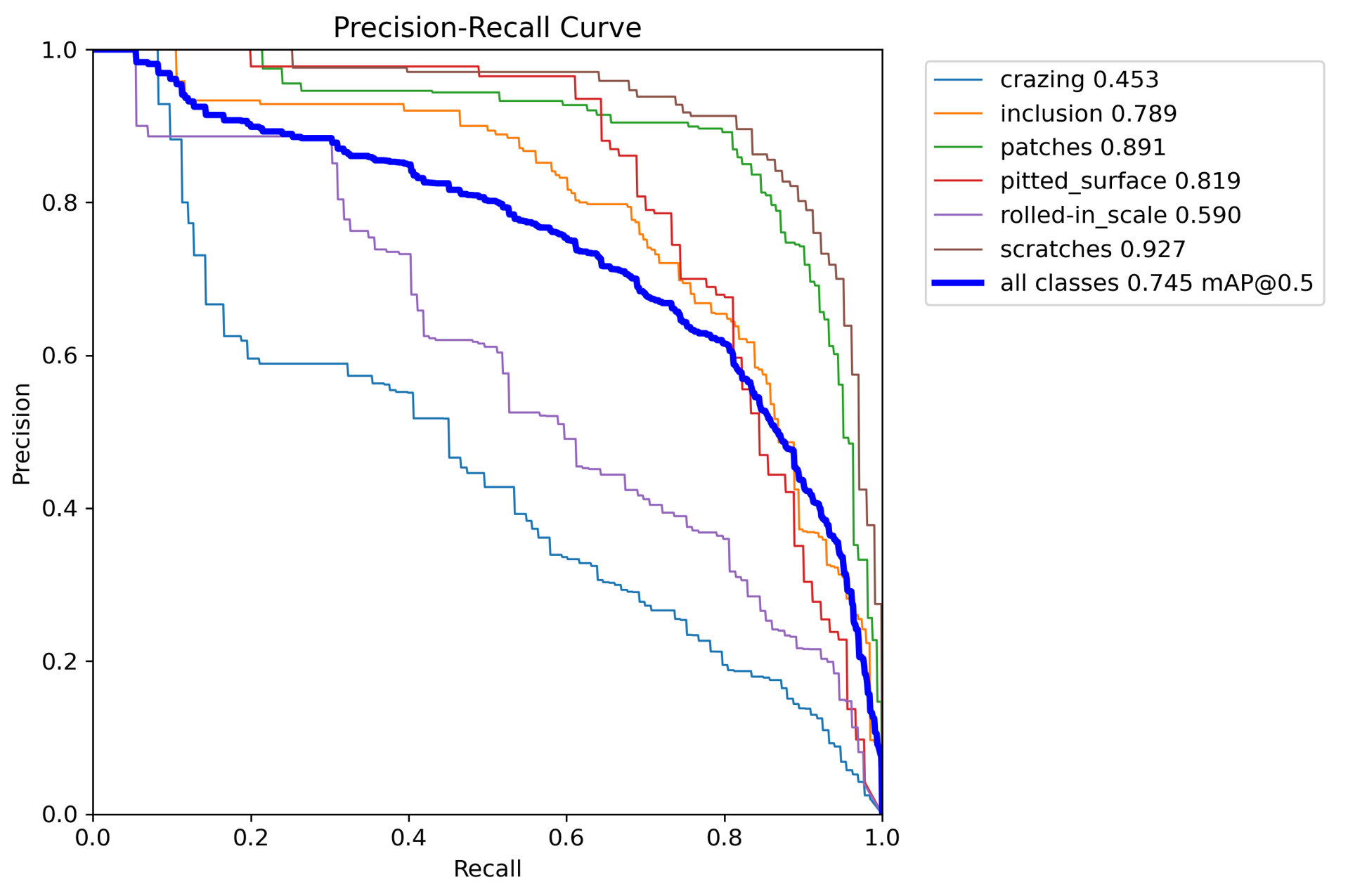

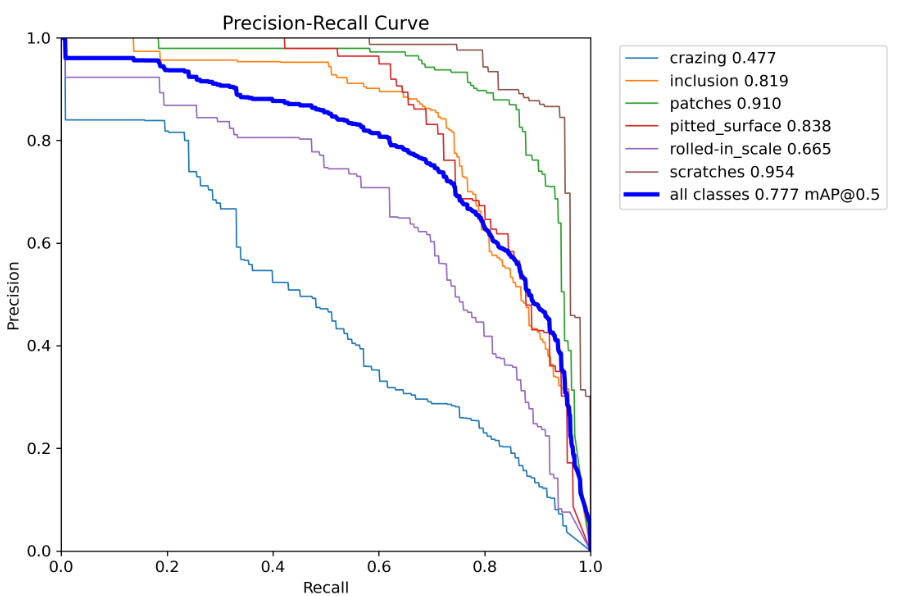

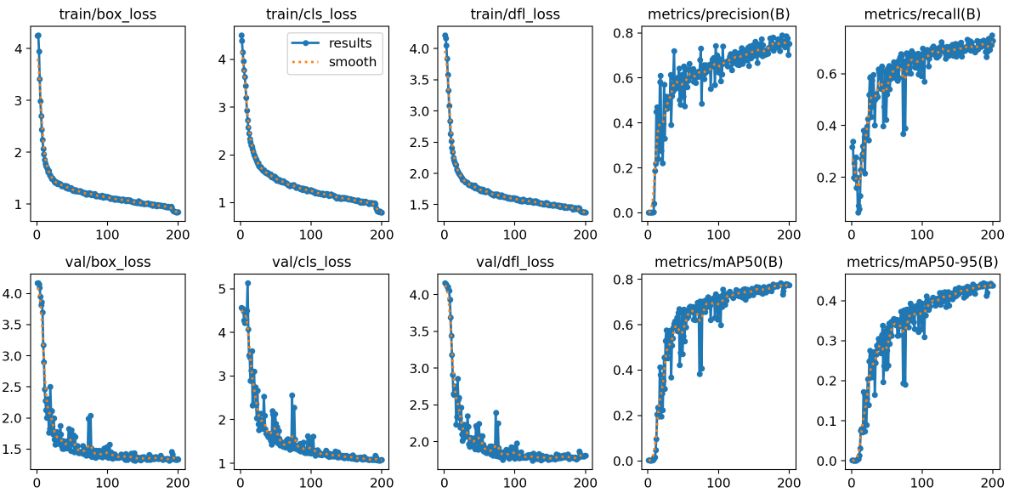

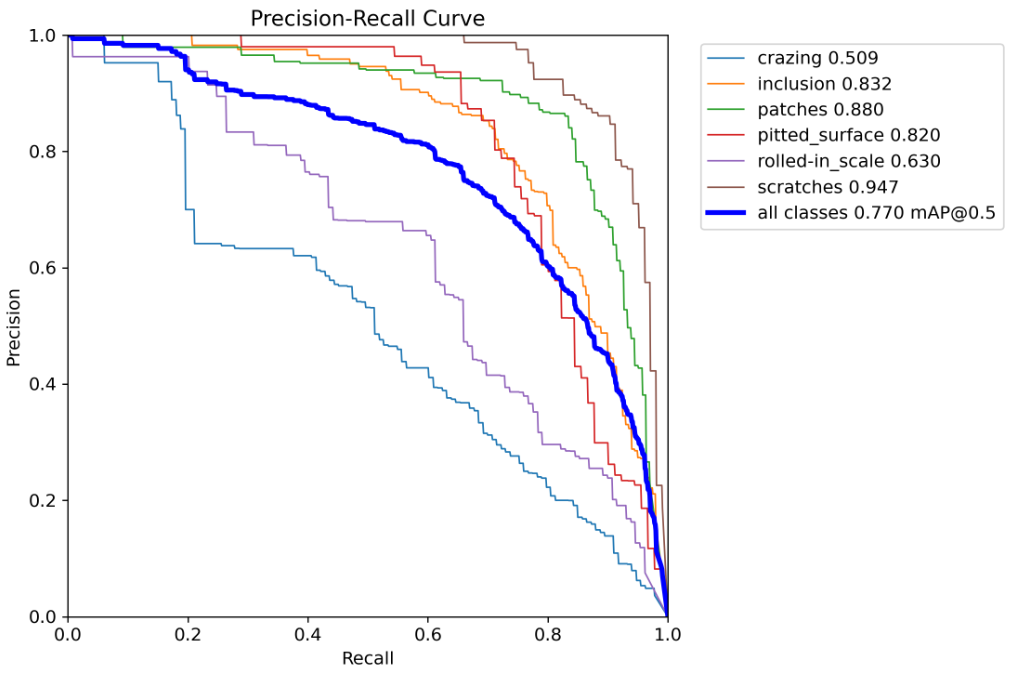

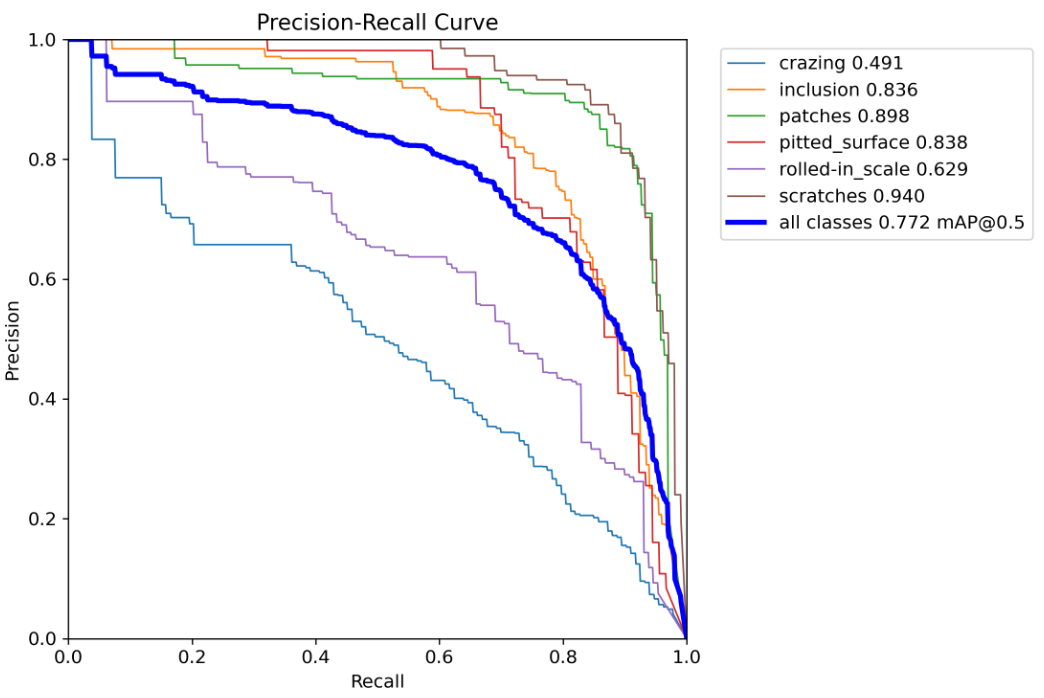

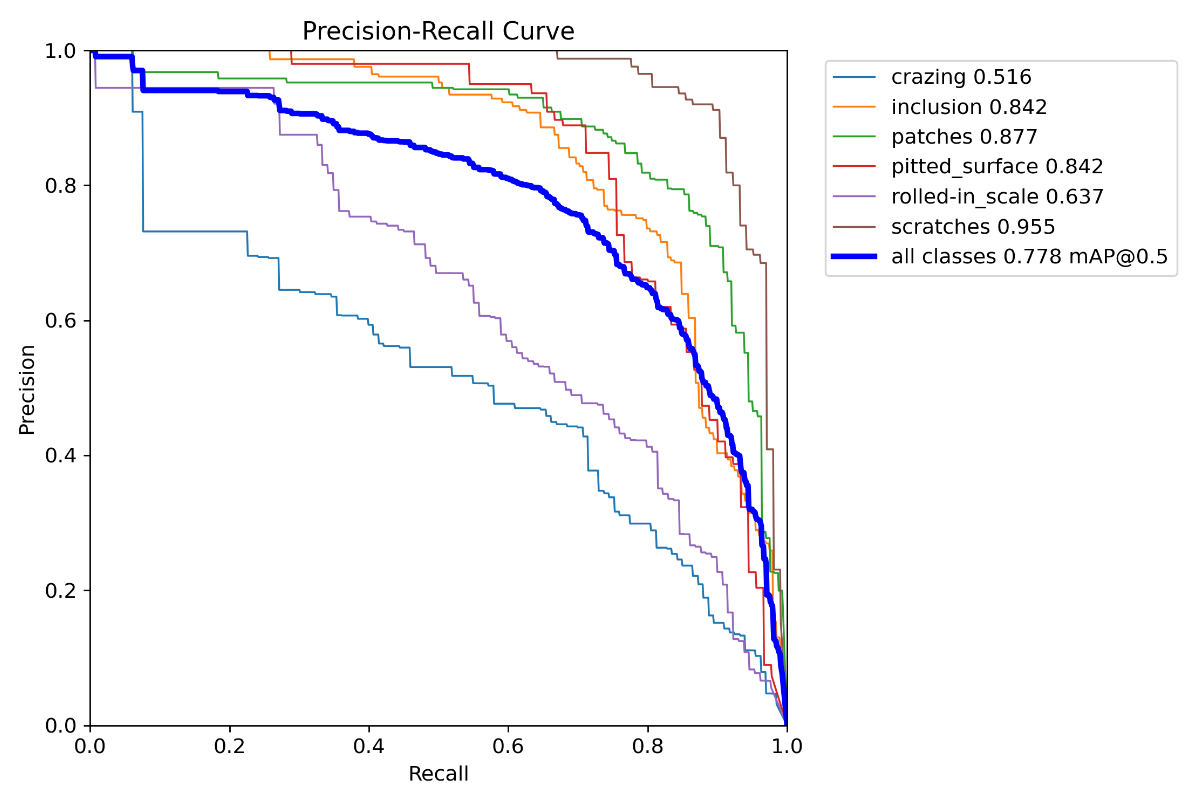

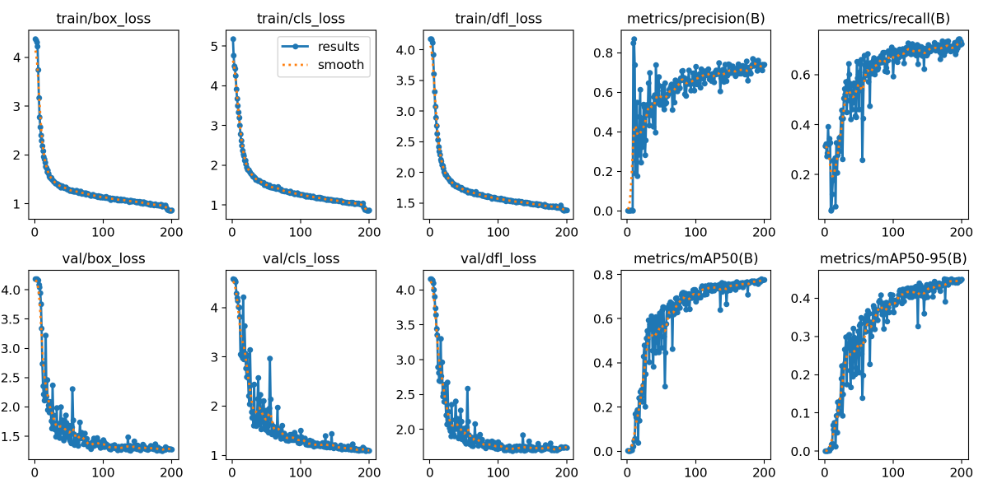

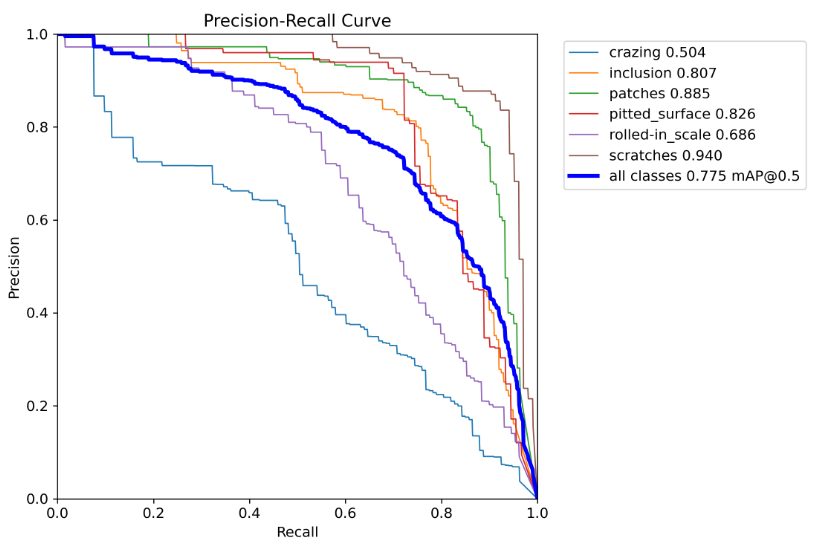

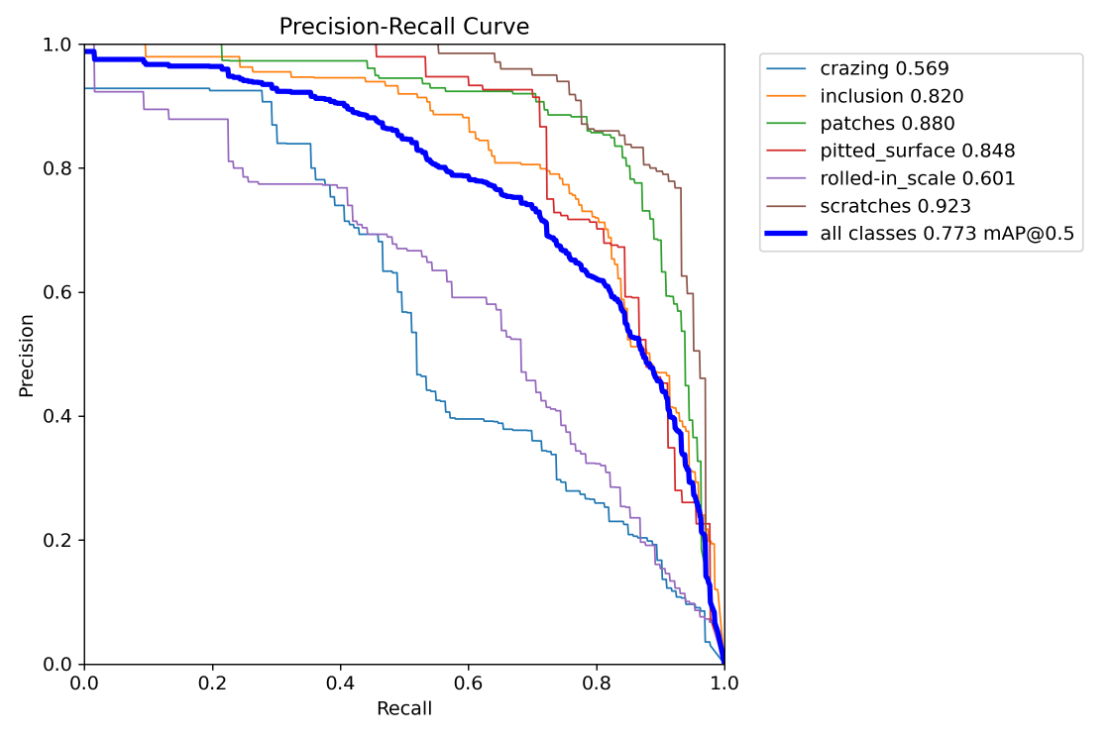

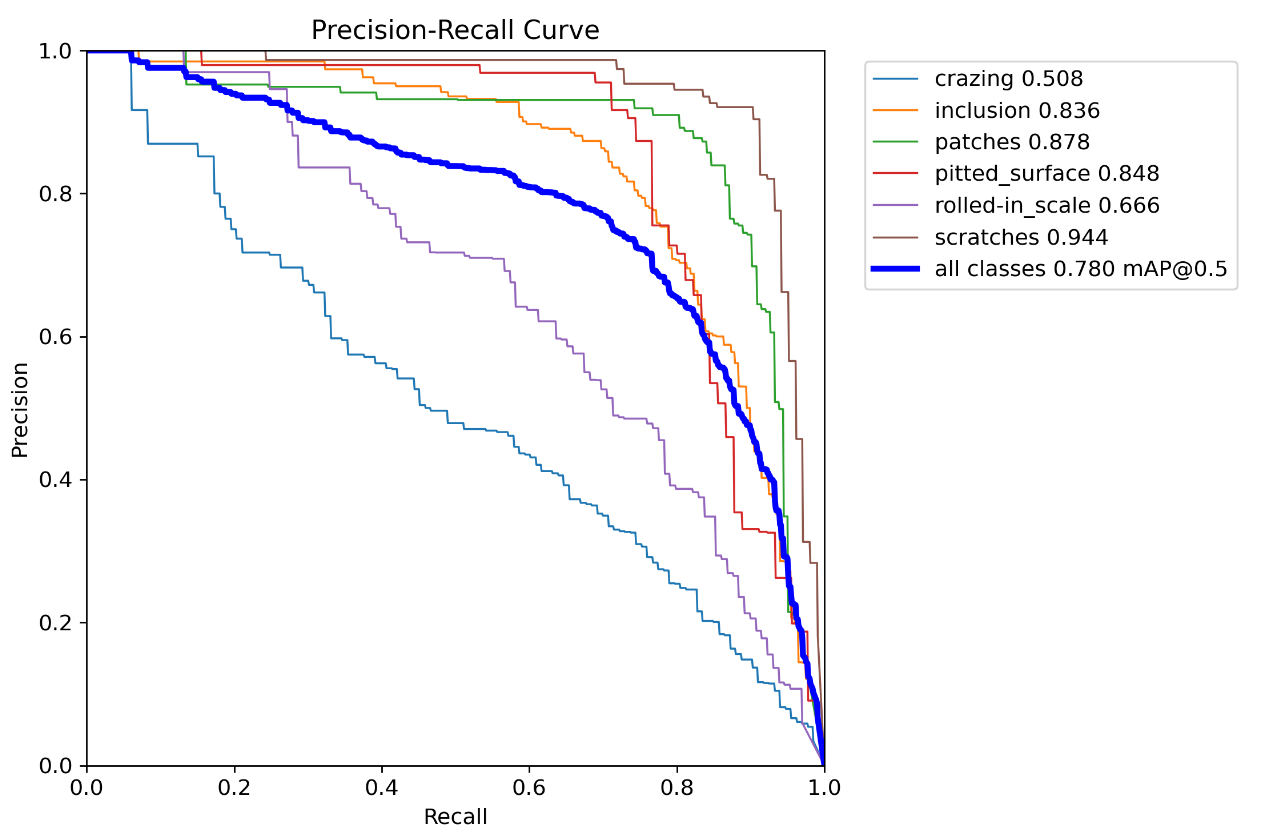

Adding SwinTransformer (76.4)

Settings

epoch=265, batch=-1, model=yolov8n

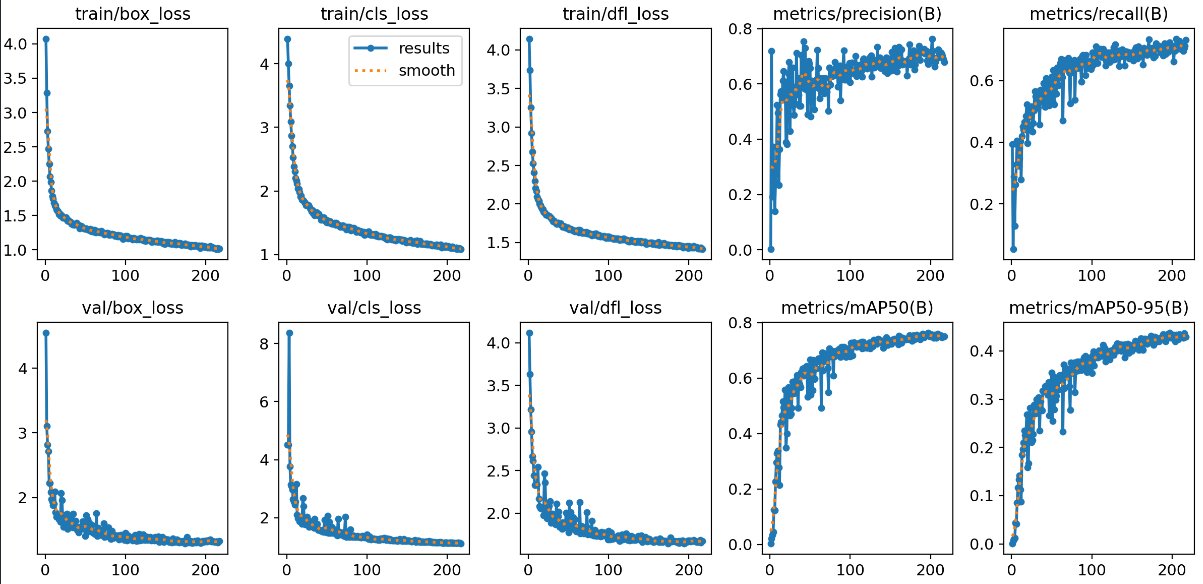

Results

| metrics/precision(B) | metrics/recall(B) | metrics/mAP50(B) | metrics/mAP50-95(B) |
|---|---|---|---|
| 0.68036 | 0.72664 | 0.75465 | 0.39744 |
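Compared with the 300-epoch V8 run earlier (P 0.73849, R 0.65913), the Swin run trades precision for recall (P 0.68036, R 0.72664). One standard way to put both on a single scale is F1, the harmonic mean 2PR/(P+R). F1 is not one of the metrics these notes report; the snippet only applies that formula to the logged values.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Values copied from the two result tables in these notes
v8_300ep = f1_score(0.73849, 0.65913)
swin = f1_score(0.68036, 0.72664)
print(f"V8, 300 epochs:  F1 = {v8_300ep:.4f}")
print(f"SwinTransformer: F1 = {swin:.4f}")
```

By this measure the two runs are close (about 0.697 vs 0.703), so most of the gap in mAP50 does not come from a better precision/recall balance at a single threshold.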

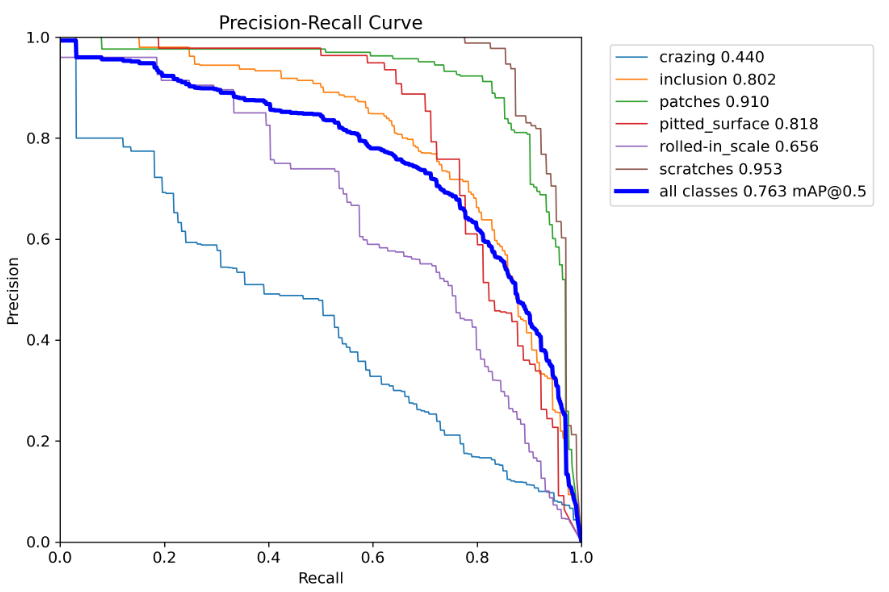

PR_recall

Confusion Matrix Normalized

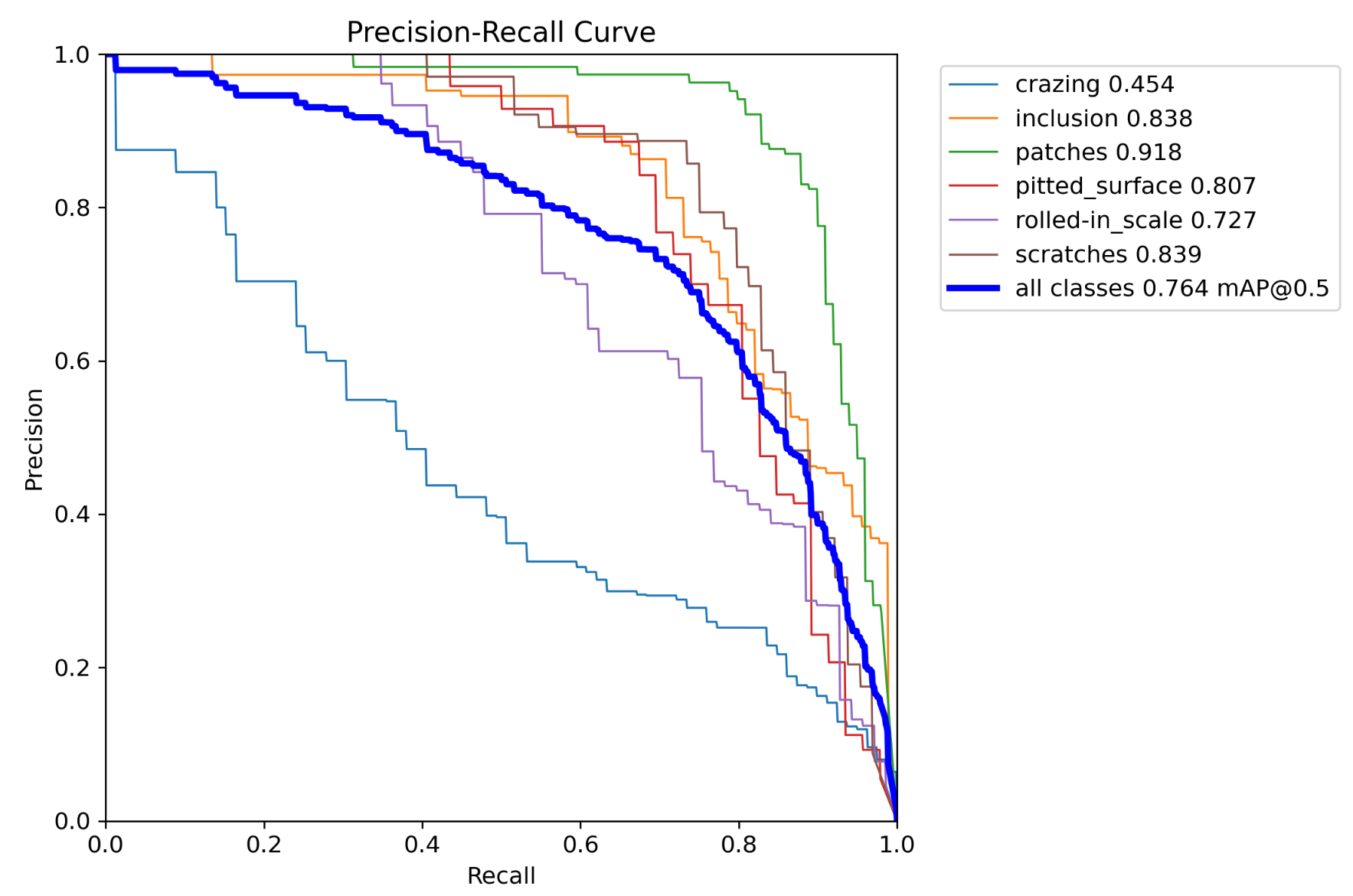

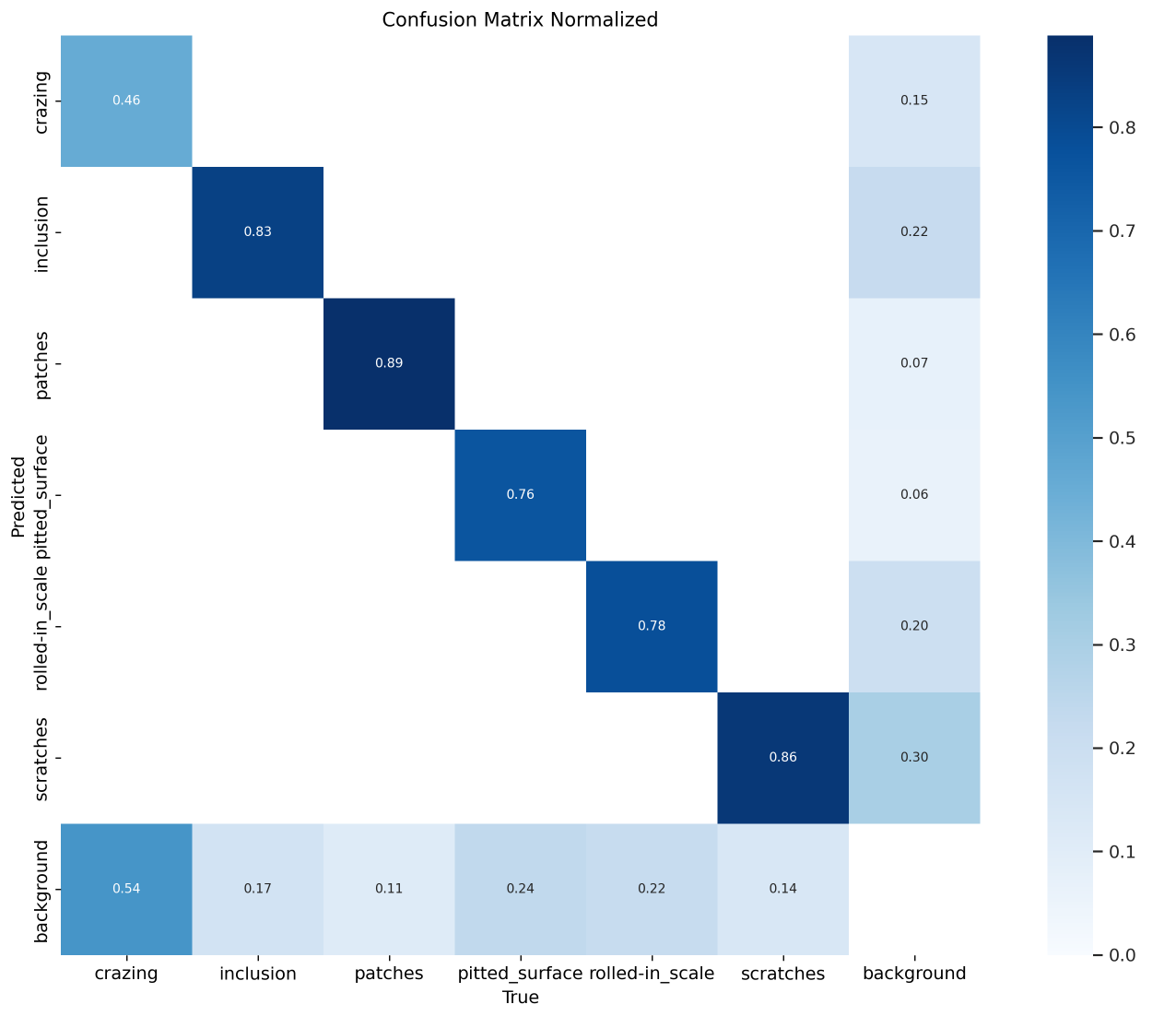

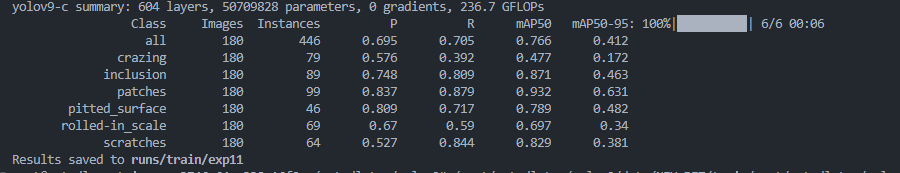

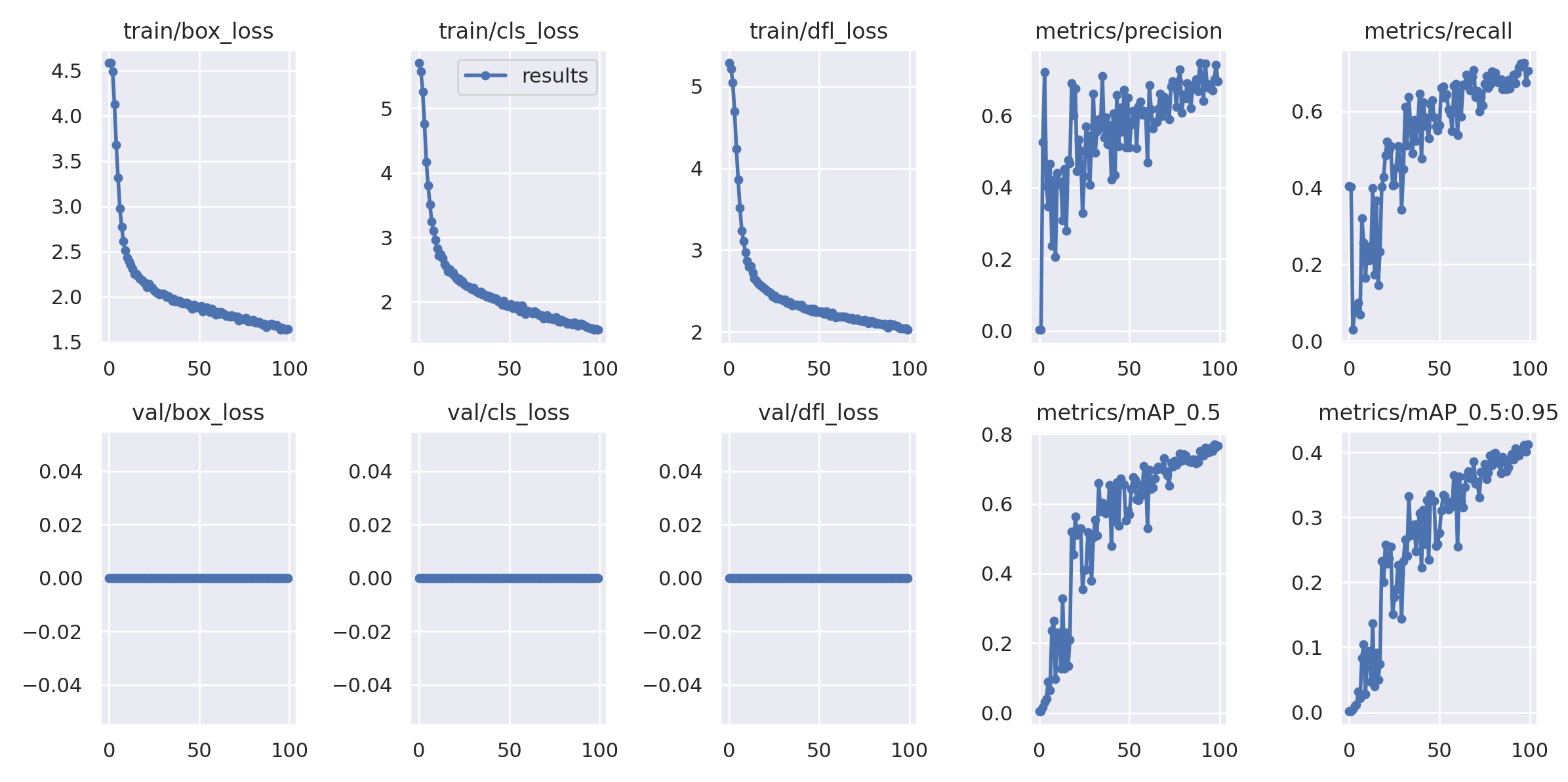

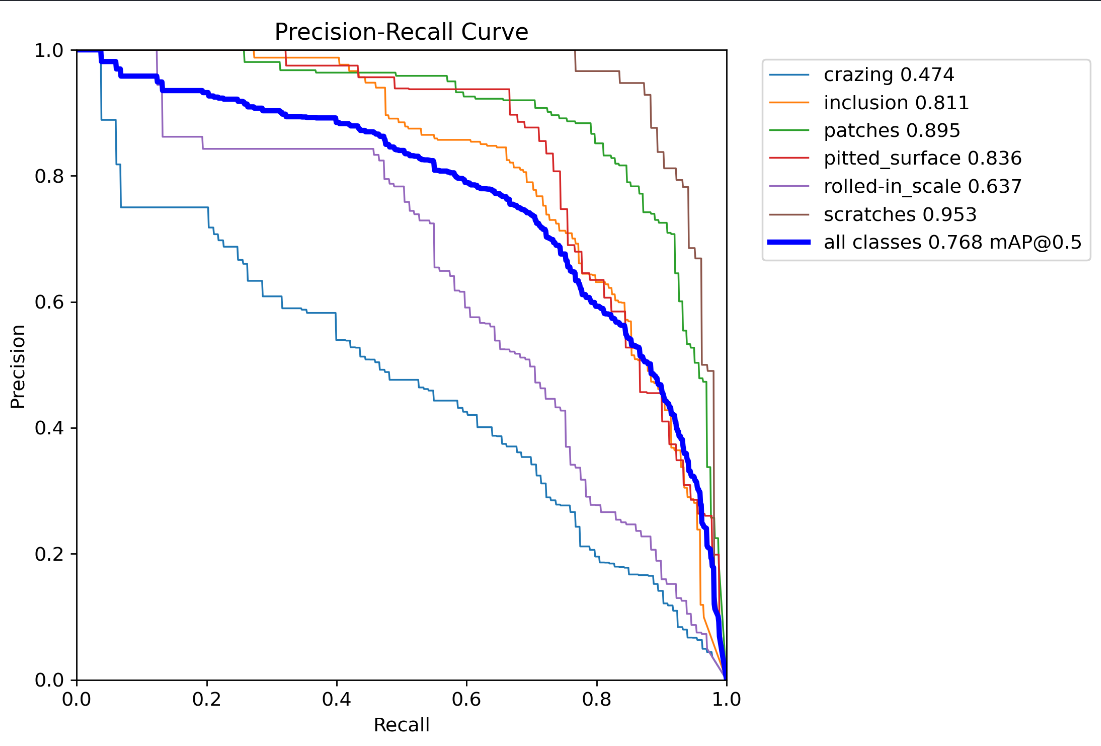
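For reading the normalized confusion matrix: as far as I know, Ultralytics stores the matrix as [predicted class, true class] (plus a background row and column) and divides each column by its sum, so the diagonal reads as per-class recall. The sketch below shows that column normalization on a nested list, assuming that convention. The counts are toy numbers.

```python
from typing import List

Matrix = List[List[float]]


def normalize_columns(matrix: Matrix, eps: float = 1e-9) -> Matrix:
    """Divide every entry by its column sum (rows = predicted, columns = true class)."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    col_sums = [sum(matrix[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [[matrix[r][c] / (col_sums[c] + eps) for c in range(n_cols)] for r in range(n_rows)]


# Toy 2-class example: columns are ground truth, rows are predictions
counts = [
    [8, 1],
    [2, 9],
]
norm = normalize_columns(counts)
per_class_recall = [norm[i][i] for i in range(len(norm))]
print([round(x, 2) for x in per_class_recall])  # [0.8, 0.9]
```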

V9 (why is it the same as v8?)

Settings

epoch=100, batch=-1, model=yolov9-c

Results

For some reason all the losses are 0?

PR_recall

The mAP is exactly the same as v8? The v9 model is larger than v8, though, and takes longer to train.

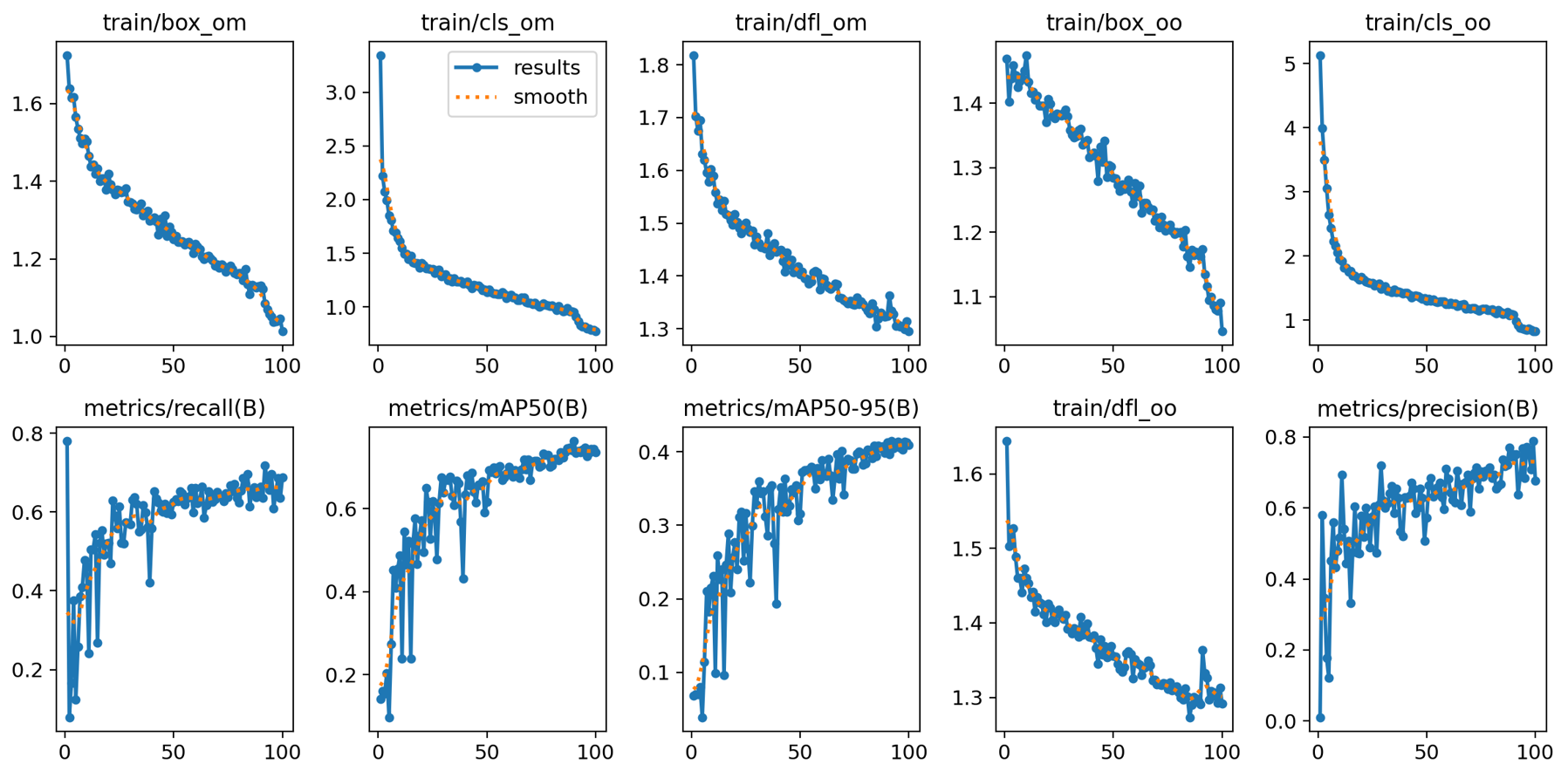

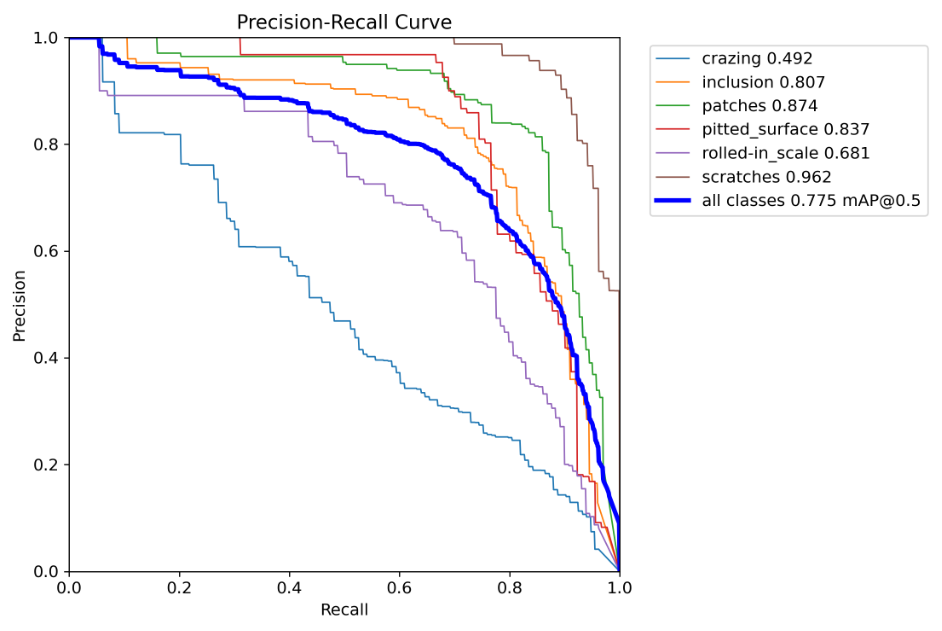

V10 (hardly any change from V8/V9)

Settings

epoch=100, batch=-1, model=yolov10n

Results

PR_Recall

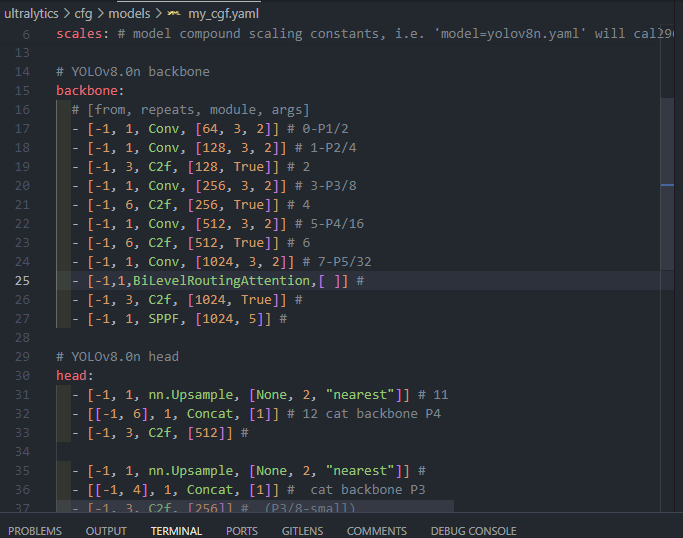

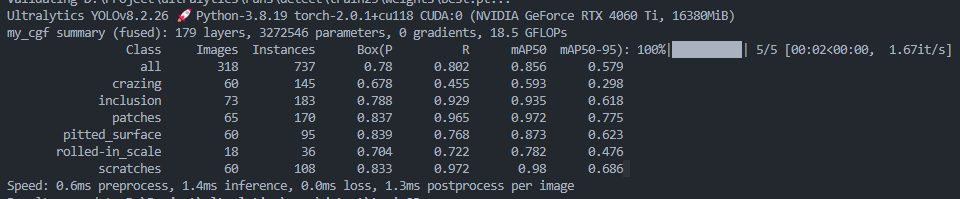

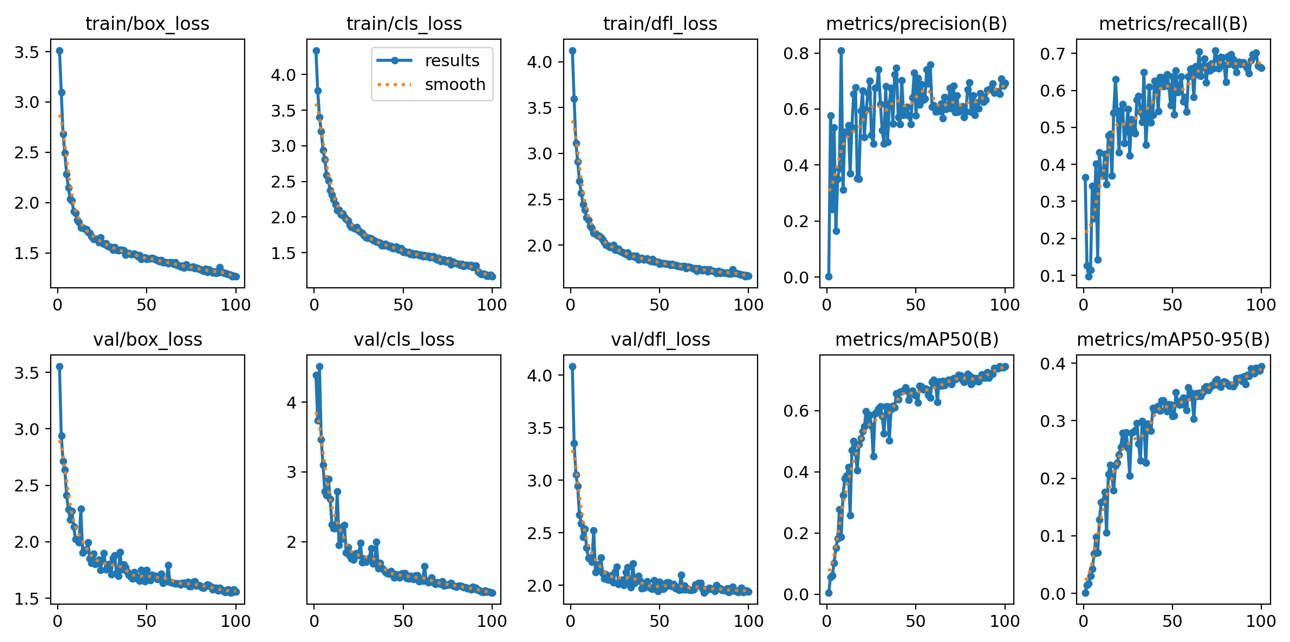

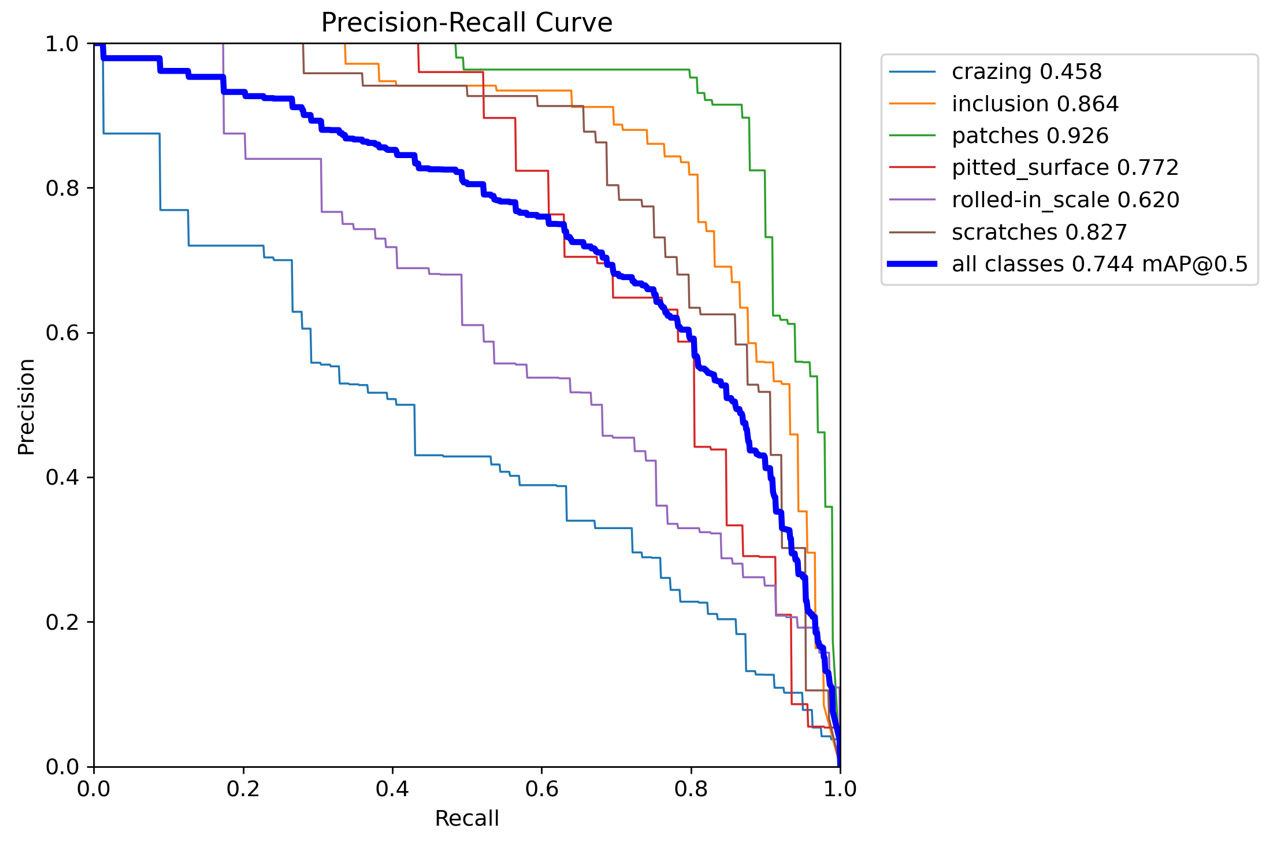

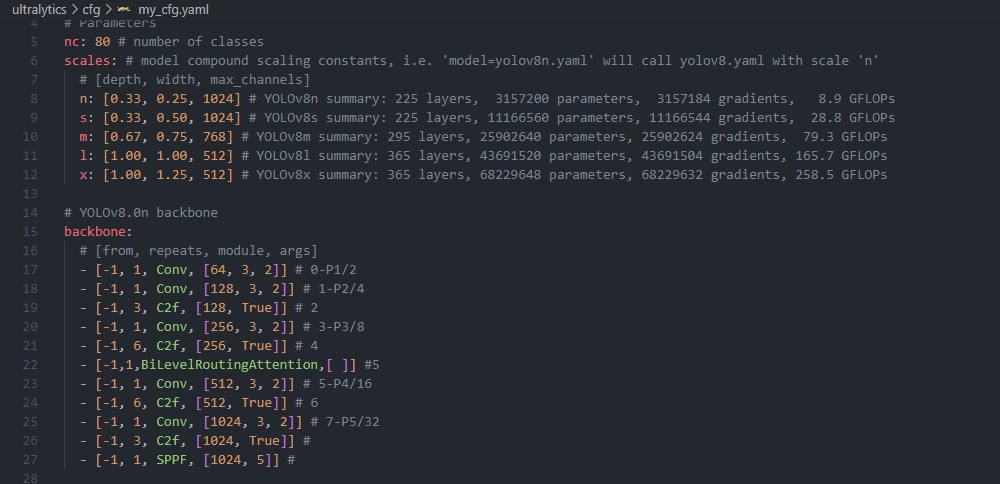

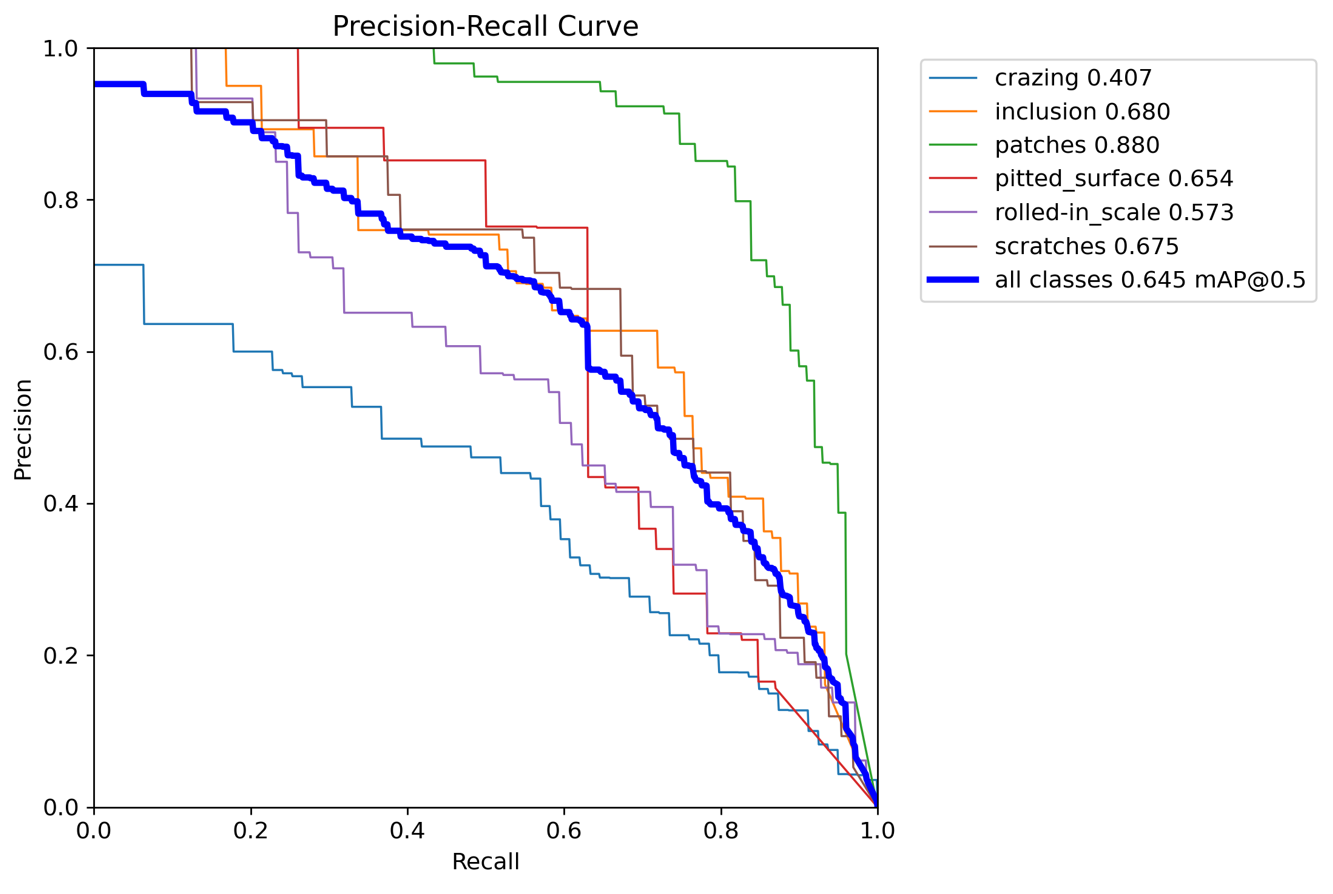

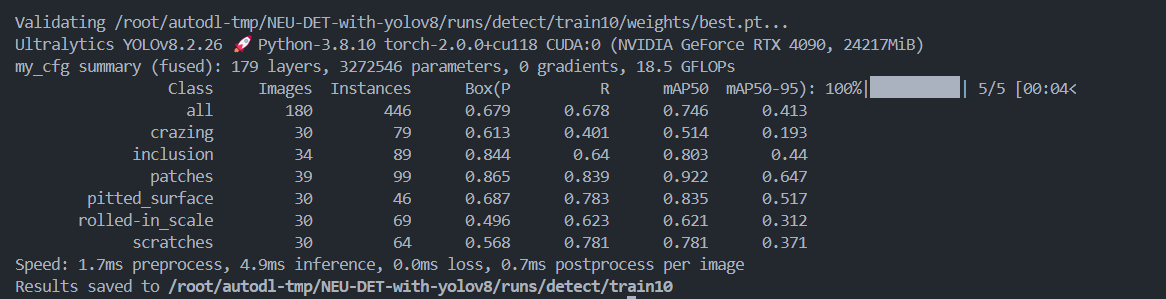

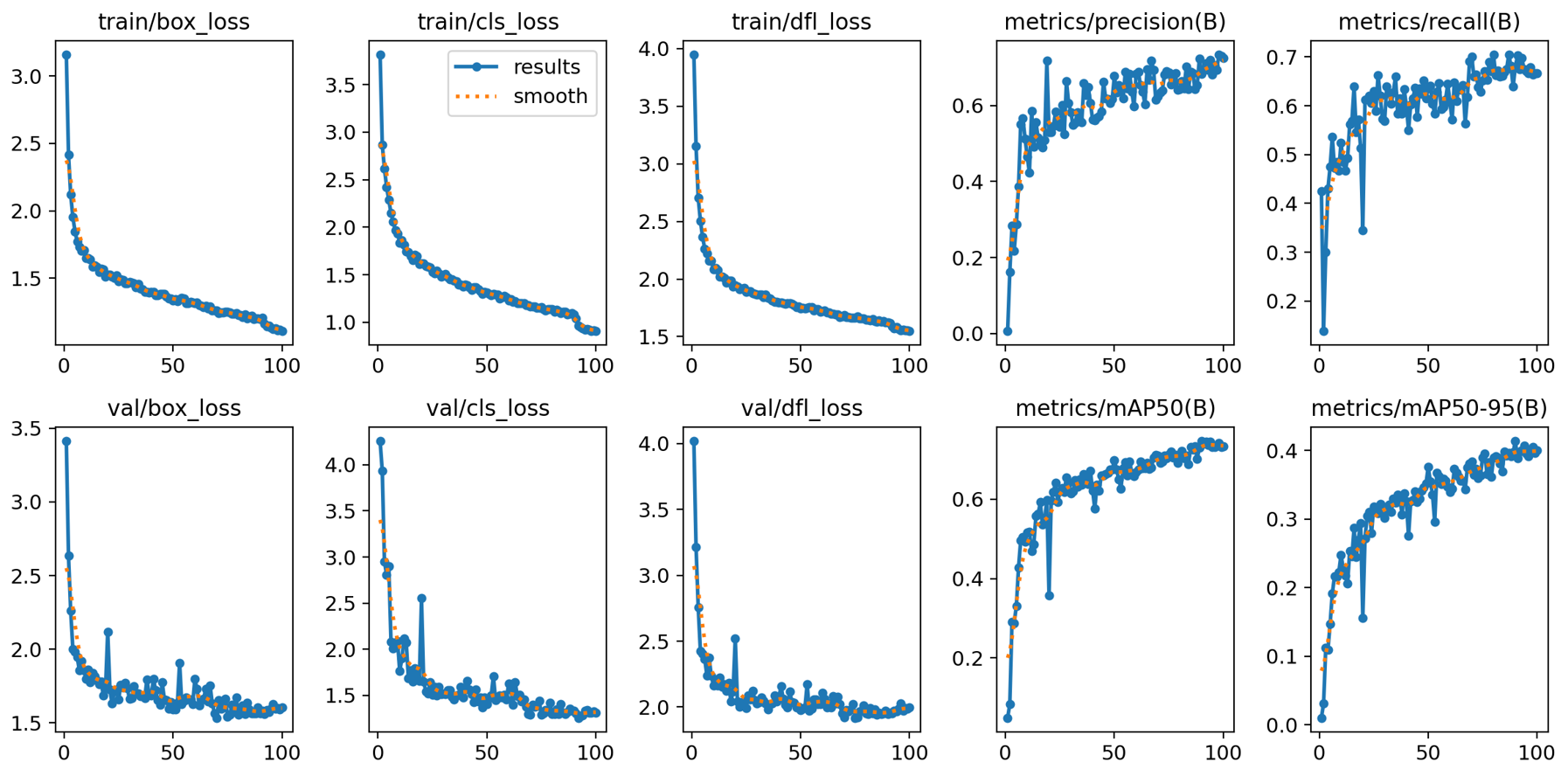

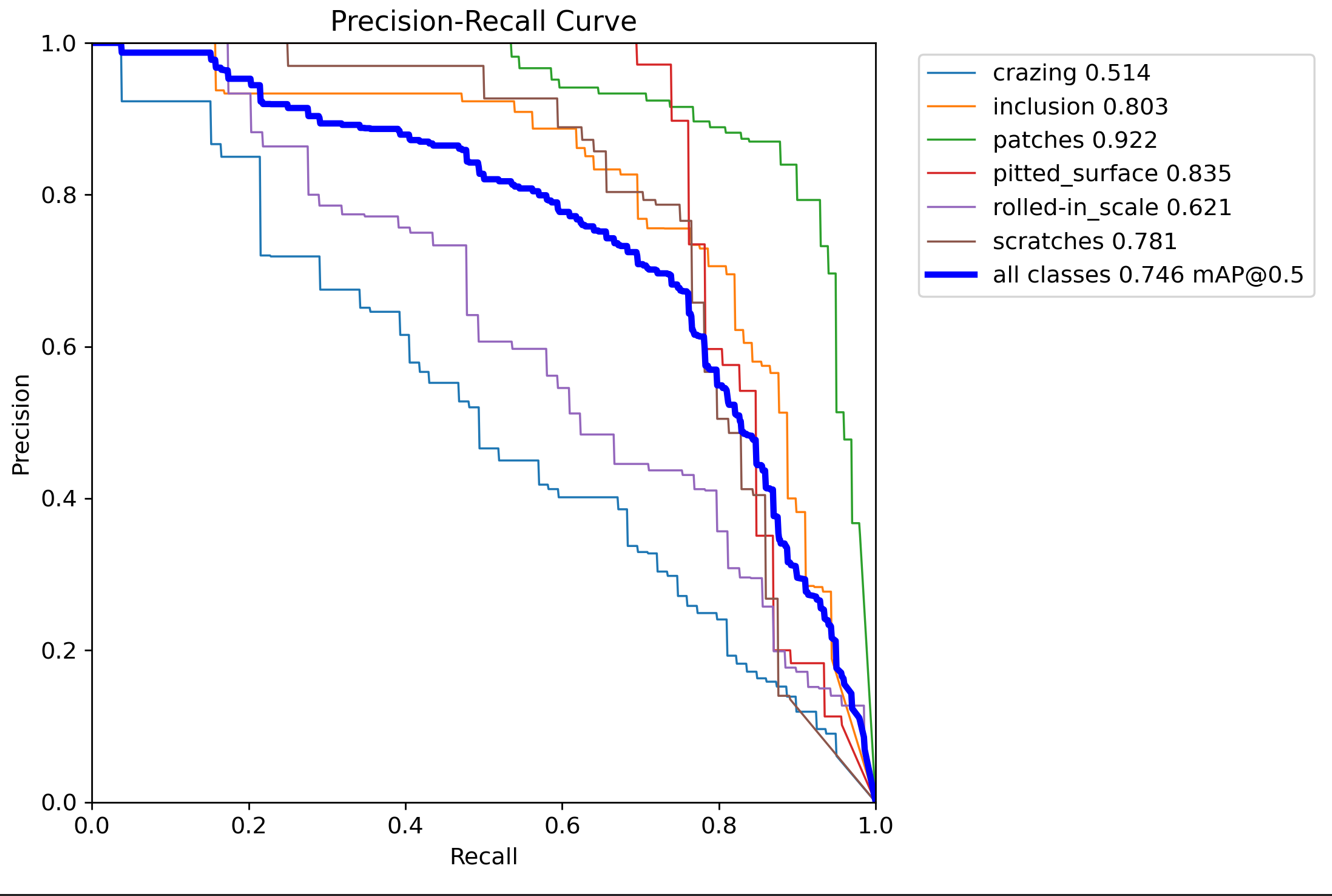

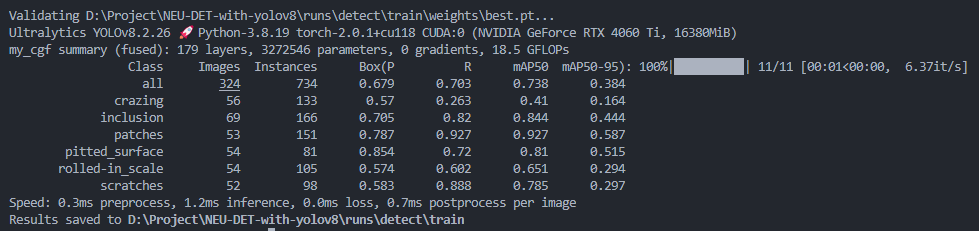

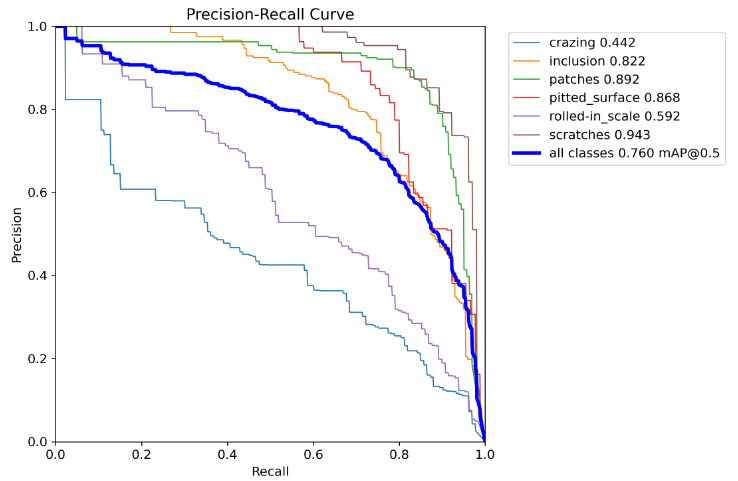

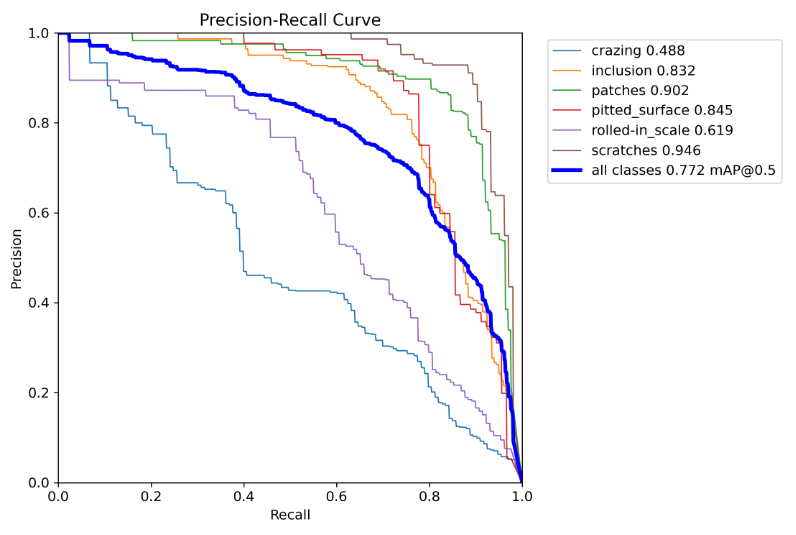

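V8 + BRA attention (74.4)

Config file <inserted at layer 8>

Settings

epoch=100, batch=-1, model=yolov8n

Results

PR_Recall

Question

The attention is mainly placed in the backbone. Would moving it elsewhere change the result?

Computational cost

Adding BRA increases the computational cost considerably, to 18.5 GFLOPs.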

BRA注意力换位置到第五层(降点)

配置文件

结果

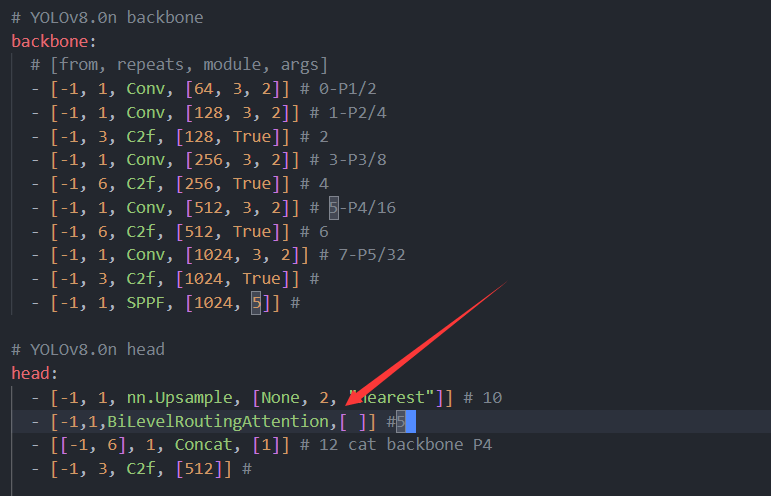

BRA注意力换到11层上采样后面(降点)

配置文件

结果

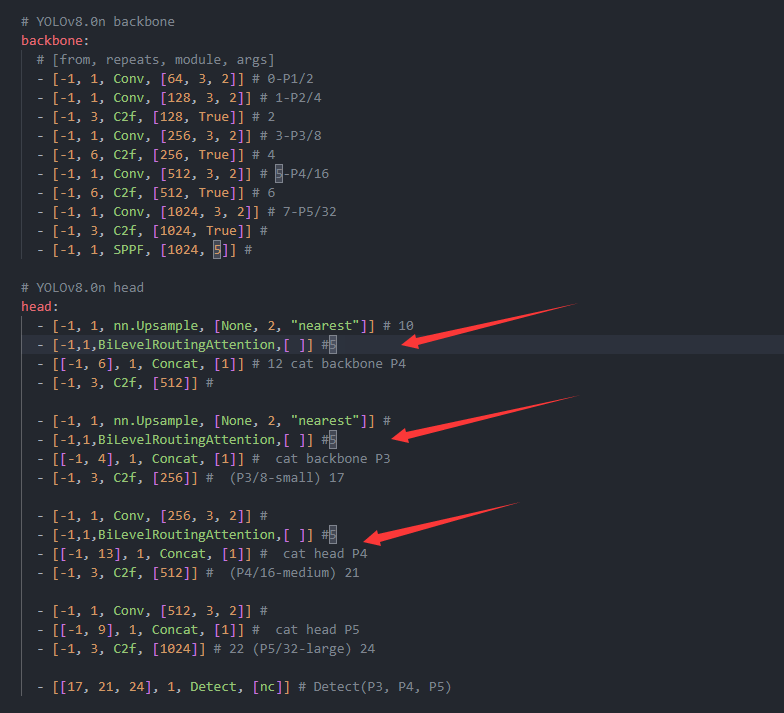

Three BRA attention layers (mAP dropped)

Config file

Results

Conclusion

With three BRA layers the cost reaches 21 GFLOPs, and accuracy still drops.
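For scale, the YOLOv8n baseline is listed as 8.9 GFLOPs in the `n` scale comment of the YOLOv8 model YAML (the configs below repeat that line). The arithmetic below expresses the recorded BRA figures relative to that baseline. It assumes all numbers were measured at the same input size. That comment refers to the 80-class model, so the true baseline for this dataset's class count may differ slightly.

```python
BASELINE_GFLOPS = 8.9  # YOLOv8n, from the 'n' scale comment in the model YAML


def overhead(gflops: float, baseline: float = BASELINE_GFLOPS) -> float:
    """Return the cost as a multiple of the baseline."""
    return gflops / baseline


for name, g in [("BRA x1", 18.5), ("BRA x3", 21.0)]:
    print(f"{name}: {g} GFLOPs = {overhead(g):.2f}x baseline")
```

A single BRA layer roughly doubles the cost, and the second and third layers add comparatively little on top.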

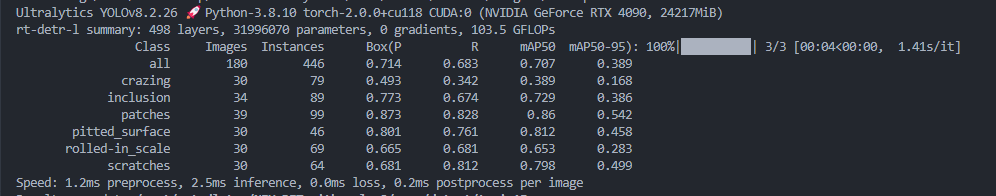

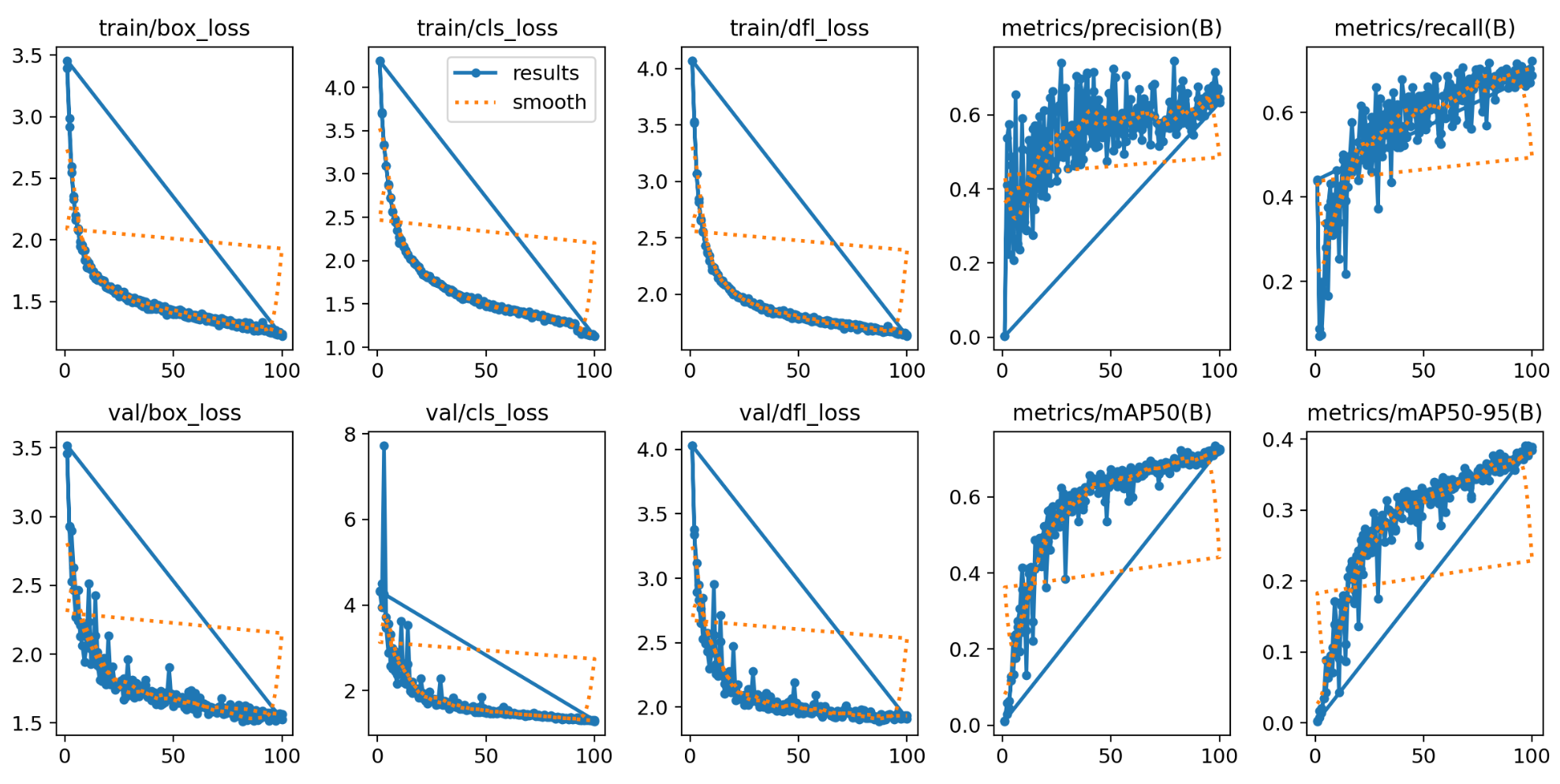

RT-DETR (mAP dropped, overfitting)

Settings

epoch=100, batch=32, model=rtdetr-l

Results

Conclusion

The result plots clearly show overfitting. My guess is that the dataset is too small.

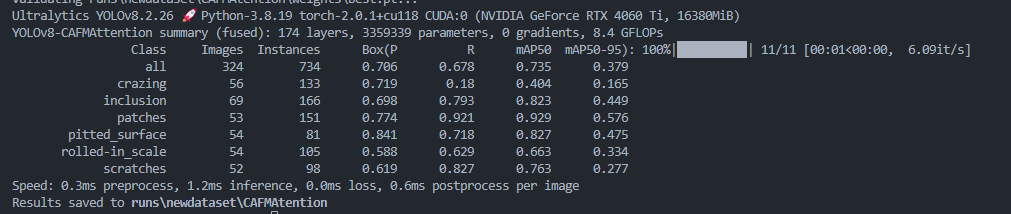

Adding CAFM attention (72.2)

Settings

Same as above

Results

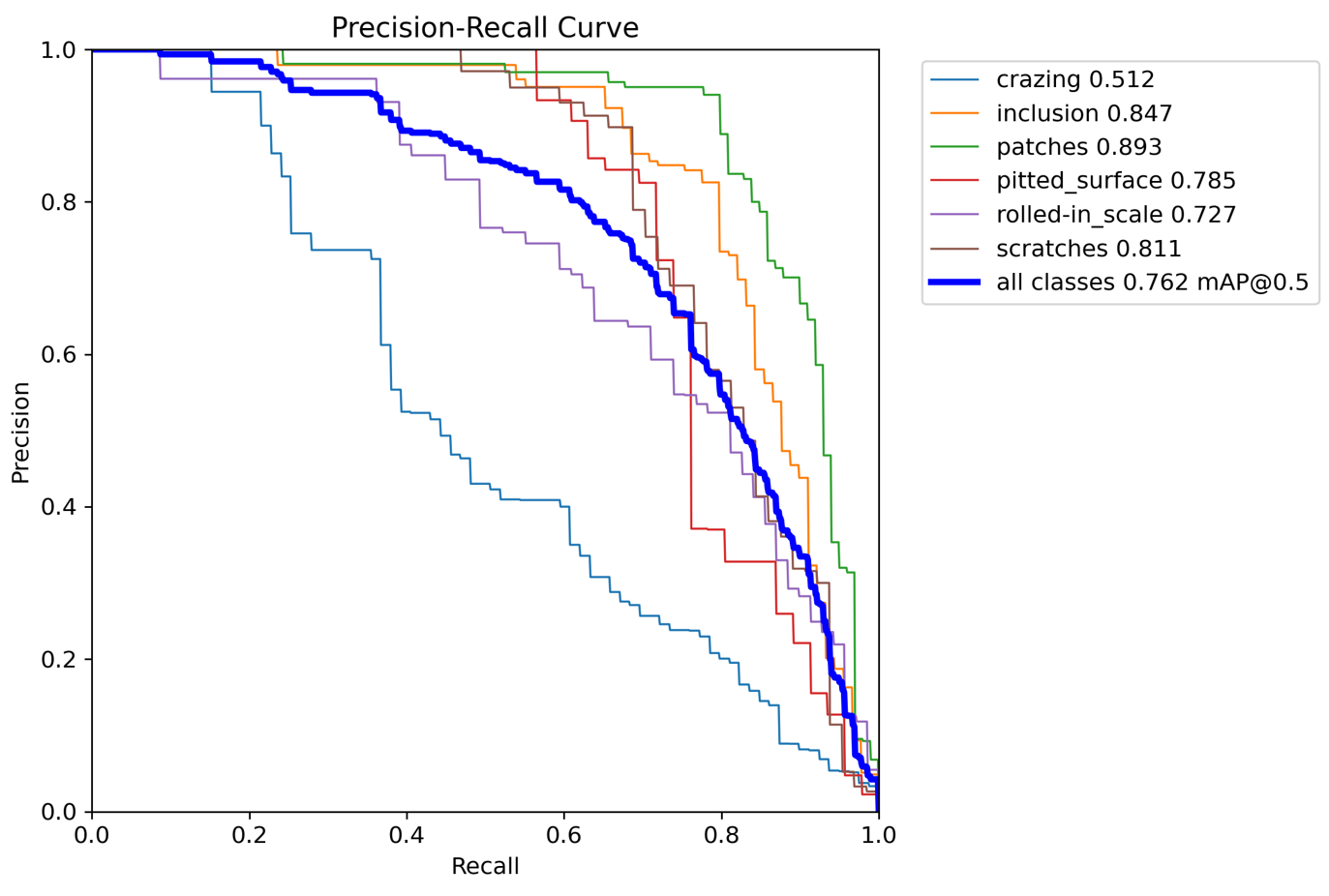

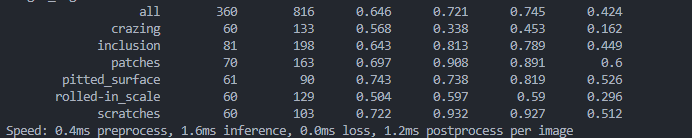

Second dataset split: 1297 training / 325 validation images

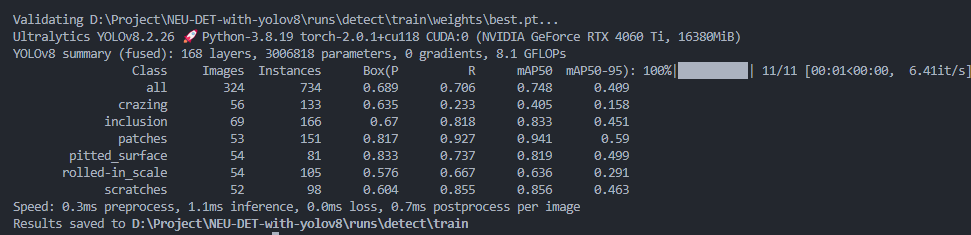

Original v8 (74.8)

Settings

Same as the earlier v8 run

Results

Adding BRA attention (73.8); + ShapeIoU (74.8)

Settings

Same as before

Results

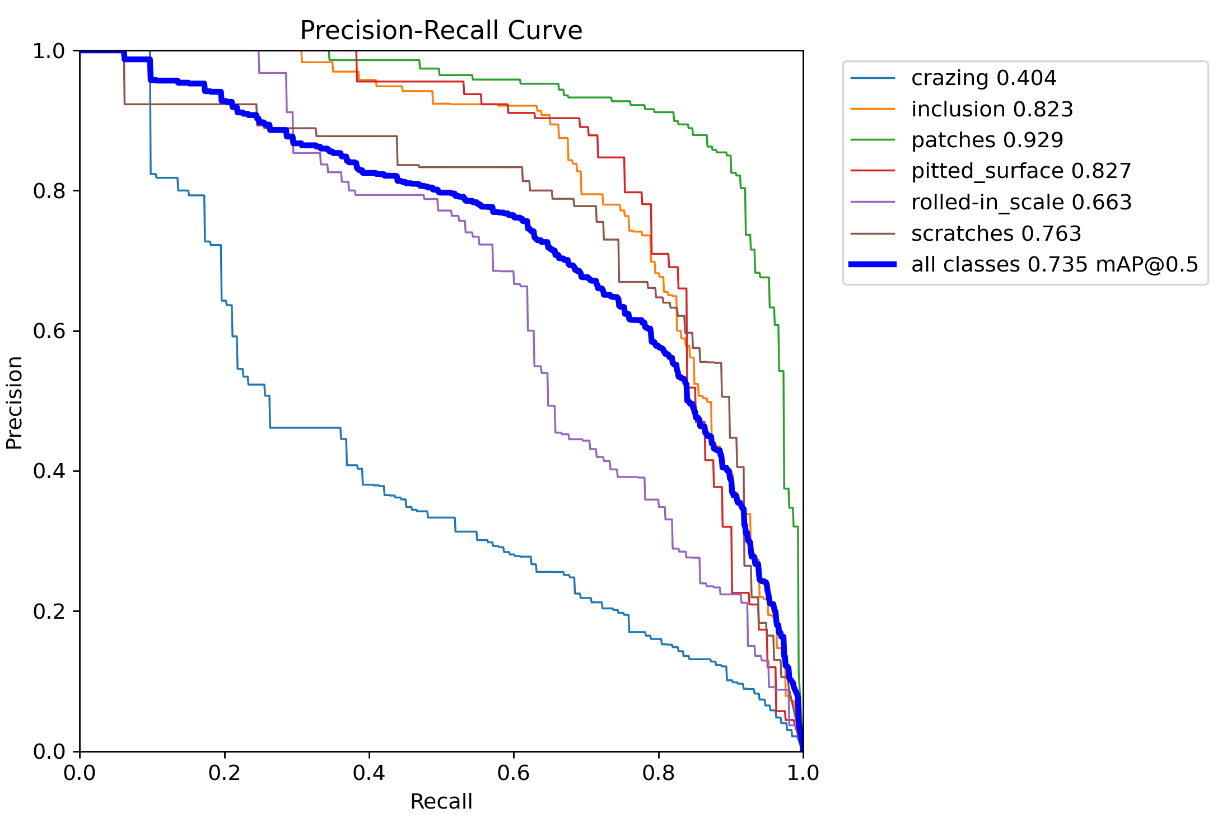

Adding CAFM attention (73.5); with pretrained weights (75.3)

Settings

Same

Results

With pretrained weights

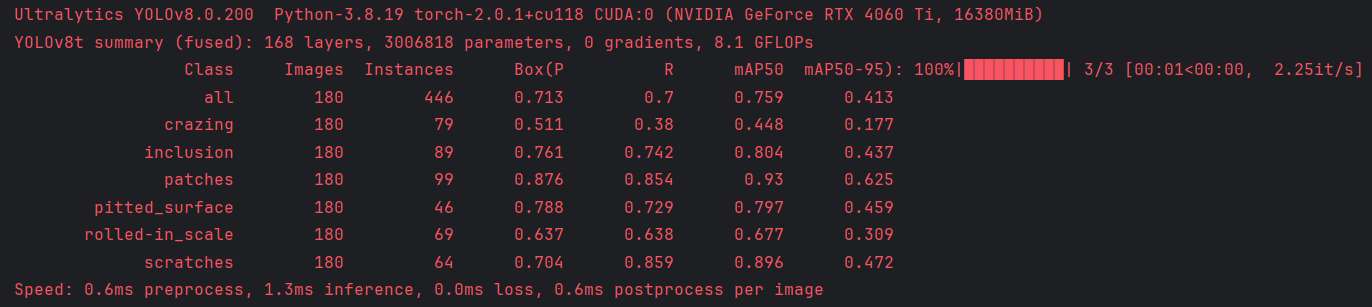

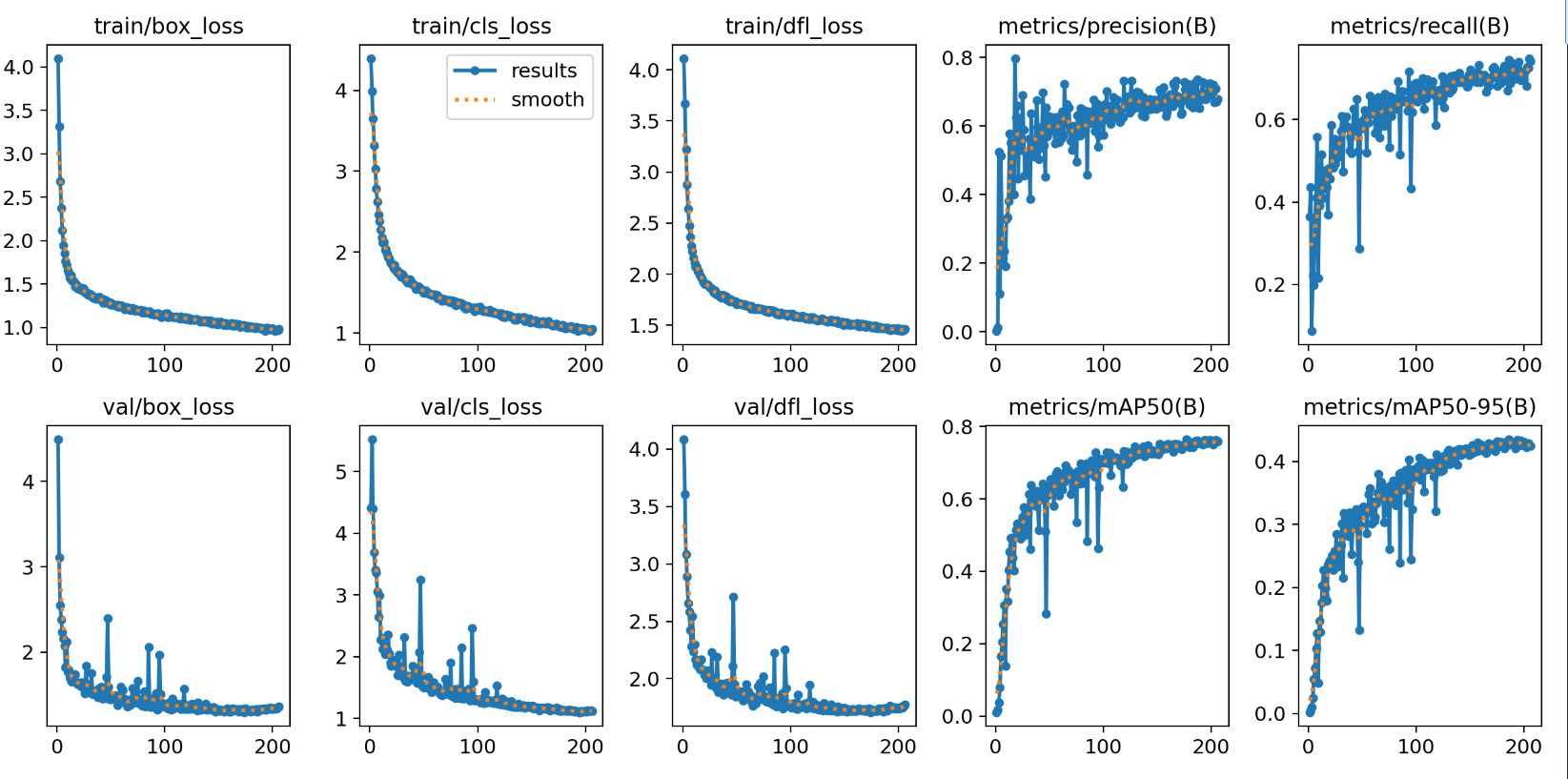

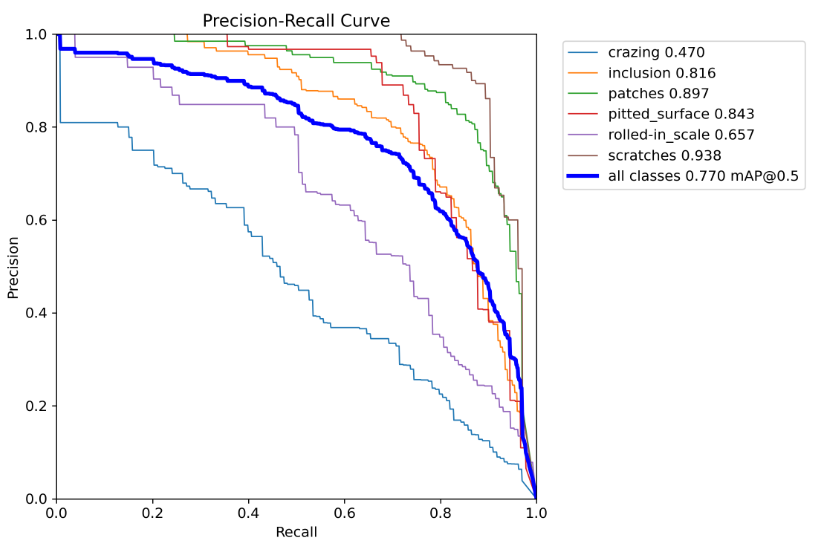

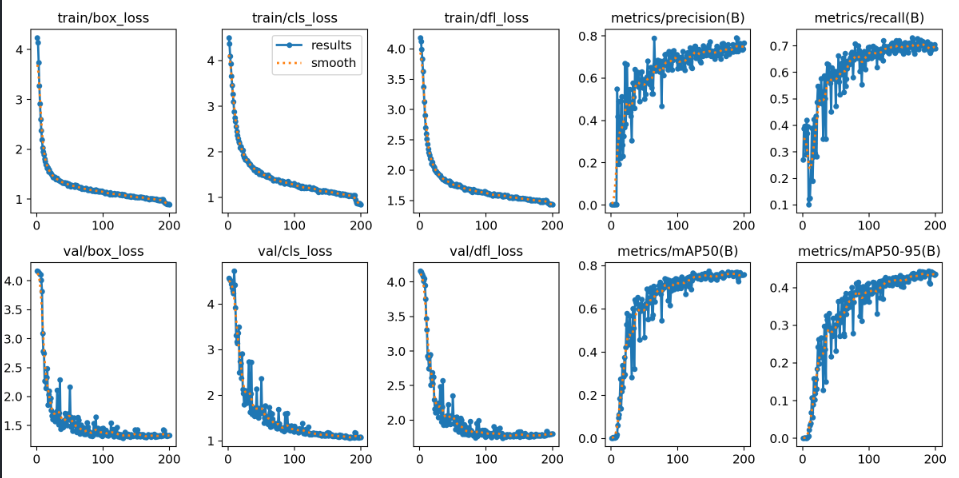

Training on an 8:2 dataset split

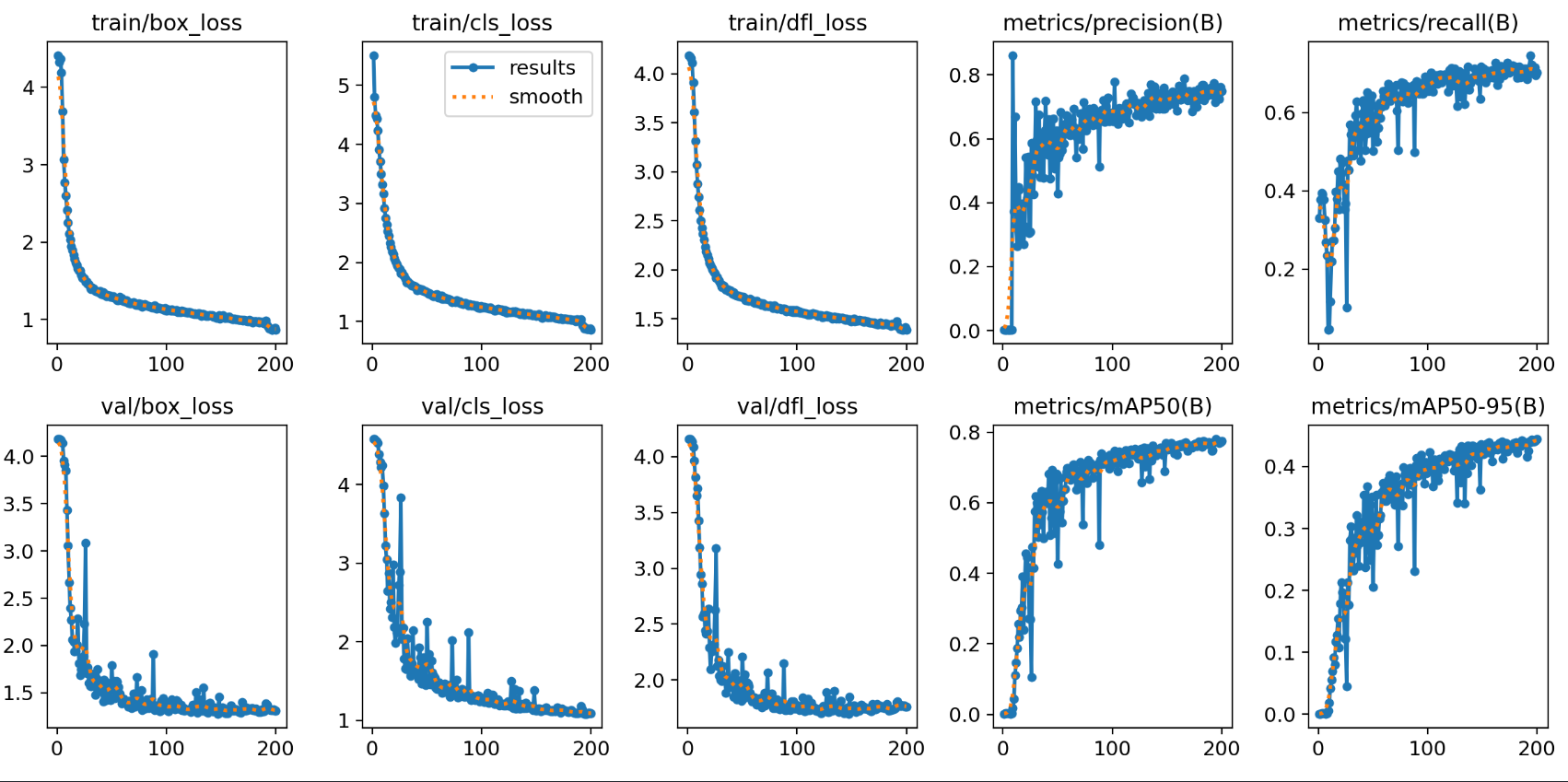

Original V8, no pretrained weights (74.5); 200 epochs (76)

Settings

Same, on an RTX 4060 Ti

Results

206 epochs

YOLOv10 (70.8)↓

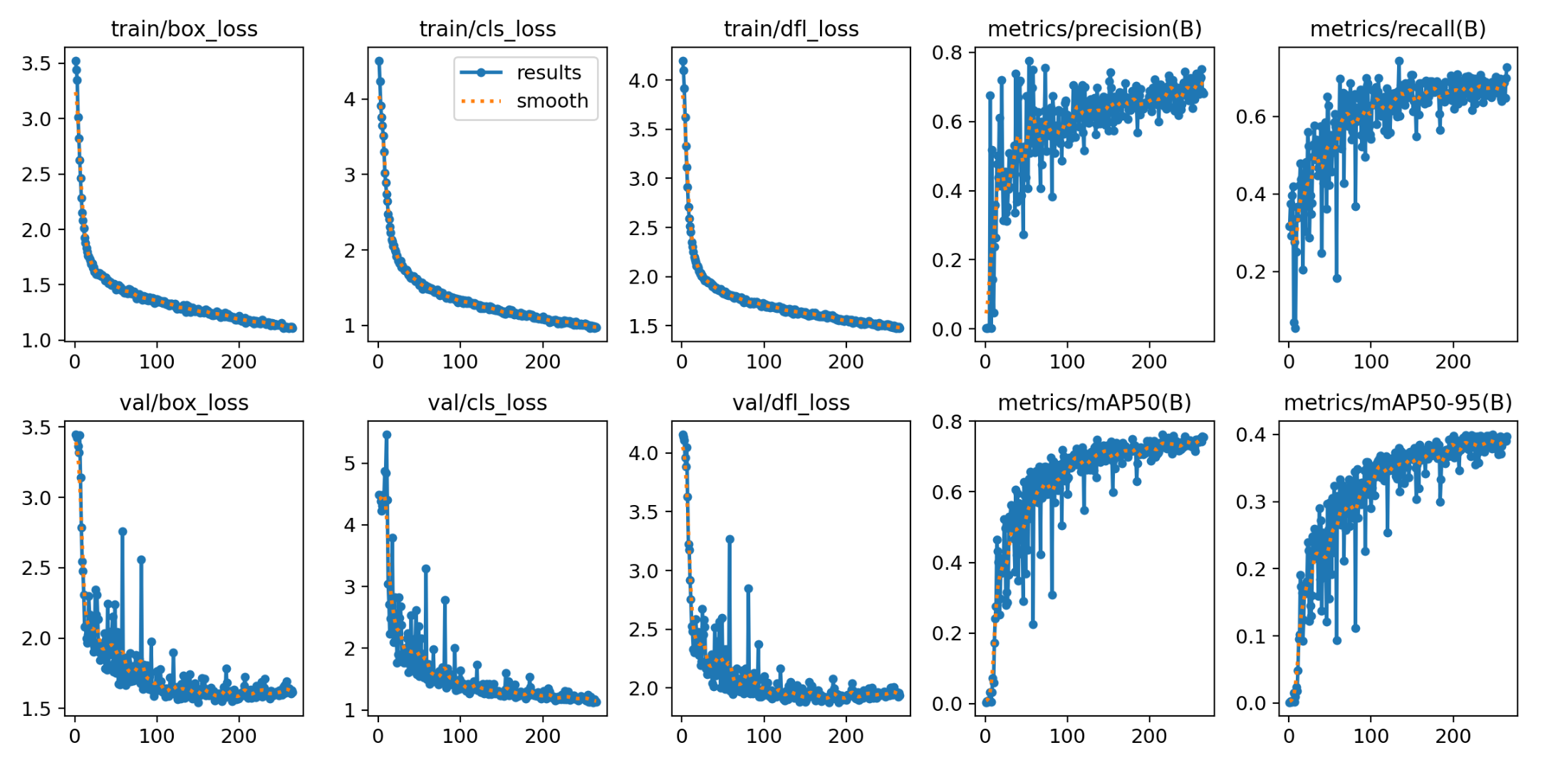

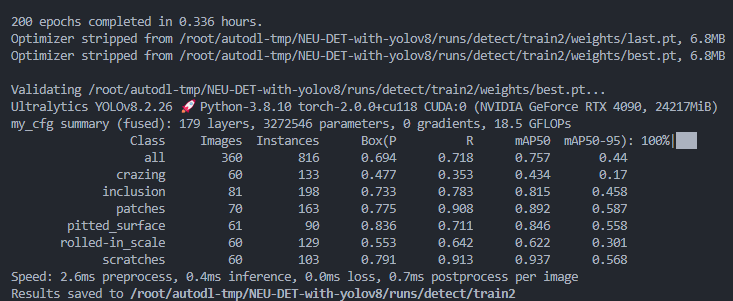

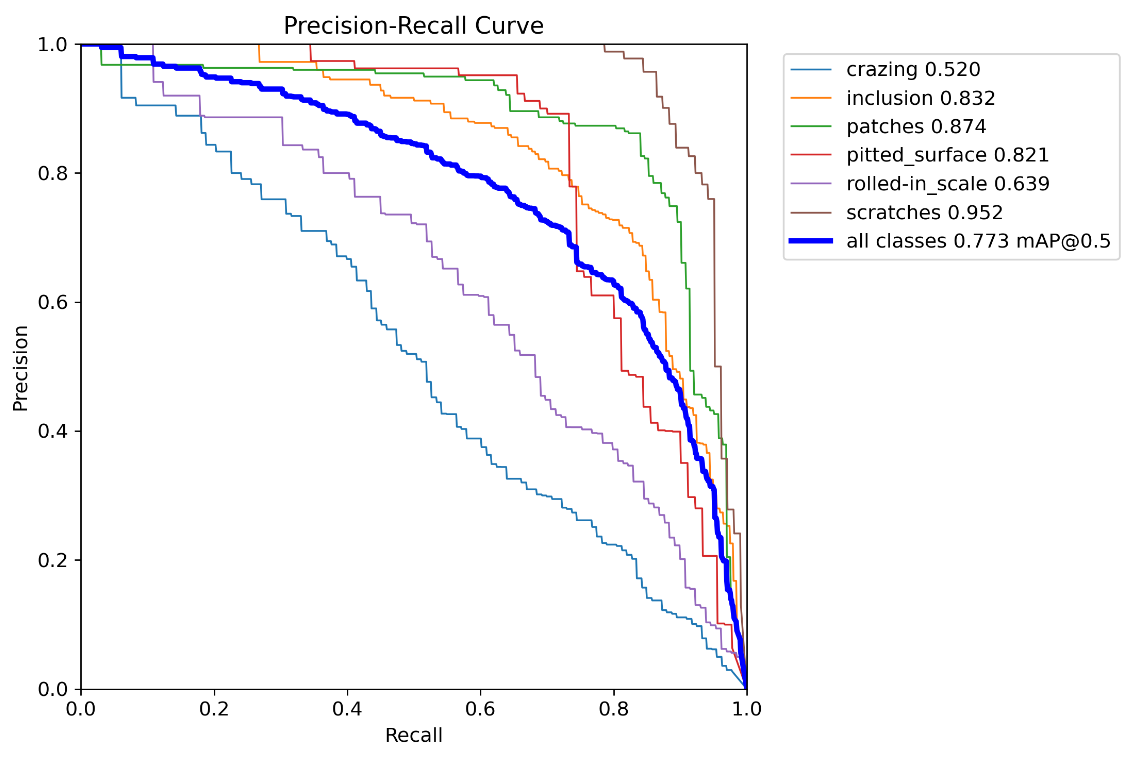

BRA attention + ShapeIoU (75.7)

Settings

200 epochs, 4090ti

Results

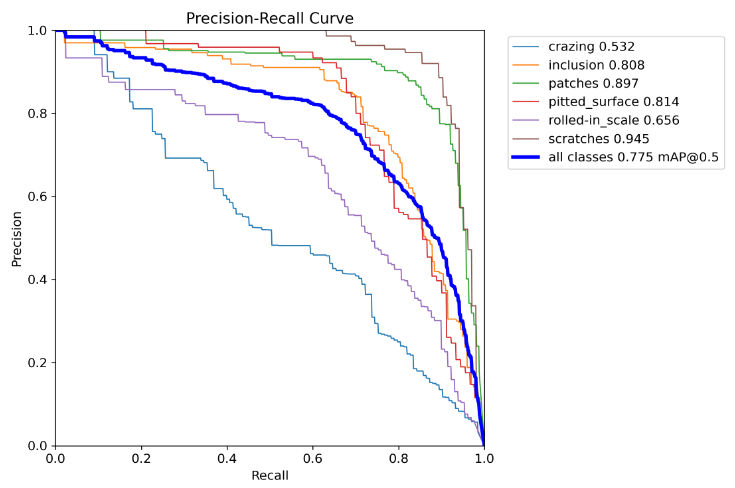

CAA attention (76.3)

Settings

217 epochs

Results

FastEMA (76.6)

Settings

250 epochs

Results

CAA (76.3)

Settings

200 epochs

Results

MDCR (75.4)

200 epochs

OSRAAttention (75.9)

200 epochs

Results

HybridTokenMixer (77.2)

200 epochs

Results

PKIBlock (77.7)

200 epochs: not yet converged; at 300 epochs it dropped to 75.5??

Results

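Shift-ConvNets (76.7)

200 epochs

Results

DAF_CA (77)

200 epochs

Results

MODSConv (76.5)

200 epochs

Results

MODSLayer (76.8)

200 epochs

Results

Detailed results on the server: exp13

DualConv (76.5)

200 epochs

Results

Detailed results on the server: exp14

MSPA (77.2)

200 epochs

Results

Detailed results on the server: exp15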

msblock (73.9)↓

c2f_msblock (71.8)

SPPF_improve()↓

SPPF_UniRepLlk (77.5)↑

- 200 and 250 epochs give the same result

CPMS (77.5)↑

Results

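Backbone replaced with MobileNetV3 + NWD_Loss (74.9)

Results

BRA attention + PConv (76.8)↓

BRA attention comes from BiFormer and is a dynamic sparse attention. PConv comes from FasterNet and is meant to improve the computational speed and efficiency of neural networks.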
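PConv's efficiency comes from convolving only a fraction of the channels and passing the rest through unchanged. The FasterNet paper gives its cost as h·w·k²·c_p² FLOPs, where c_p is the number of convolved channels, and uses c_p = c/4 by default. The quick check below compares that with a regular k×k convolution over all c channels. The feature-map sizes are illustrative, and the counts are multiply-accumulates with bias ignored.

```python
def conv_flops(h: int, w: int, k: int, c: int) -> int:
    """Multiply-accumulates of a k x k conv with c input and c output channels."""
    return h * w * k * k * c * c


def pconv_flops(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """PConv convolves only c_p = ratio * c channels; the other channels are untouched."""
    c_p = int(c * ratio)
    return h * w * k * k * c_p * c_p


h, w, k, c = 80, 80, 3, 256  # illustrative feature-map size
print(pconv_flops(h, w, k, c) / conv_flops(h, w, k, c))  # 0.0625
```

With a quarter of the channels convolved, the cost falls to (1/4)² = 1/16 of a full convolution, which is why PConv is cheap on its own.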

Results

After switching to the PIoU loss, cost is 18.2 GFLOPs (77.8)↑

Results
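PIoU (Powerful-IoU) adds a penalty built from the edge-to-edge distances between the predicted and target boxes, normalized by the target's width and height. The sketch below follows my reading of the v1 loss: L = 1 − IoU + (1 − exp(−P²)), where P is the mean of the four normalized edge distances. Boxes are assumed to be (x1, y1, x2, y2). The extra attention term of PIoU2, used elsewhere in these notes, is not included.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Plain IoU of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def piou_loss(pred: Box, target: Box) -> float:
    """Sketch of PIoU v1: 1 - IoU + f(P), with f(x) = 1 - exp(-x^2)."""
    w_gt = target[2] - target[0]
    h_gt = target[3] - target[1]
    # Mean absolute edge distance, each edge normalized by the target size
    p = (abs(pred[0] - target[0]) / w_gt + abs(pred[2] - target[2]) / w_gt
         + abs(pred[1] - target[1]) / h_gt + abs(pred[3] - target[3]) / h_gt) / 4
    return 1 - iou(pred, target) + (1 - math.exp(-p * p))


print(piou_loss((0, 0, 10, 10), (0, 0, 10, 10)))  # 0.0
```

Normalizing by the target's own size, instead of by an enclosing box, keeps the penalty from shrinking as a poor prediction grows, which is the motivation given for PIoU.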

Three PConv layers (76.3)↓

BRA+ODConv+PConv (74.7)↓

BRA+C2f_ODConv (77.2)↑

Results

V8 + BiFPN (75.1)↓

- Tried several loss functions; every one lowered mAP

V8 + GAM attention in the backbone (77.3)

Results

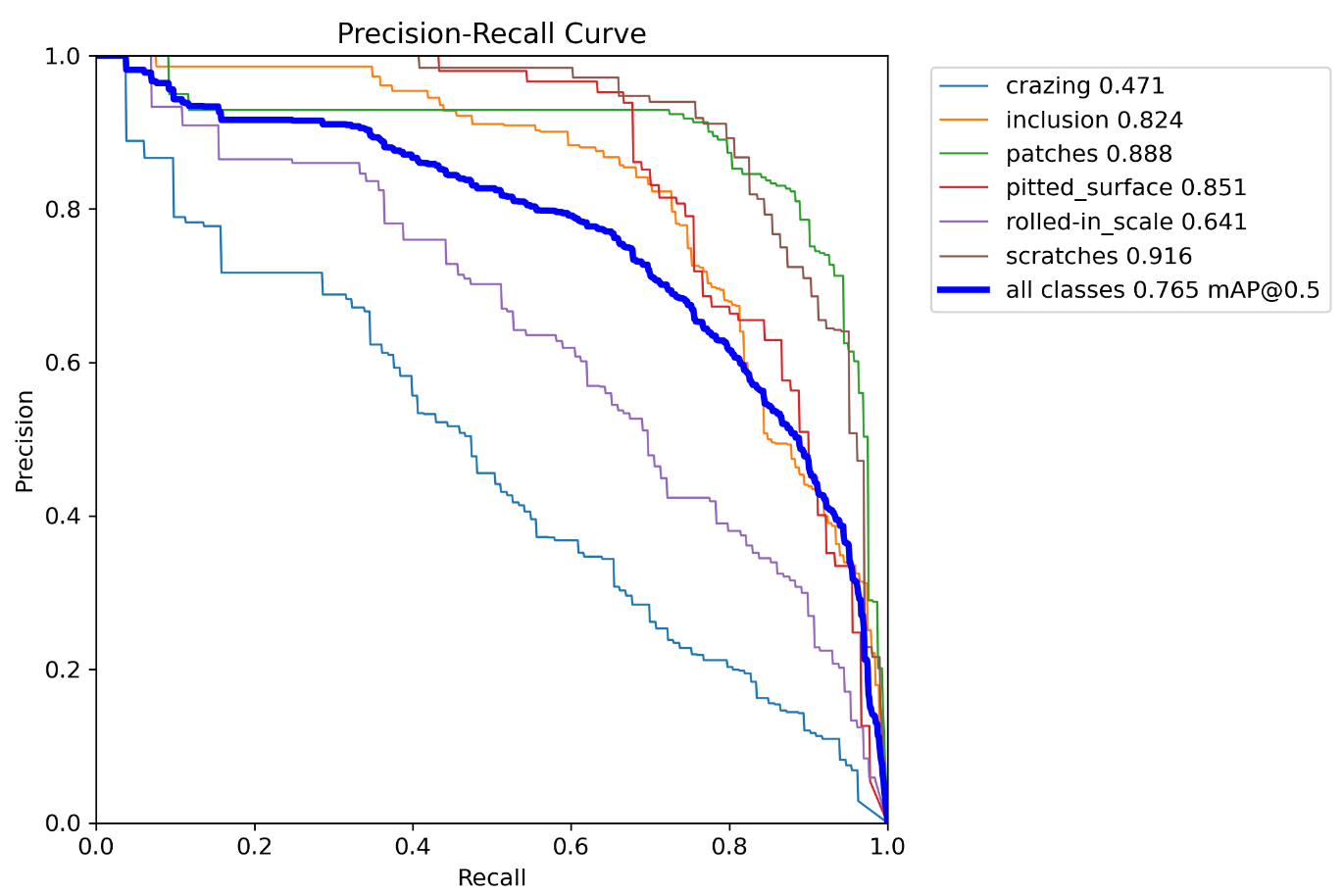

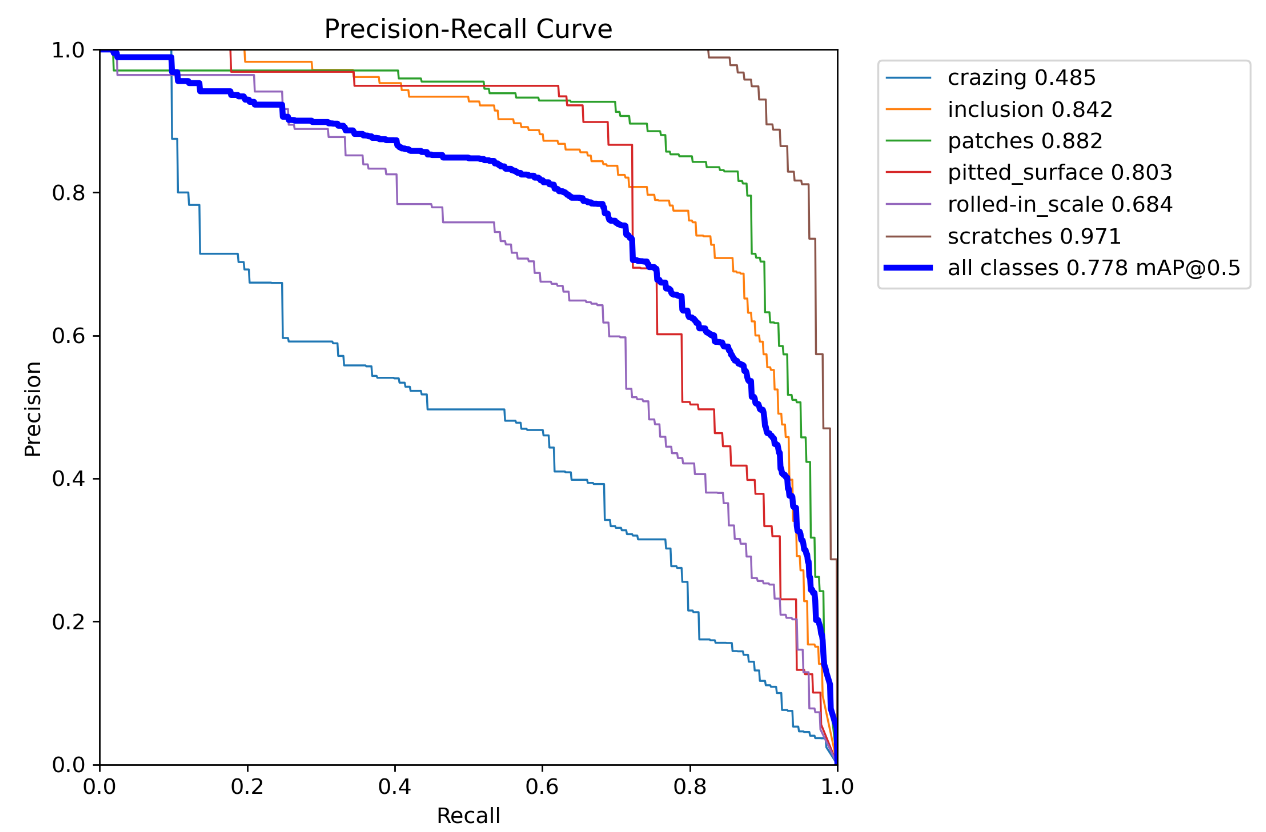

Placed in the neck (78)↑; with NWD loss, 77.7

Results

Added after every C2f in the neck (76.3)

EMA attention + PIoU2 (75.2)↓

+ WIoU v3 (75.3)↓

My combinations

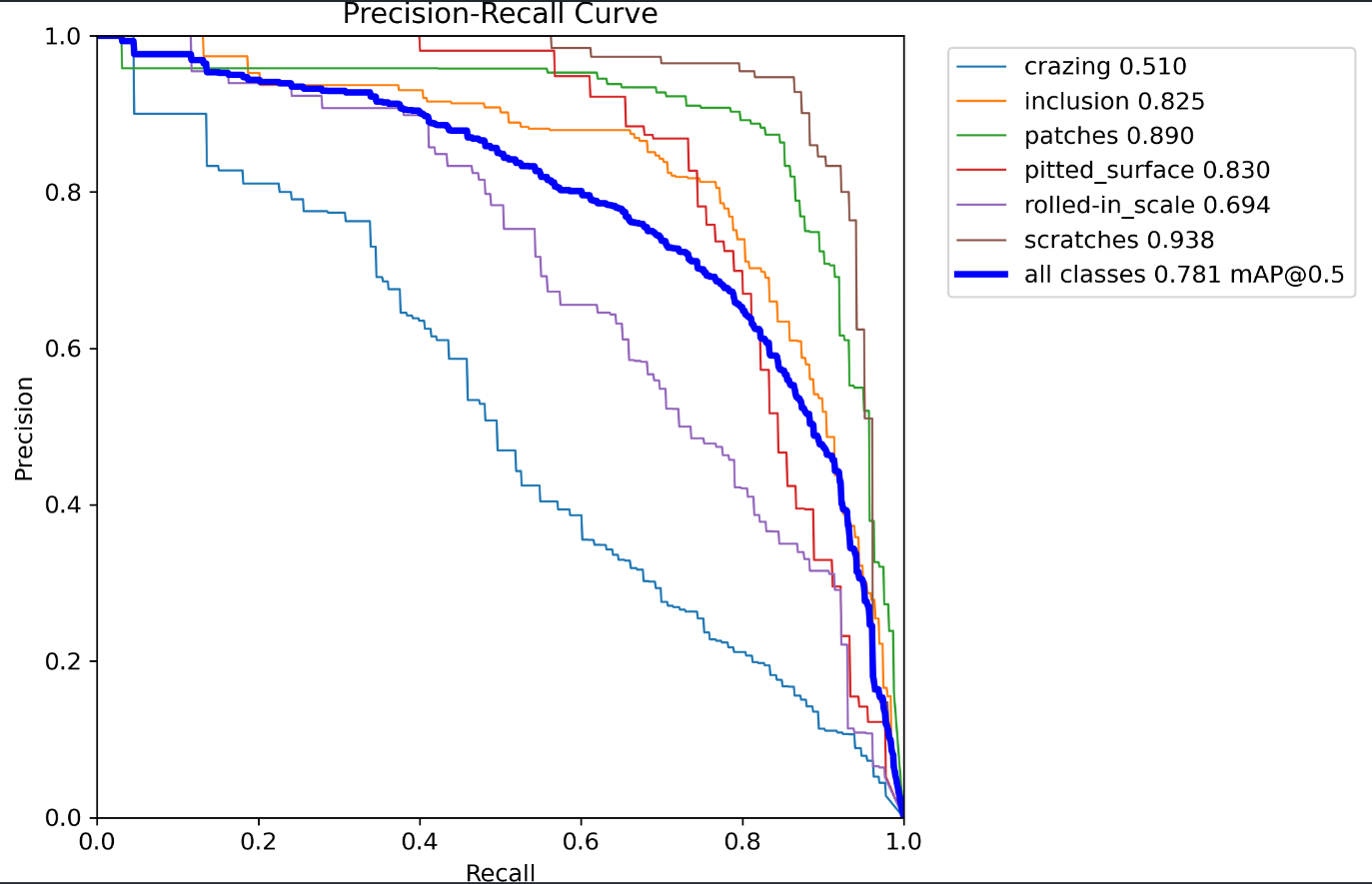

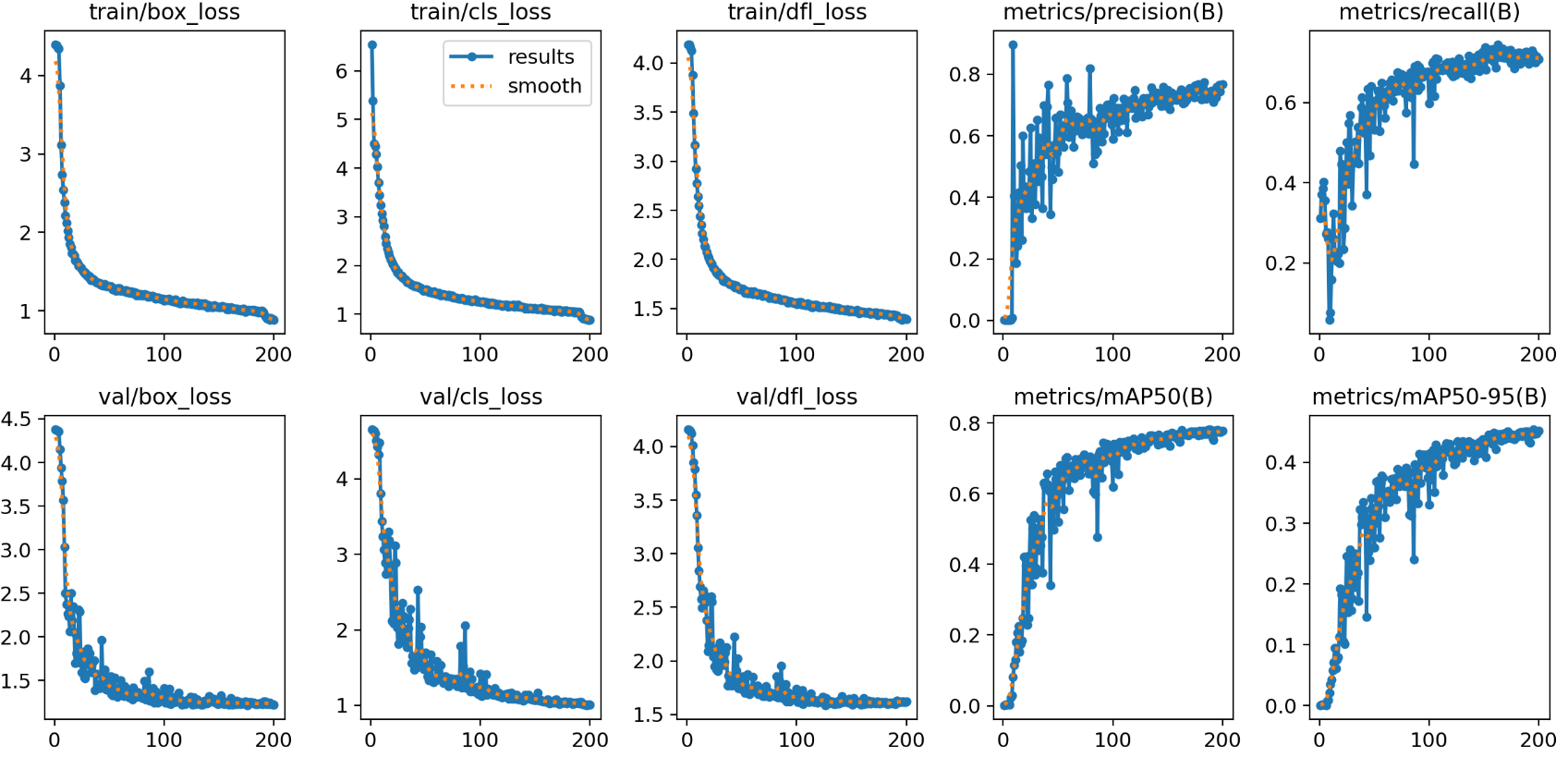

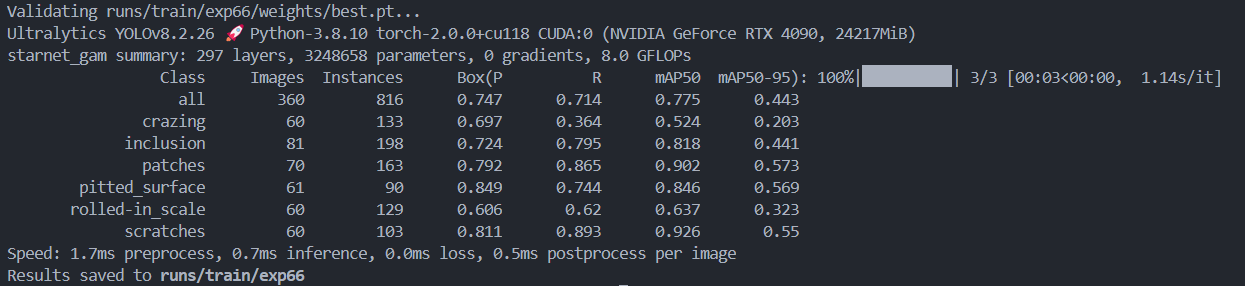

StarNet + PKIBlock (77.8)

Results

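MSPA+PKIBlock (77)↑

Run settings

200 epochs (250 epochs gives the same)

Results

The best result comes from placing PKIBlock at layer 9. Layer 10 and layer 8 were both worse than layer 9. Switching to the original CIoU loss also did worse than IoU.

MSPA+DAF_CA (77)

200 epochs

Results

Warning about nondeterministic algorithms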

MSPA+slimneck+PIoU (76.6)

- 200 epochs

DWR_DRB+slimneck+PIoU (77.7)↑

200 and 250 epochs give identical results

Results

After switching to EIoU (76.5)↓

Results

Switching to Focaler-IoU (76.8)↓

Results
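Both replacements above modify the box-regression loss. EIoU is 1 − IoU plus three penalties: the squared centre distance over the squared diagonal of the smallest enclosing box, and separate squared width and height differences over the enclosing box's width² and height². Focaler-IoU remaps IoU linearly on an interval [d, u] (0 below d, 1 above u) and can be attached to another loss as L + IoU − IoU_focaler. The sketch below is stdlib only and assumes boxes in (x1, y1, x2, y2). The d and u defaults are illustrative, not the values used in these runs.

```python
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Plain IoU of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def eiou_loss(pred: Box, target: Box) -> float:
    """EIoU: 1 - IoU + centre-distance term + separate width and height terms."""
    # Width and height of the smallest box enclosing both
    cw = max(pred[2], target[2]) - min(pred[0], target[0])
    ch = max(pred[3], target[3]) - min(pred[1], target[1])
    # Centre offsets and size differences
    dx = (pred[0] + pred[2]) / 2 - (target[0] + target[2]) / 2
    dy = (pred[1] + pred[3]) / 2 - (target[1] + target[3]) / 2
    dw = (pred[2] - pred[0]) - (target[2] - target[0])
    dh = (pred[3] - pred[1]) - (target[3] - target[1])
    return (1 - iou(pred, target)
            + (dx * dx + dy * dy) / (cw * cw + ch * ch)
            + dw * dw / (cw * cw)
            + dh * dh / (ch * ch))


def focaler(iou_value: float, d: float = 0.0, u: float = 0.95) -> float:
    """Focaler-IoU linear interval mapping; d and u are illustrative defaults."""
    if iou_value < d:
        return 0.0
    if iou_value > u:
        return 1.0
    return (iou_value - d) / (u - d)


def focaler_eiou_loss(pred: Box, target: Box, d: float = 0.0, u: float = 0.95) -> float:
    """Focaler variant of EIoU: L_EIoU + IoU - IoU_focaler."""
    i = iou(pred, target)
    return eiou_loss(pred, target) + i - focaler(i, d, u)


pred, target = (0, 0, 10, 10), (5, 0, 15, 10)  # same size, shifted 5 px
print(round(eiou_loss(pred, target), 4), round(focaler_eiou_loss(pred, target), 4))
```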

Switching to NWD_loss <ratio=0.5> (77.3)

Results

ratio=0.7 (77)
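NWD (Normalized Gaussian Wasserstein Distance) models each box (cx, cy, w, h) as a 2-D Gaussian. It turns the second-order Wasserstein distance between two boxes into a similarity exp(−W₂/C). This value stays informative even when small boxes do not overlap at all (IoU = 0), which is why NWD targets tiny objects. In the sketch below, C = 12.8 is a value seen in public implementations and is an assumption. The notes do not record how `ratio` mixes NWD with IoU, so `blended_box_loss` is a hypothetical helper that assumes `ratio` weights the IoU term.

```python
import math
from typing import Tuple

XYWH = Tuple[float, float, float, float]  # (cx, cy, w, h)


def nwd(a: XYWH, b: XYWH, c: float = 12.8) -> float:
    """Normalized Gaussian Wasserstein Distance between two boxes seen as 2-D Gaussians."""
    w2_sq = ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2
             + ((a[2] - b[2]) / 2) ** 2 + ((a[3] - b[3]) / 2) ** 2)
    return math.exp(-math.sqrt(w2_sq) / c)


def blended_box_loss(iou_value: float, nwd_value: float, ratio: float = 0.5) -> float:
    """Assumed blend: ratio weights the IoU term, (1 - ratio) the NWD term."""
    return ratio * (1 - iou_value) + (1 - ratio) * (1 - nwd_value)


same = nwd((50, 50, 8, 8), (50, 50, 8, 8))
shifted = nwd((50, 50, 8, 8), (53, 54, 8, 8))  # centre moved by 5 px
print(same, round(shifted, 3))
```

For two 4-px boxes 10 px apart, IoU is 0 but `nwd((0, 0, 4, 4), (10, 0, 4, 4))` is still about 0.46. This nonzero value gives the regressor a gradient where an IoU-based loss saturates.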

DWR_DRB+slimneck+SPPF_UniRep (76.8)

Results

Without slimneck (77)

- mAP 77

c2f_DWR_DRB+CPMS+Slimneck (77)↑

Results

DWR_DRB+slimneck+LADH (77.3)

Results

GAM+DWR_DRB+NWD (75.7)↓

GAM+DWR_DRB+PIoU2 (78)↑

3.5M parameters

GAM+StarNet, neck not replaced (75.6)↓

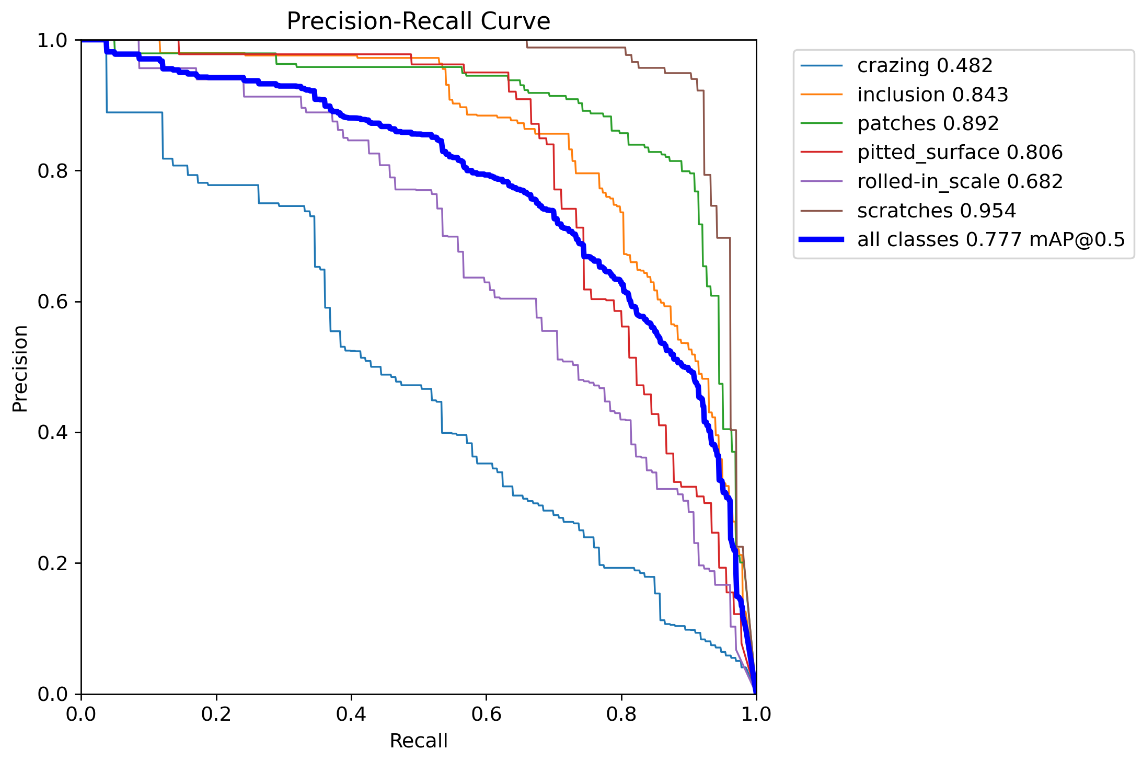

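GAM+StarNet, neck replaced (78.1)↑

3.5M parameters

StarNet in the last two backbone layers and throughout the neck (74.4)

Switching to the WIoU v3 loss (77.5)↓

Replacing every C2f in the neck (77.7)

GAM+Slimneck+PIoU2 (76.1)

Config file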

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f, [1024, True]]
  - [-1, 1, GAM_Attention, [1024]] # 9
  - [-1, 1, SPPF, [1024, 5]] # 10

head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, VoVGSCSPns, [512]] # 13

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, VoVGSCSPns, [256]] # 16 (P3/8-small)

  - [-1, 1, GSConvns, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 3, VoVGSCSPns, [512]] # 19 (P4/16-medium)

  - [-1, 1, GSConvns, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 3, VoVGSCSPns, [1024]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```

Results: exp71
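The head indices in this GAM config are those of the WSS-YOLO head further down, shifted by one. GAM_Attention occupies backbone index 9, so SPPF moves to 10, and every absolute index at or after 9 goes up by one: Concat 12 → 13 and 9 → 10, Detect [15, 18, 21] → [16, 19, 22]. References to layers 4 and 6 and the relative -1 entries stay put. The hypothetical helper below does this bookkeeping on a head written as plain Python lists, with no YAML parsing. It does not update the `# 13`-style index comments.

```python
from typing import List, Union

From = Union[int, List[int]]
Layer = list  # [from, repeats, module, args], as written in the model YAML


def shift_from(src: From, insert_at: int) -> From:
    """Bump absolute indices >= insert_at by one; relative (-1) entries are untouched."""
    if isinstance(src, list):
        return [shift_from(s, insert_at) for s in src]
    return src + 1 if src >= insert_at else src


def shift_head(head: List[Layer], insert_at: int) -> List[Layer]:
    """Return a copy of the head with its `from` fields re-indexed."""
    return [[shift_from(layer[0], insert_at)] + layer[1:] for layer in head]


# Head of the WSS-YOLO config (written for a 10-layer backbone, SPPF at index 9)
head = [
    [-1, 1, "nn.Upsample", [None, 2, "nearest"]],
    [[-1, 6], 1, "Concat", [1]],
    [-1, 3, "VoVGSCSP", [512]],
    [-1, 1, "nn.Upsample", [None, 2, "nearest"]],
    [[-1, 4], 1, "Concat", [1]],
    [-1, 3, "VoVGSCSP", [256]],
    [-1, 1, "GSConv", [256, 3, 2]],
    [[-1, 12], 1, "Concat", [1]],
    [-1, 3, "VoVGSCSP", [512]],
    [-1, 1, "GSConv", [512, 3, 2]],
    [[-1, 9], 1, "Concat", [1]],
    [-1, 3, "VoVGSCSP", [1024]],
    [[15, 18, 21], 1, "Detect", ["nc"]],
]
# One extra backbone layer (e.g. an attention block) inserted at index 9, before SPPF
shifted = shift_head(head, insert_at=9)
print([layer[0] for layer in shifted if layer[0] != -1])
# [[-1, 6], [-1, 4], [-1, 13], [-1, 10], [16, 19, 22]]
```

The same rule explains the layer-placement experiments (BRA at layer 5, 8 or 11; PKIBlock at layer 8, 9 or 10): every insertion point changes which absolute indices downstream layers must use.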

GAM+StarNet+Slimneck+PIoU2 (75)

Config file

```yaml
# Ultralytics YOLO 🚀, AGPL-3.0 license
# YOLOv8 object detection model with P3-P5 outputs. For Usage examples see https://docs.ultralytics.com/tasks/detect

# Parameters
nc: 80 # number of classes
scales: # model compound scaling constants, i.e. 'model=yolov8n.yaml' will call yolov8.yaml with scale 'n'
  # [depth, width, max_channels]
  n: [0.33, 0.25, 1024] # YOLOv8n summary: 225 layers, 3157200 parameters, 3157184 gradients, 8.9 GFLOPs
  s: [0.33, 0.50, 1024] # YOLOv8s summary: 225 layers, 11166560 parameters, 11166544 gradients, 28.8 GFLOPs
  m: [0.67, 0.75, 768] # YOLOv8m summary: 295 layers, 25902640 parameters, 25902624 gradients, 79.3 GFLOPs
  l: [1.00, 1.00, 512] # YOLOv8l summary: 365 layers, 43691520 parameters, 43691504 gradients, 165.7 GFLOPs
  x: [1.00, 1.25, 512] # YOLOv8x summary: 365 layers, 68229648 parameters, 68229632 gradients, 258.5 GFLOPs

# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f_StarNB, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f_StarNB, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f_StarNB, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f_StarNB, [1024, True]]
  - [-1, 1, GAM_Attention, [1024]] # 9
  - [-1, 1, SPPF, [1024, 5]] # 10

head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, VoVGSCSPns, [512]] # 13

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, VoVGSCSPns, [256]] # 16 (P3/8-small)

  - [-1, 1, GSConvns, [256, 3, 2]]
  - [[-1, 13], 1, Concat, [1]] # cat head P4
  - [-1, 3, VoVGSCSPns, [512]] # 19 (P4/16-medium)

  - [-1, 1, GSConvns, [512, 3, 2]]
  - [[-1, 10], 1, Concat, [1]] # cat head P5
  - [-1, 3, VoVGSCSPns, [1024]] # 22 (P5/32-large)

  - [[16, 19, 22], 1, Detect, [nc]] # Detect(P3, P4, P5)
```

Results: exp72

Reproducing WSS-YOLO ()

Config

```yaml
# YOLOv8.0n backbone
backbone:
  # [from, repeats, module, args]
  - [-1, 1, Conv, [64, 3, 2]] # 0-P1/2
  - [-1, 1, Conv, [128, 3, 2]] # 1-P2/4
  - [-1, 3, C2f, [128, True]]
  - [-1, 1, Conv, [256, 3, 2]] # 3-P3/8
  - [-1, 6, C2f, [256, True]]
  - [-1, 1, Conv, [512, 3, 2]] # 5-P4/16
  - [-1, 6, C2f_DySnakeConv, [512, True]]
  - [-1, 1, Conv, [1024, 3, 2]] # 7-P5/32
  - [-1, 3, C2f_DySnakeConv, [1024, True]]
  - [-1, 1, SPPF, [1024, 5]] # 9

head:
  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 6], 1, Concat, [1]] # cat backbone P4
  - [-1, 3, VoVGSCSP, [512]] # 12

  - [-1, 1, nn.Upsample, [None, 2, 'nearest']]
  - [[-1, 4], 1, Concat, [1]] # cat backbone P3
  - [-1, 3, VoVGSCSP, [256]] # 15 (P3/8-small)

  - [-1, 1, GSConv, [256, 3, 2]]
  - [[-1, 12], 1, Concat, [1]] # cat head P4
  - [-1, 3, VoVGSCSP, [512]] # 18 (P4/16-medium)

  - [-1, 1, GSConv, [512, 3, 2]]
  - [[-1, 9], 1, Concat, [1]] # cat head P5
  - [-1, 3, VoVGSCSP, [1024]] # 21 (P5/32-large)

  - [[15, 18, 21], 1, Detect, [nc]] # Detect(P3, P4, P5)
```